Snowboarding in Åre, Sweden

The last time I was out snowboarding with friends was January 2020, not long before the world went to shit. Little did we know that there would be limited opportunities to do it again until 2 years later.

Around mid January, the small WhatsApp group of us who went boarding at La Clusaz in 2020 started chatting about getting the gang together and hitting the slopes again this year.

I booked a place in Åre, Sweden that Kristaps found on Airbnb, which was more than big enough for all of us, but unfortunately not everyone was able to come this year due to work commitments and the timing of the spontaneous decision to go.

In the end there were only 3 of us this year. We met up at Östersund airport and all jumped in a hire car for the 90 minute journey to Åre. It was the first time I'd driven a vehicle with studded tires, and on roads covered with so much snow, so it was a bit of an adventure.

Pillerin, Matt & Myself

After getting food supplies for the week en route to Åre, we got to the Airbnb and unpacked, ready for the following day's boarding. We were at the Tegefjäll part of Åre and the slopes were comfortably quiet, with very little queuing to get on a lift, which was amazing.

In our little resort of Tegefjäll, 10 mins drive from the main resort, the slopes were comfortably quiet: enough people there to give an atmosphere but quiet enough not to be crashing into people.

At the top of the lift from our area there was a great little tipi where we popped in a few times for a lunchtime beer and burger to replenish our energy for boarding. This little place was much more fun and cost than the more commercial eating places dotted on the slopes.

We spent the majority of the time at Tegefjäll, getting the lifts and boarding across to Duved, another area linked by lifts and slopes. We easily spent a day getting the lifts, boarding up and down and across to Duved, grabbing lunch, and by the time we boarded back the lifts were closing. It was quite a leisurely area and a pleasing way to spend the daylight.

The weather was a bit hit and miss over the week, so most of the lifts in the main Åre resort were closed due to wind, and I think it wasn't until the Thursday that we managed to get across to the main resort and try the slopes there. There was quite a bit of queuing to get to the very top but once we got there it was amazing, everything was covered in layers and layers of snow dust.

Getting down from the top was fun as there were quite a few flat parts of the run requiring a fair amount of speed to get across without having to take the board off and walk. It was so soft and powdery near the top, and at some points visibility was pretty limited.

We finished the last couple of days back at Tegefjäll and had a very slow last day on Saturday as we were all aching from a LOT of boarding. We finished almost all the food we had bought for the week that evening and watched The Muppets film (2011) which is still brilliant! The whole week flew by and it was so good to be back on the slopes with friends after the last couple of years. Next trip is to Dubai working on the closing of the Expo 2020, stay tuned 🙂

Twenty-Twenty-One

It's difficult to know how to start this new blog post in the new year after what a fuck up 2020 was. It would be nice to think that as it's a new Gregorian year it's a chance for a reset and the start of a more sociable era. I've got high hopes for 2021, though I don't think we're going to start the climb back up to the new normality until April, depending on how the Westminster circus handles the vaccine rollout.

The year ended and started off very subdued. New Year's Eve was spent in the flat watching Netflix, drinking whiskey sours and catching up with friends on the phone, and the following day tidying and having a good clear out in the flat. This was pretty different to NYD 2020, when I was on a plane to Vegas to work with the excellent guys at Pixway on the Nissan stand at CES2020.

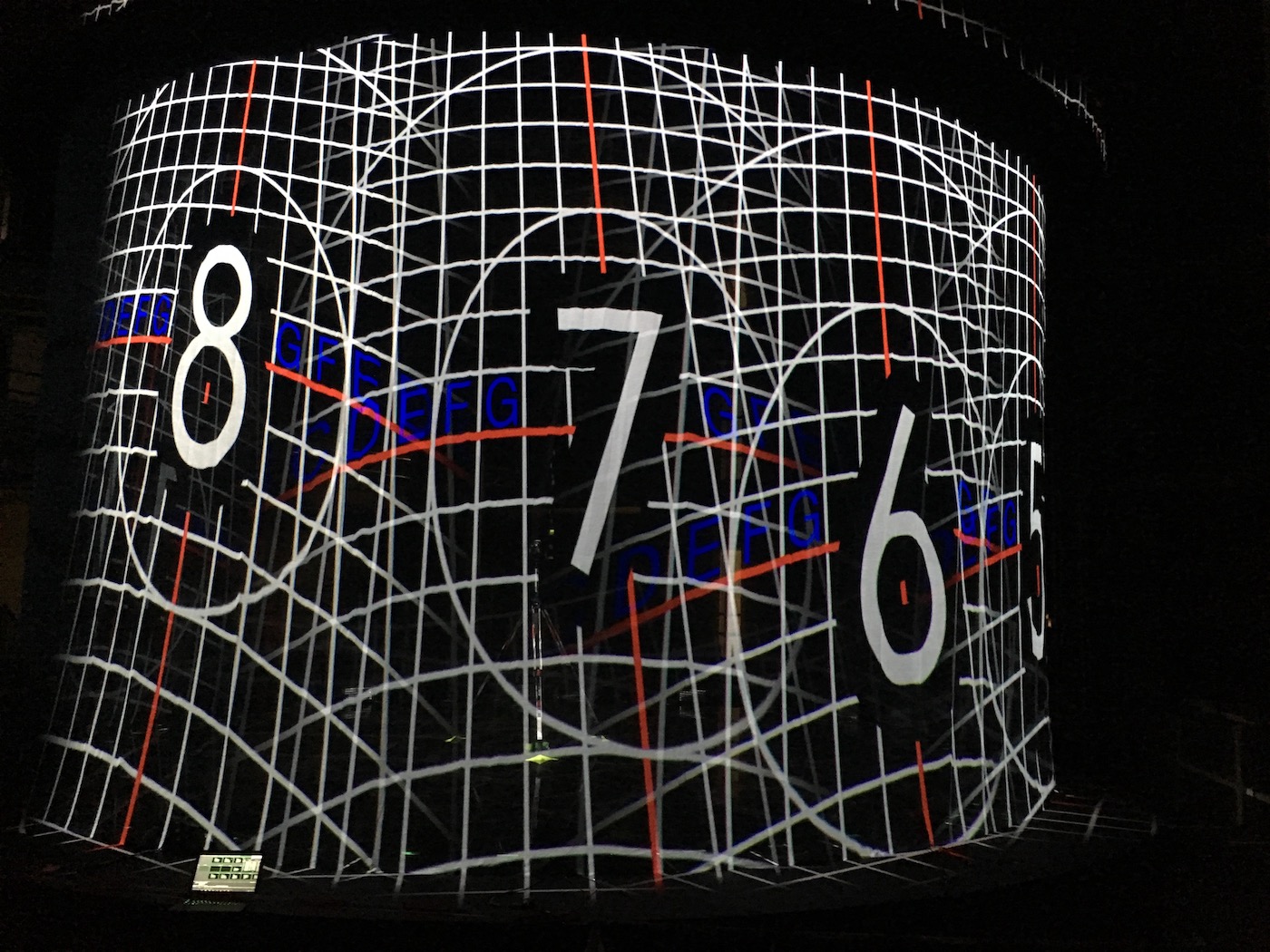

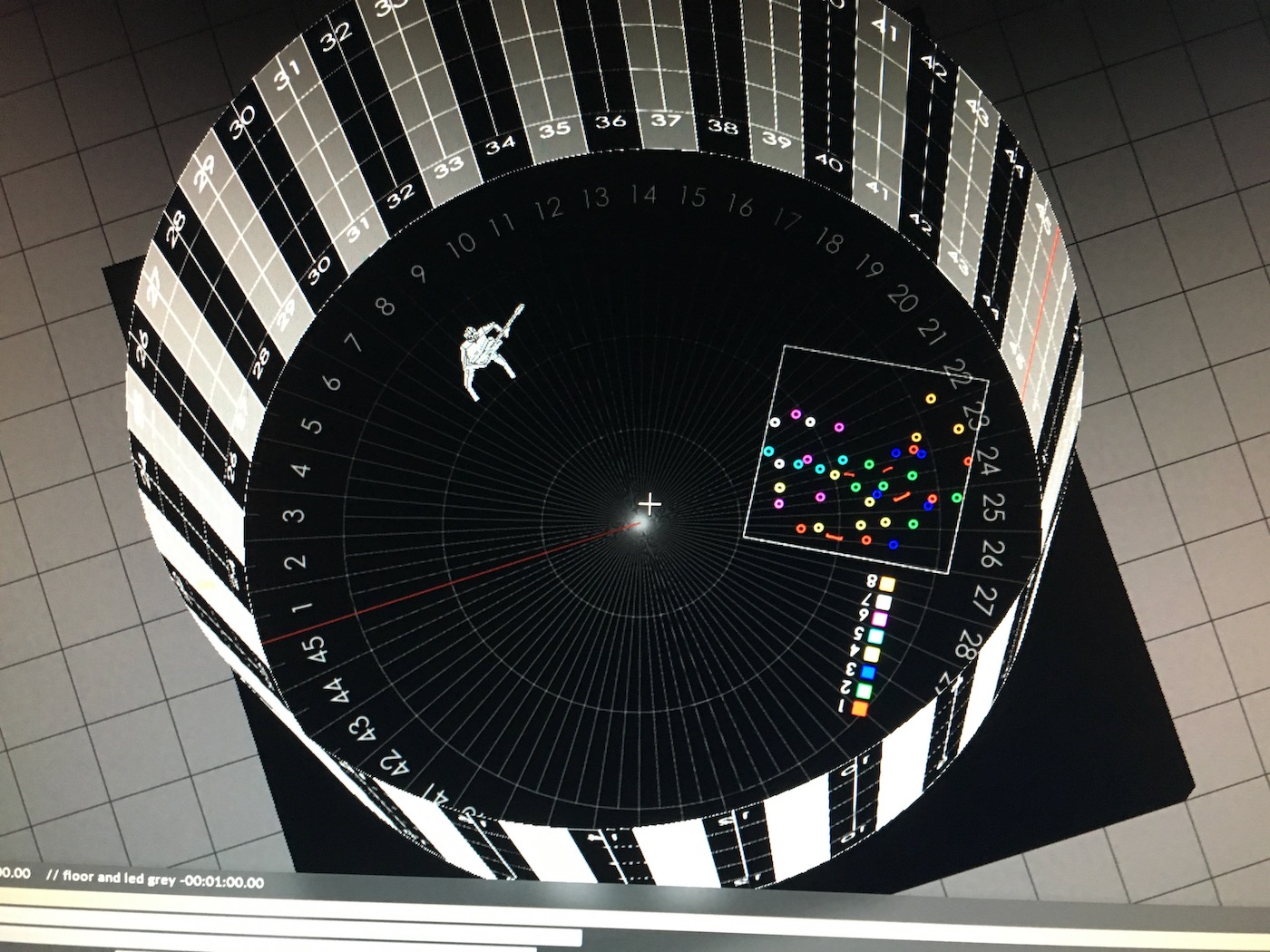

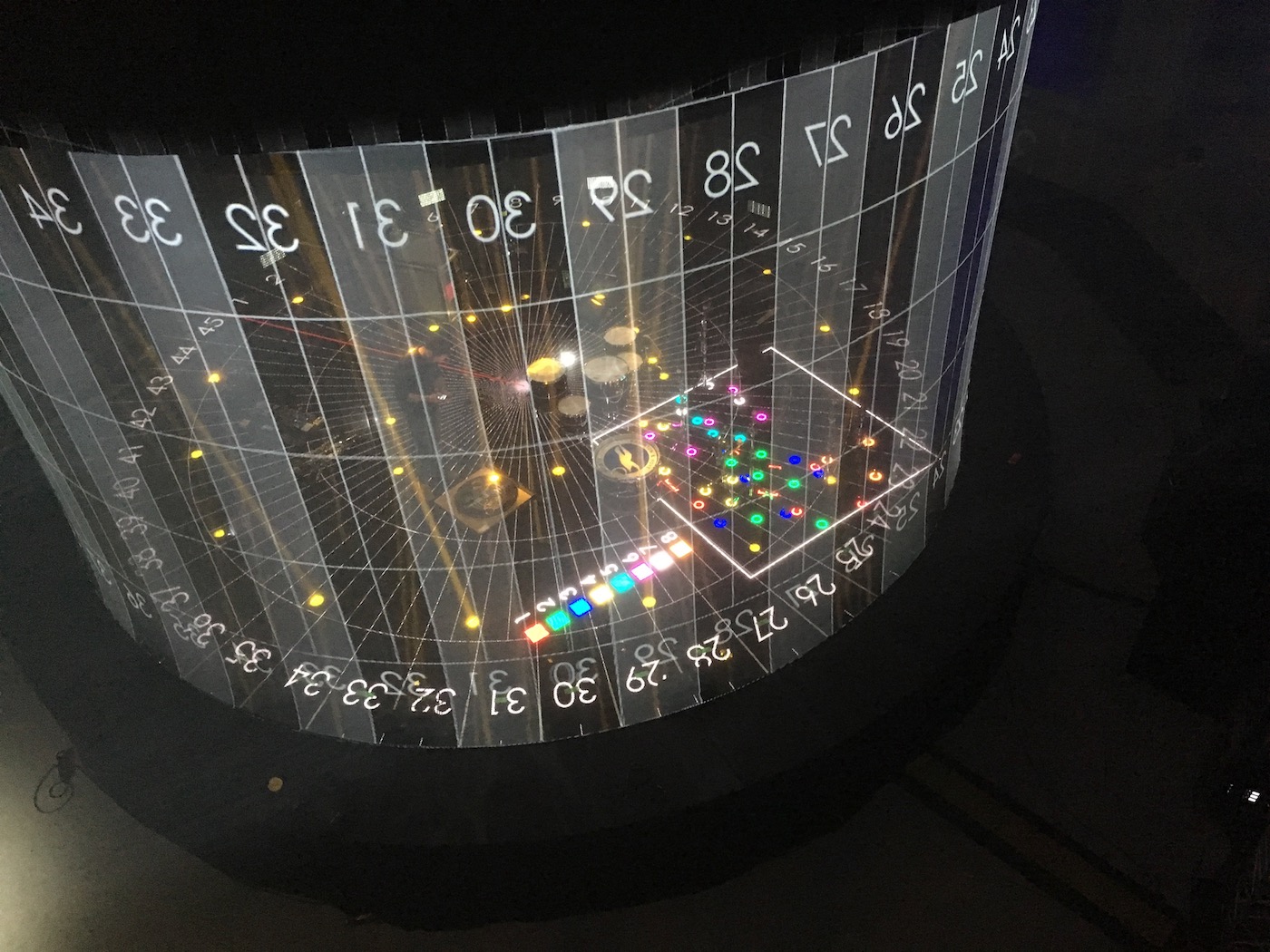

For the Nissan booth at CES2020 we had a 16 projector circular projection surface, 8 bends and 8 overlays, and we were using the disguise Omnical system to assist with projected image alignment. I've used Omnical a reasonable amount since 2018 on various jobs and have a good understanding and experience of what's required to make it work well. The more accurate you can make your geometry in d3, the easier the process will be. For the CES booth we had a CAD model of the stand, but generally on temp structures, and especially a big cylinder like the one we had here, there will be some discrepancies between the build and the CAD. Before starting any projection calibration, Francisco and I spent a good couple of hours surveying the projection surface with several lasers and tape measures, and I reconstructed the CAD model to be as close to the real world as possible. After the survey, the projection surface seen from the top down was more like an oval than a circle. Getting these small things right gives you solid foundations and makes a HUGE difference further down the line.

We had I think 9 shows a day at Nissan over the period of CES and all went well with the client being swimmingly happy! Next to us Manuel was looking after the Audi booth which was beautifully designed. He spent hours programming the d3 timeline so the projection onto the frosted windows of the car responded to the car console stick and button operations, it was a really nice interactive part of the stand.

Outside the convention centre Paul and Delaney were looking after BMW, which was a custom built experience in a structure in one of the parking lots of the convention centre. It was incredibly slick, just as you'd expect from BMW. Ed was over on Adaptive but I didn't manage to get any pictures of his setup.

It was a good few days supporting the shows in Vegas and, as always, so nice to catch up with everyone over Tacos and Beer!!!! <– This place is amazing.

After the shows I stayed on in Vegas for a few days, and one of my best mates Marley was in the States for a few weeks and came to meet me in Vegas for a ROADTRIP!!!!! We hired a Jaguar F-type, and after a chat with my American friend Charles about which part of the Grand Canyon to visit he suggested Horseshoe Bend, so we took his advice, stuck it in the sat-nav and headed north!

It was a fantastic drive, each of us taking turns, roof down, hats and scarves on as it was pretty cold once we left Vegas. I think it was around 16:00 when we got to the parking lot, and then another 20 min hike to get to the iconic site. There were quite a few people there and we spent about half an hour just wandering round taking photos and admiring the landscape. It was so worth doing the long drive to be there and take it all in.

After leaving Horseshoe Bend we stopped off at Glen Canyon dam to sightsee just before last light. From here we headed back towards Vegas with the intention of finding a hotel on the way, but no real plan. We passed a load of motels but decided to carry on to a town called Flagstaff we saw on the map. Upon turning up the street and seeing the sign for the Hotel Monte Vista we knew this was the place we were gonna stay. It's such a beautiful old building, and our little venture round town for food and beers really made me want to go back there sometime.

I wasn't sure how to start the first blog post after so long and it's just kind of evolved into this, which I think is a good start. Stay tuned for next week when I'll be adding another post about current ramblings and possibly a look back at 2020. Stay safe everyone.

Momentum r1.0.0

INTRO

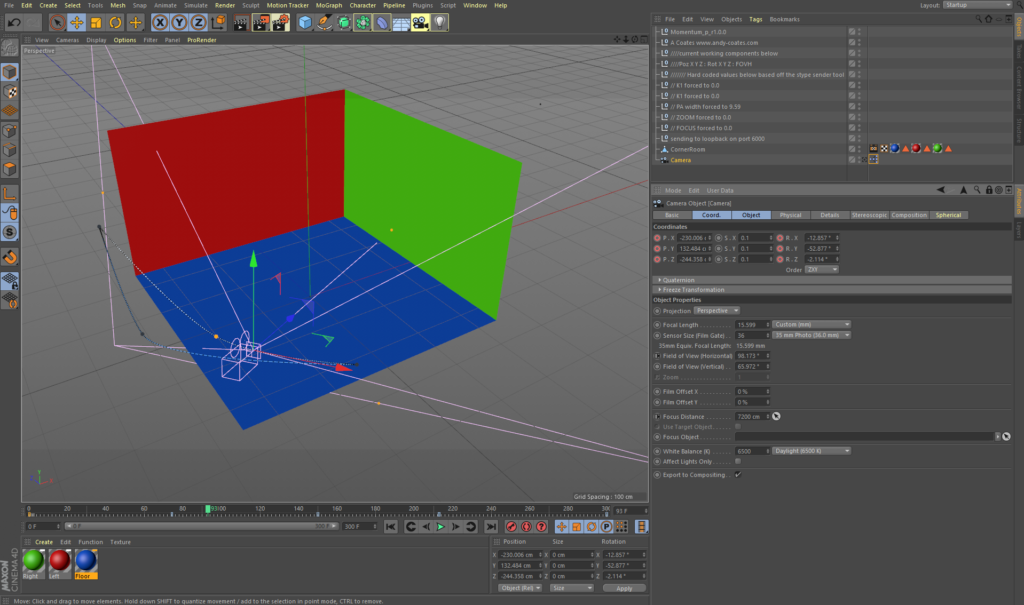

Momentum is a Cinema4D rig to output camera positional data in the StypeHF format over a network. This is aimed at the pre-production workflow when using disguise XR.

BASICS

Momentum is packaged in a simple C4D project and consists of an Xpresso tag and a Python script to output the data in the StypeHF format. Not all of the StypeHF fields are sent by Momentum; we only send the following, with packet number and timecode omitted.

- Pos X, Y, Z

- Rotate X, Y, Z

- FOV X

- Aspect Ratio (This is forced to 1.7777, so this only works in HD)

- Focus (This defaults to zero, Momentum does not take into account zoom data)

- Zoom (This defaults to zero, Momentum does not take into account zoom data)

- K1 (This defaults to zero, will be overridden by disguise)

- K2 (This is forced to zero, will be overridden by disguise)

- PA Width (This defaults to the 9.59 standard)

- Centre X (This defaults to zero, Momentum does not take into account zoom data)

- Centre Y (This defaults to zero, will be overridden by disguise)
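To make the field list easier to reason about, here is a minimal Python sketch of the values as a simple record with the defaults listed above. The class name, field names and `make_fields` helper are my own illustrative assumptions and are not taken from the Momentum script, and this deliberately does not show the StypeHF byte layout.

```python
from dataclasses import dataclass, asdict


@dataclass
class MomentumCameraFields:
    """Per-frame camera values from the list above (field names are hypothetical)."""
    pos_x: float = 0.0
    pos_y: float = 0.0
    pos_z: float = 0.0
    rot_x: float = 0.0
    rot_y: float = 0.0
    rot_z: float = 0.0
    fov_x: float = 0.0
    aspect_ratio: float = 1.7777  # forced value from the list, HD only
    focus: float = 0.0            # defaults to zero
    zoom: float = 0.0             # defaults to zero
    k1: float = 0.0               # overridden by disguise
    k2: float = 0.0               # overridden by disguise
    pa_width: float = 9.59        # default from the list
    centre_x: float = 0.0
    centre_y: float = 0.0         # overridden by disguise


def make_fields(position, rotation, fov_x):
    """Fill in only the values that change per frame; the rest keep their defaults."""
    px, py, pz = position
    rx, ry, rz = rotation
    return MomentumCameraFields(px, py, pz, rx, ry, rz, fov_x)


fields = make_fields((0.0, 1.7, -5.0), (0.0, 10.0, 0.0), 54.0)
print(asdict(fields))
```

Only position, rotation and FOV X change from frame to frame here; everything else stays at the fixed values from the list.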

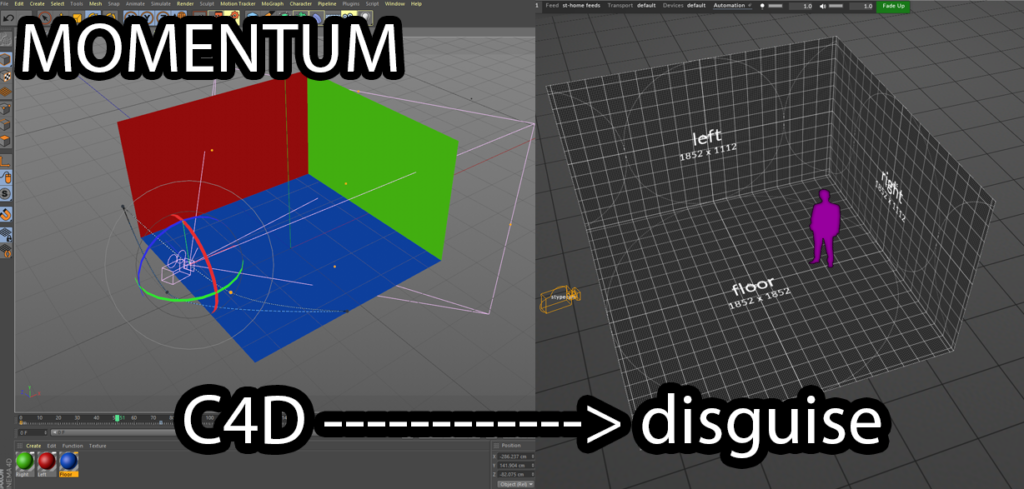

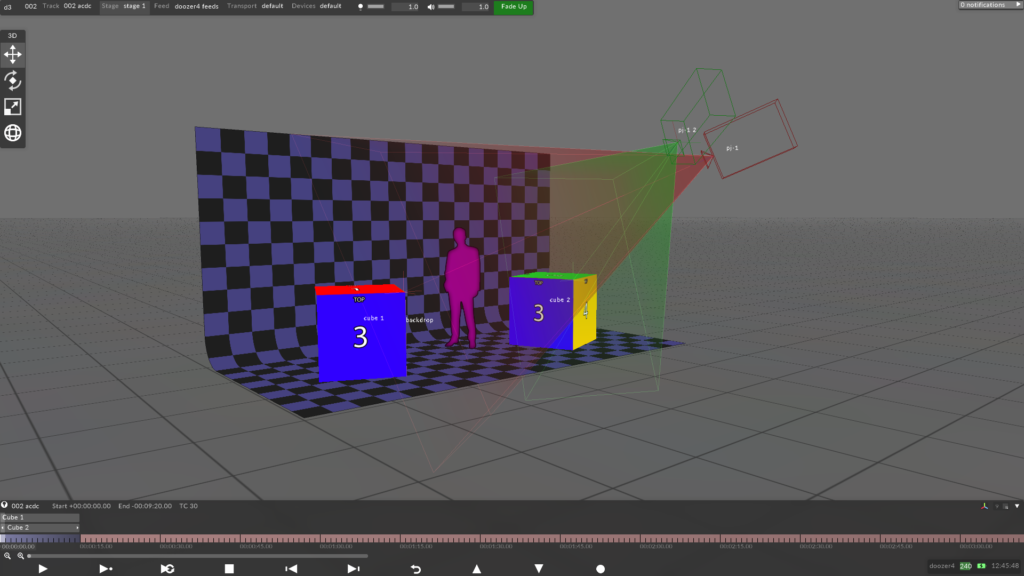

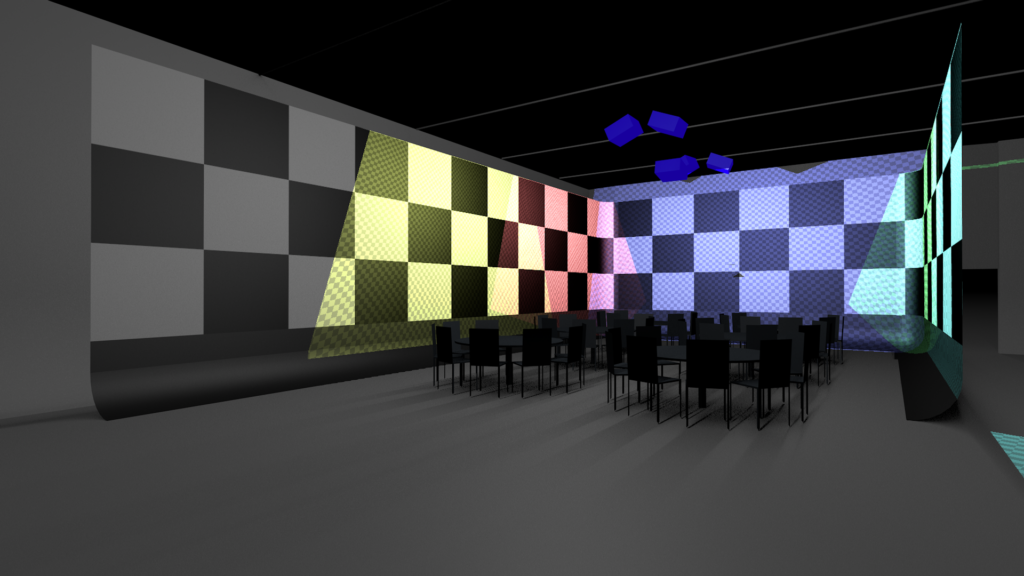

MOMENTUM First glance

The above screenshot shows what you will see when opening Momentum: a basic scene with a stage and some camera positions keyframed in the timeline. After hitting play the tracking data will be transmitted and any changes updated in real time. Upon pressing stop the data will stop being transmitted.

One packet is transmitted per frame, so to adjust the packet rate please change the frame rate of your C4D project. Note that this is not explicitly locked to the frame rate, so heavy scenes will experience a drop in frame rate, but low poly scenes on a good computer will be smooth.
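As a quick sanity check on the packet rate, this small sketch works out the ideal gap between packets for a given project frame rate. The function name is hypothetical, and it assumes playback actually keeps up with the project frame rate, which heavy scenes may not.

```python
def packet_interval_ms(project_fps: float) -> float:
    """Ideal time between packets in milliseconds, at one packet per frame."""
    if project_fps <= 0:
        raise ValueError("frame rate must be positive")
    return 1000.0 / project_fps


for fps in (25, 30, 50, 60):
    print(f"{fps} fps -> {packet_interval_ms(fps):.2f} ms between packets")
```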

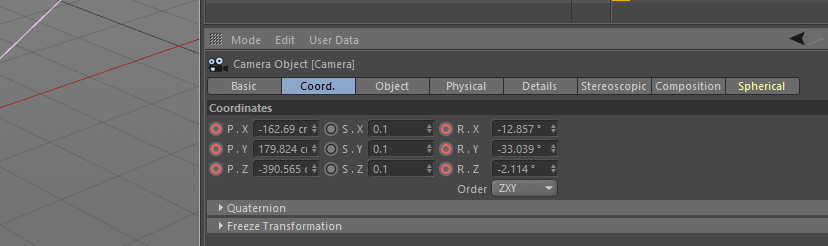

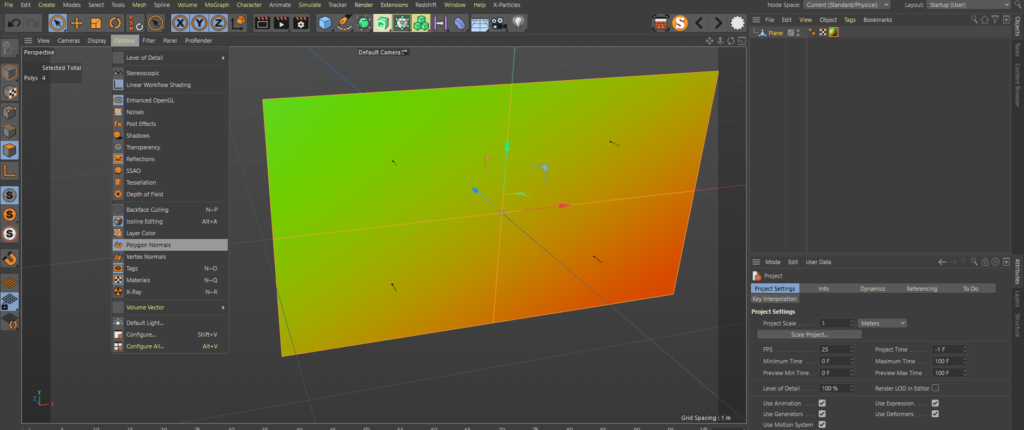

AXIS Rotation

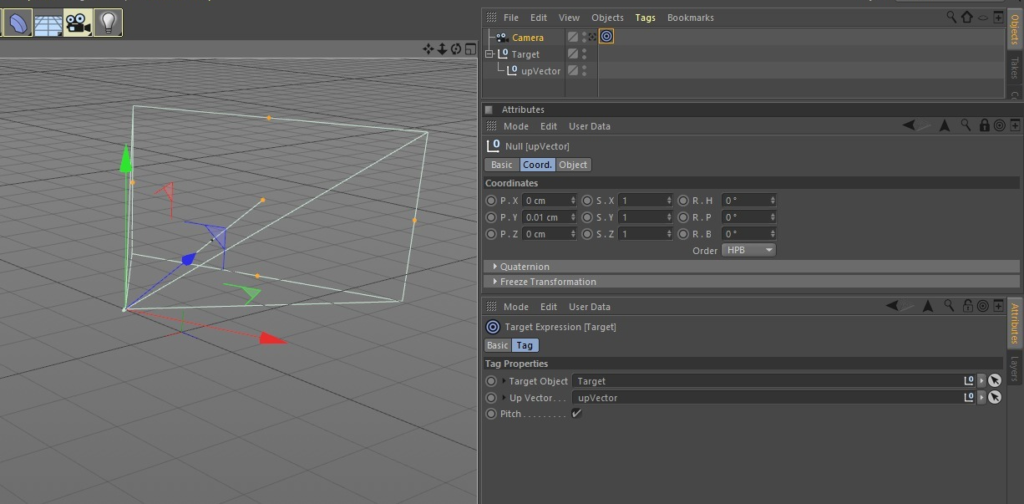

This is tested to be compatible up to disguise release 17.3 (pending) and the XR Beta branch. The axis rotation order must be set to ZXY as shown in the screenshot below for disguise to pick up the data correctly and handle roll correctly.

Target Tag

Because of this axis order the Target Tag in Cinema will behave strangely. I'm working on a fix for this as of 12/06/2020.

Solution found for the target tag, see the image and description below (15/06/2020).

To get the target tag to work correctly with the required axis rotation you need to add in an “Up Vector” null. Make the Up Vector null a child of your target null or object, position it 0.01 cm on the Y axis in relation to your target, and add it to the target's Up Vector field.

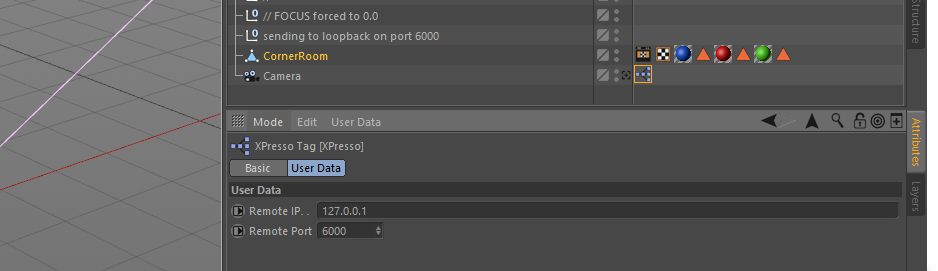

IP & Port Number

Clicking on the Xpresso tag will open up a window with the option to send the positional data to a specific IP address and port number. Please note this isn't tested across large networks, so if you experience any issues please feed back via the email at the end of this post.
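If you want to check that packets can reach a given IP address and port before blaming the rig, a minimal sketch using Python's standard `socket` module looks like this. It assumes the data travels over UDP, the `send_packet` helper is hypothetical, and the payload is just placeholder bytes rather than a real StypeHF packet.

```python
import socket


def send_packet(payload: bytes, ip: str, port: int) -> int:
    """Send one UDP datagram and return the number of bytes sent."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        return sock.sendto(payload, (ip, port))


if __name__ == "__main__":
    # Placeholder payload only; the address and port here are example values.
    sent = send_packet(b"test", "127.0.0.1", 6301)
    print(f"sent {sent} bytes")
```

UDP is fire and forget, so a successful send only tells you the packet left your machine, not that anything was listening at the other end.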

Credits

Thank you so much to the following people for help with coding, beta testing and fault finding of Momentum. Without this teamwork none of this would have happened.

Karl Bromage – Studio One Four

Andreas Culius – nocte designs

Rich Porter – The Hive & YouTube Channel

Scott Millar – Longer Days

Lewis Kyle White – LKW

DOWNLOAD MOMENTUM

Download Momentum from GitHub via the link below. For any comments or issues please get in touch via andy(at)andy-coates(dot)com

https://github.com/andydenniscoates/momentum

Tagged c4d, camera tracking, disguise, stype, unreal engine, virtual production

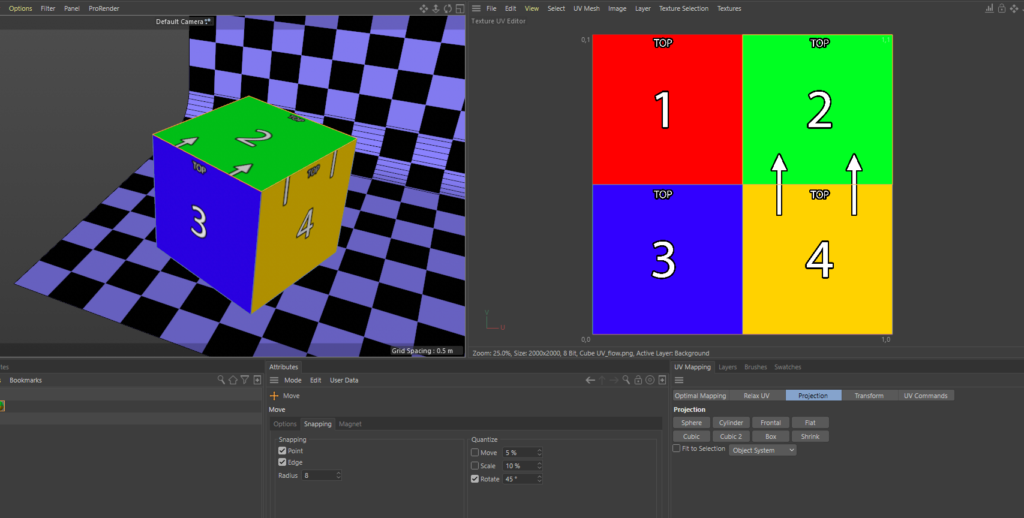

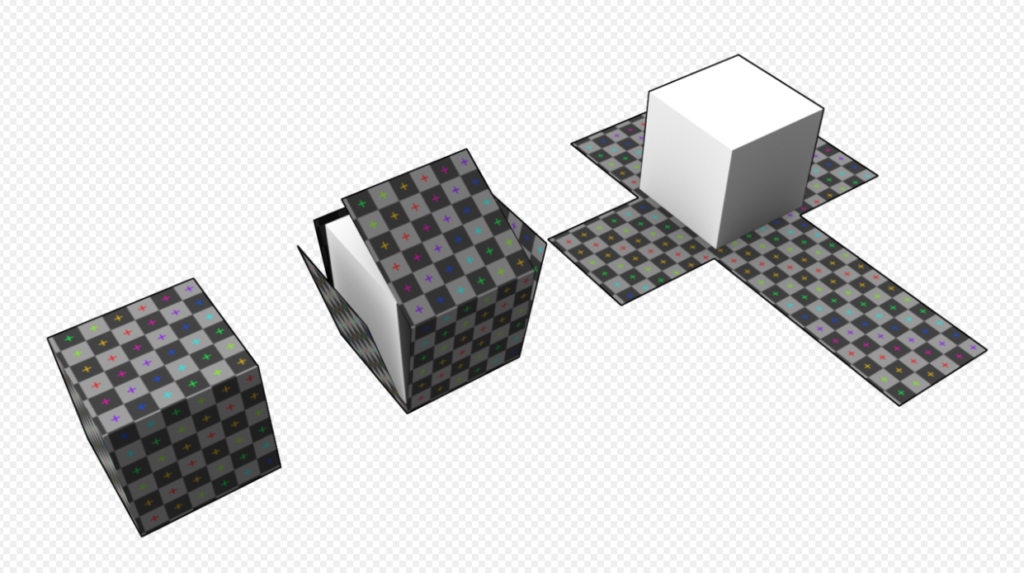

disguise & C4D workflows : 002 Simple cube stage setup

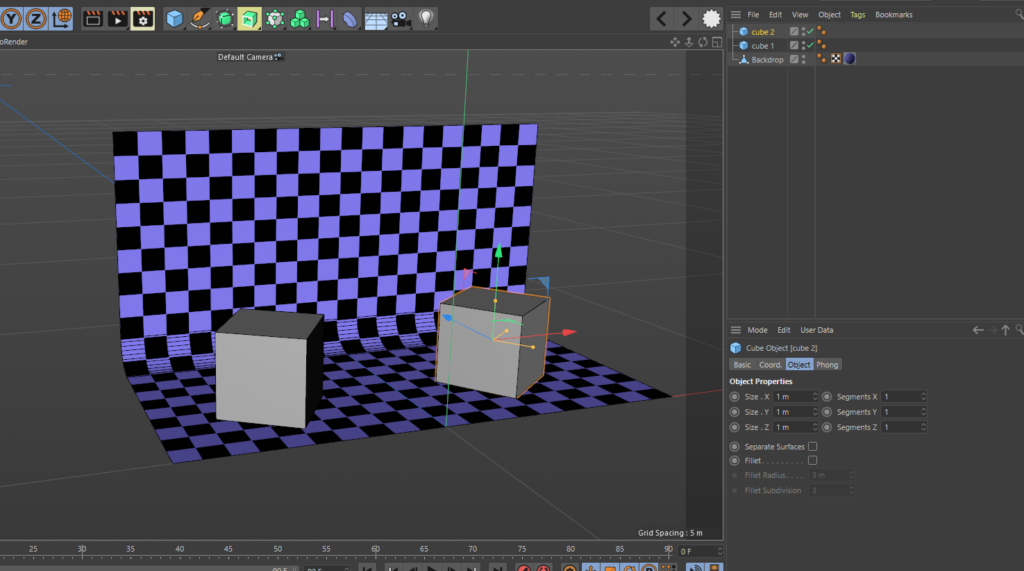

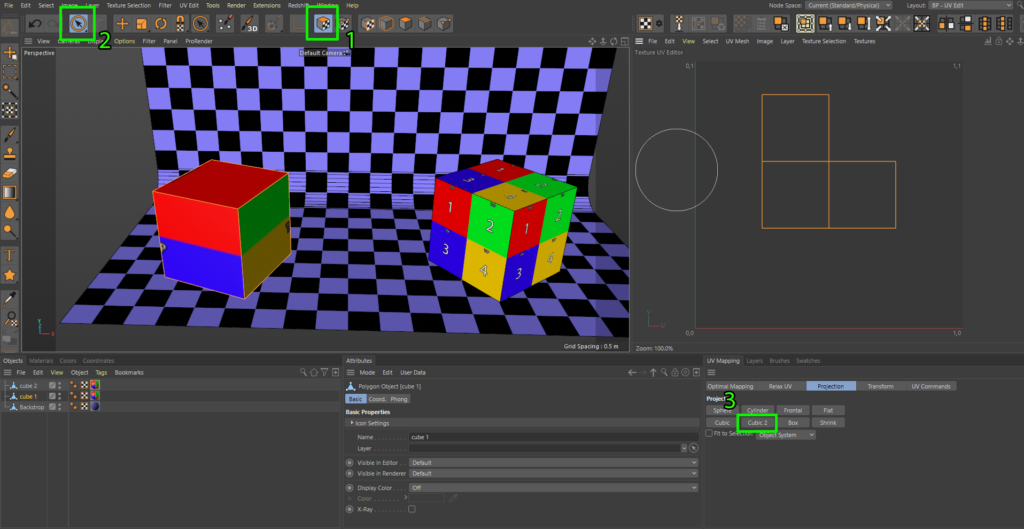

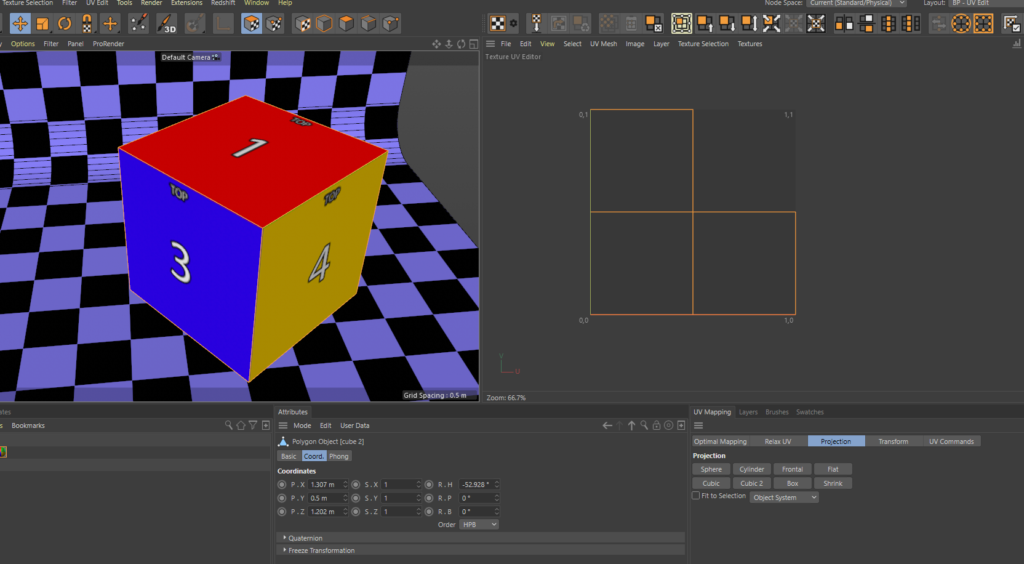

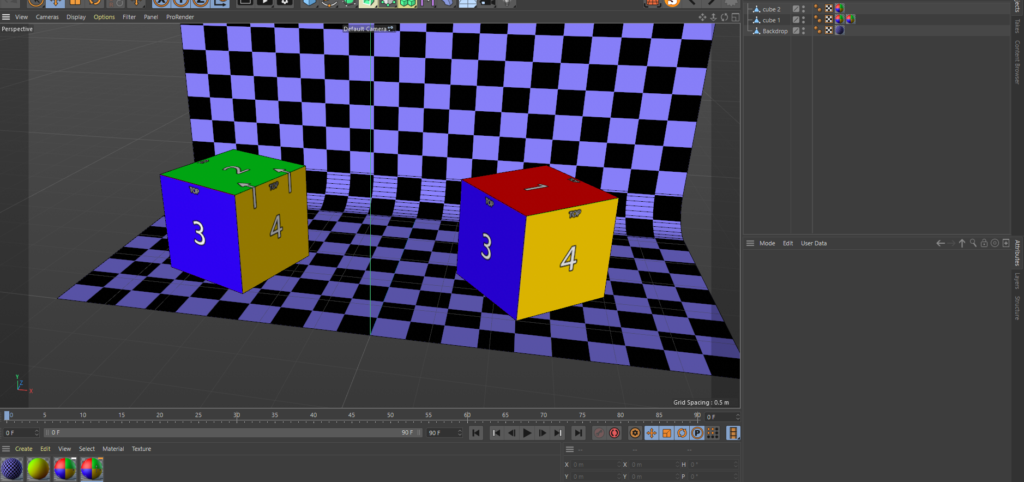

Hello everyone, following on from the previous blog post I'm going to be running through building the above disguise project from scratch and the reasons behind the methods. I'm going to be continuing this series in Cinema4D R21; I found a bug in R22 which breaks my workflow, I've spoken with Maxon and am waiting for them to fix it. The Cinema project and related assets for this post can be downloaded here: 002 C4D-d3_workflows

I'm primarily going to be concentrating on the 3D project build up here and less on the disguise side of things, as there are some excellent training resources out there already. I highly recommend you check out my good friend and colleague Rich Porter's YouTube channel for disguise tips and tricks.

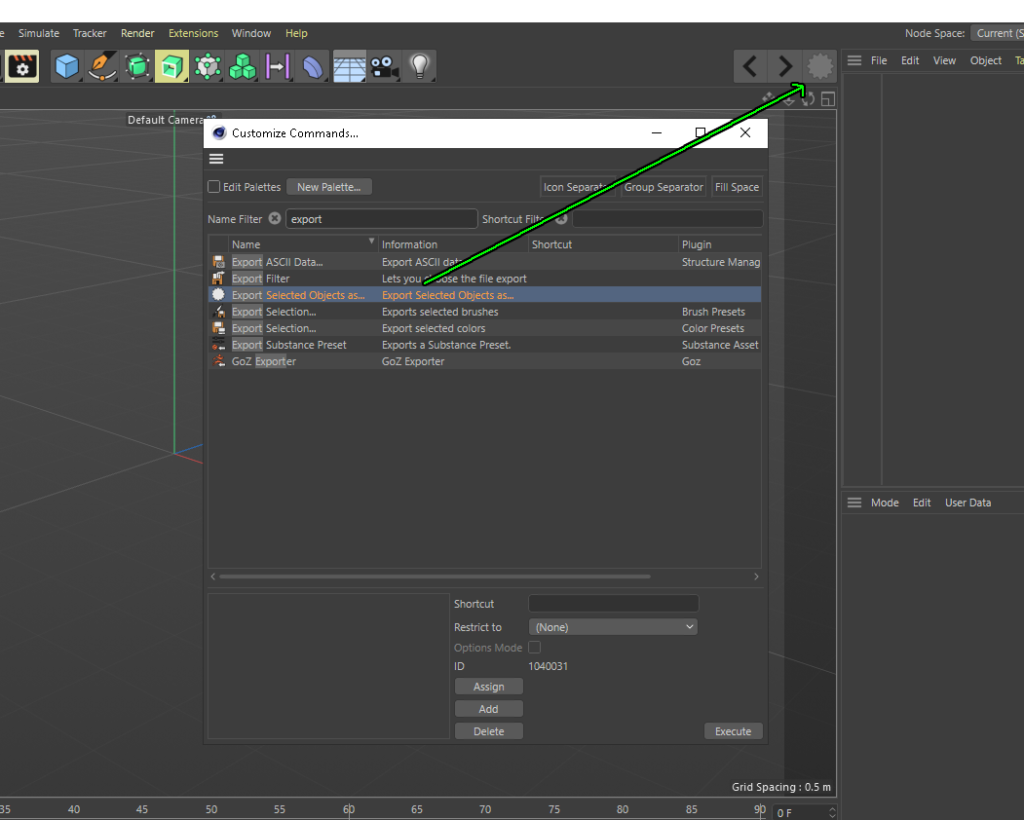

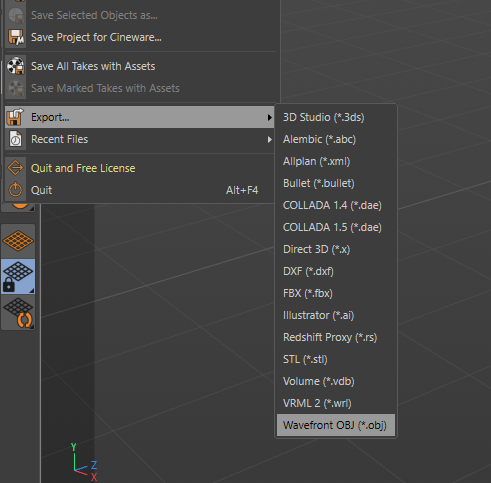

Let's get started by adding a really useful button to your Cinema workspace so we can export objects as separate elements. Using the export option in the file menu only allows us to export everything in the scene as one object, and we want separate elements. Adding this button will allow you to export selected objects individually.

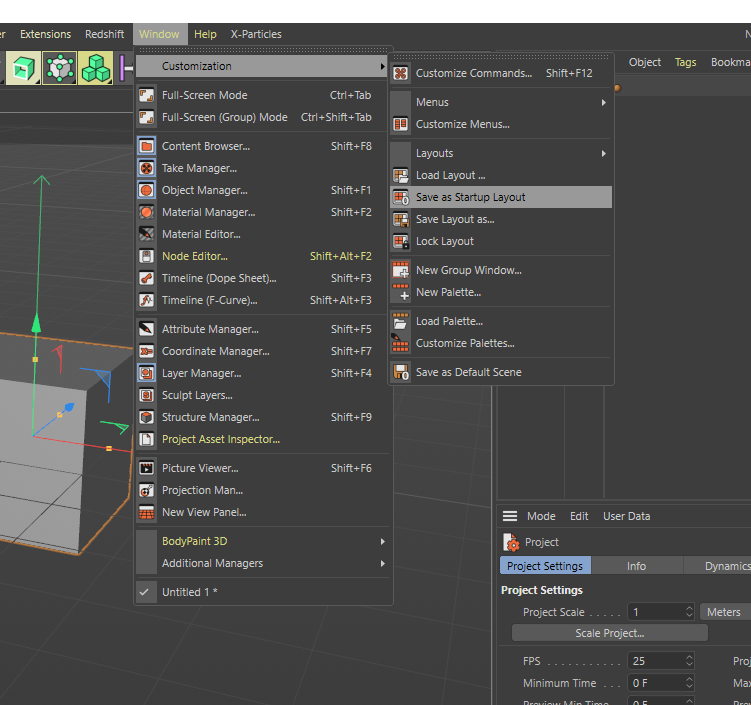

First of all hit Shift + F12 to bring up the customise commands menu, then type “export” in the search bar and this will narrow down a list of export commands. We want Export Selected Object as.. so click on this and drag it onto the tool bar. Once you've done that, save as startup layout as shown before so the button stays there on restart. It's currently greyed out and that's because we don't have an object selected or any objects in our scene.

What the audience can see

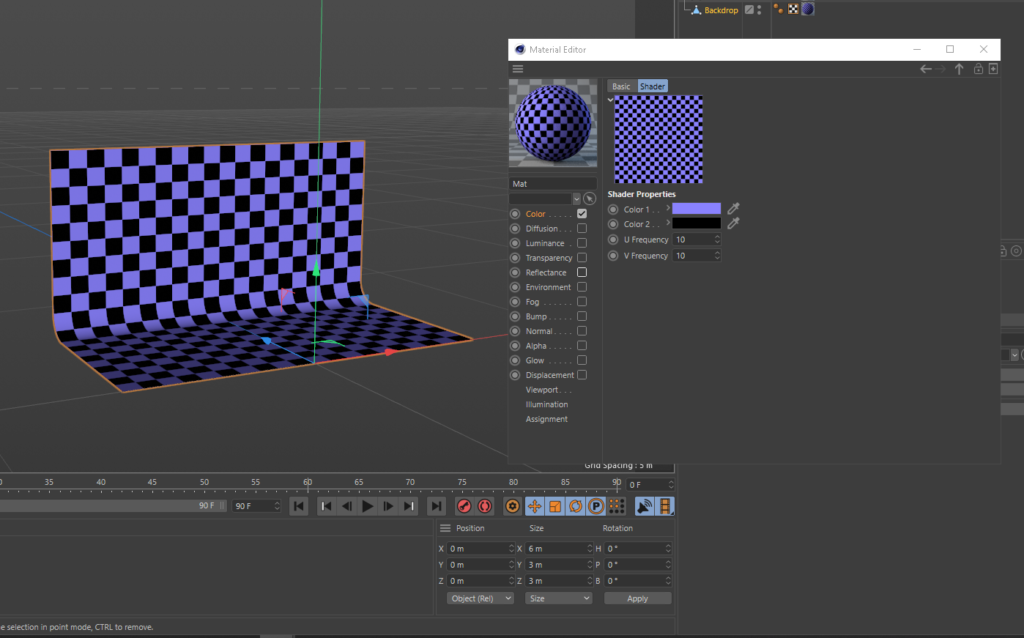

So the scenario is that we have a small stage with 2 cubes onstage which we are projecting onto. The audience are in front of the stage, theatre style, and can see the forward facing parts of the cubes and the tops. What the audience can see is always my starting question when speaking with the creative team in the early stages. In this case, in our 3D scene we only need the parts of the object which are going to be seen by the audience, so we can focus on making the best use of the UV space; there's no point in wasting good UV space on a part of the object which isn't going to be projected on. I always like to have the venue stage in my scene as it's a really good reference even if it's not going to be projected onto, and it makes things look nice. Make a stage like below, 6m wide, 3m deep and 3m high. The easiest way to do this is to make a cube to those dimensions and delete all but the bottom and rear faces. I then positioned the downstage centre of my stage at 0,0,0 in my scene. If you want to skip this part use the backdrop.obj from the assets download above.
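For anyone who prefers numbers to screenshots, here is a rough Python sketch of where the corners of that stage end up with the downstage centre at 0,0,0. It assumes +X is stage right as seen by the audience, +Y is up and +Z runs upstage away from the audience; the function name is hypothetical.

```python
def stage_vertices(width=6.0, depth=3.0, height=3.0):
    """Corner positions of the floor and rear wall, downstage centre at the origin."""
    half = width / 2
    floor = [
        (-half, 0.0, 0.0),    # downstage left
        (half, 0.0, 0.0),     # downstage right
        (half, 0.0, depth),   # upstage right
        (-half, 0.0, depth),  # upstage left
    ]
    rear_wall = [
        (-half, 0.0, depth),
        (half, 0.0, depth),
        (half, height, depth),
        (-half, height, depth),
    ]
    return floor, rear_wall


floor, rear_wall = stage_vertices()
print(floor)
print(rear_wall)
```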

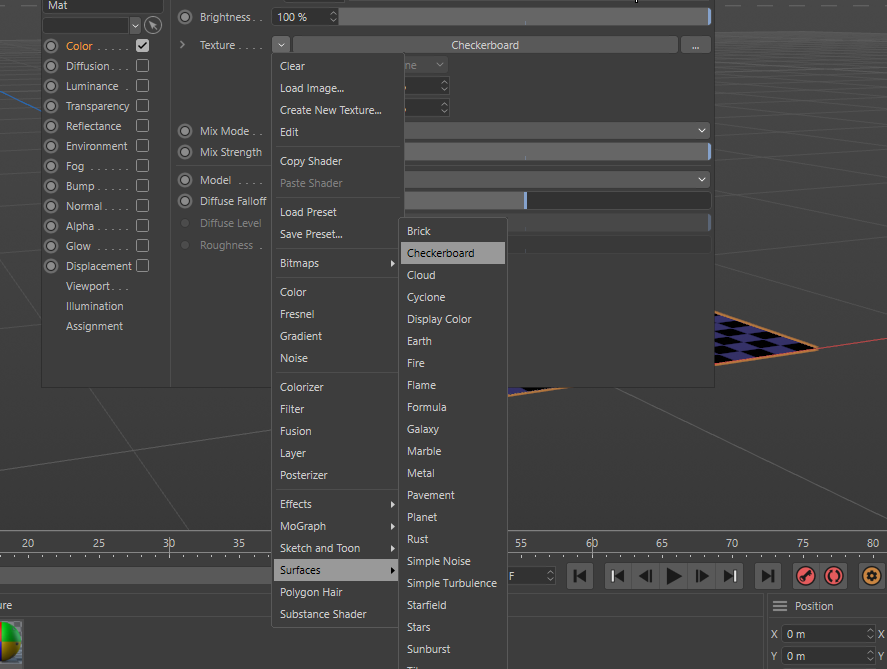

I then created a new material and selected the checker board from the surfaces menu in the materials options. Double click on the thumbnail to bring up another window and set the colours and amount of squares to your taste.

Now we have our stage set up, let's make a couple of cubes. We could make one and duplicate it, but making 2 is good practice and each will have a slightly different associated UV. Create 2 cubes, make them both 1 meter x 1 meter x 1 meter and place them in your scene like below, then make them editable by selecting them and hitting C on the keyboard.

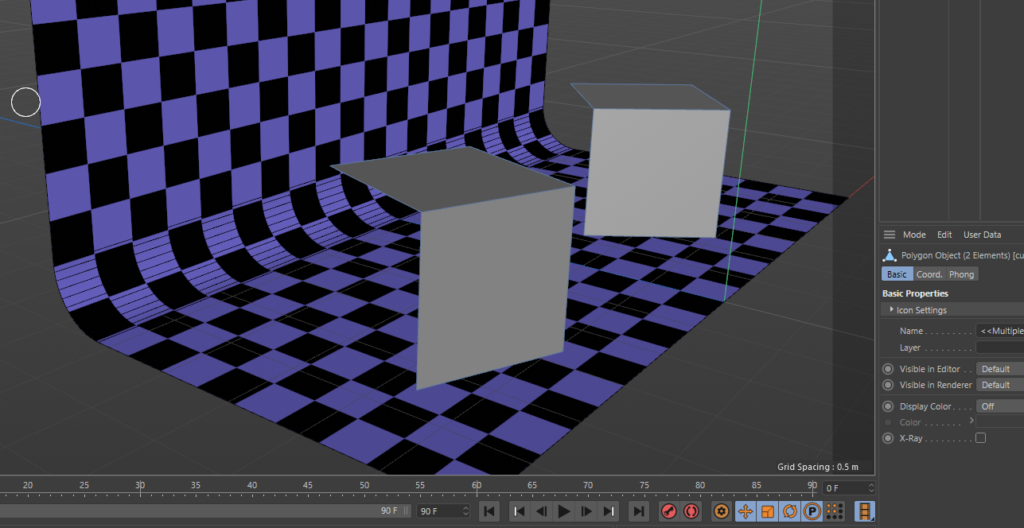

Now all we need here is the front faces of the cubes, only the faces which the audience will see and the projectors are hitting. The quickest way to do this is to make sure you're in polygon selection mode, select the faces you want, then hit the keys U then I, then delete. This will invert the selection and delete the rear faces which we don't need. Your scene should look like the image below.

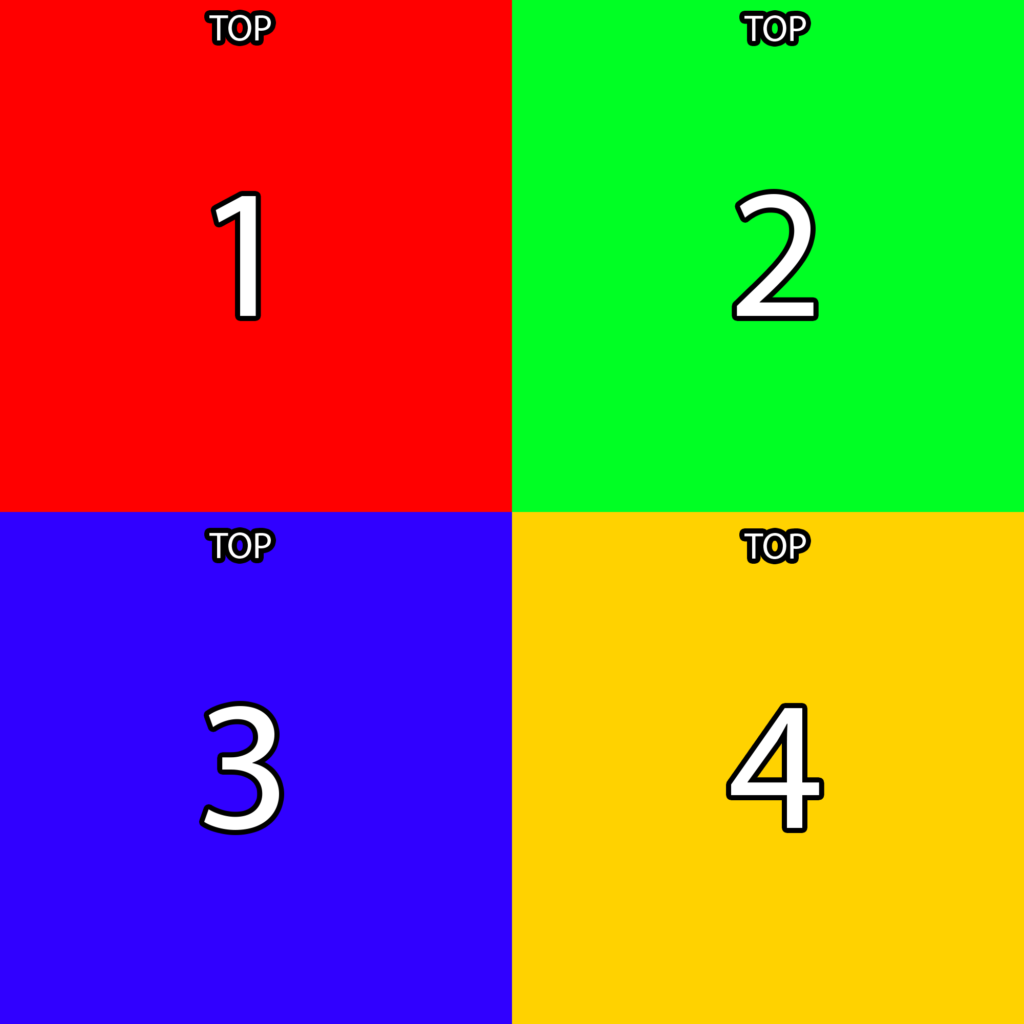

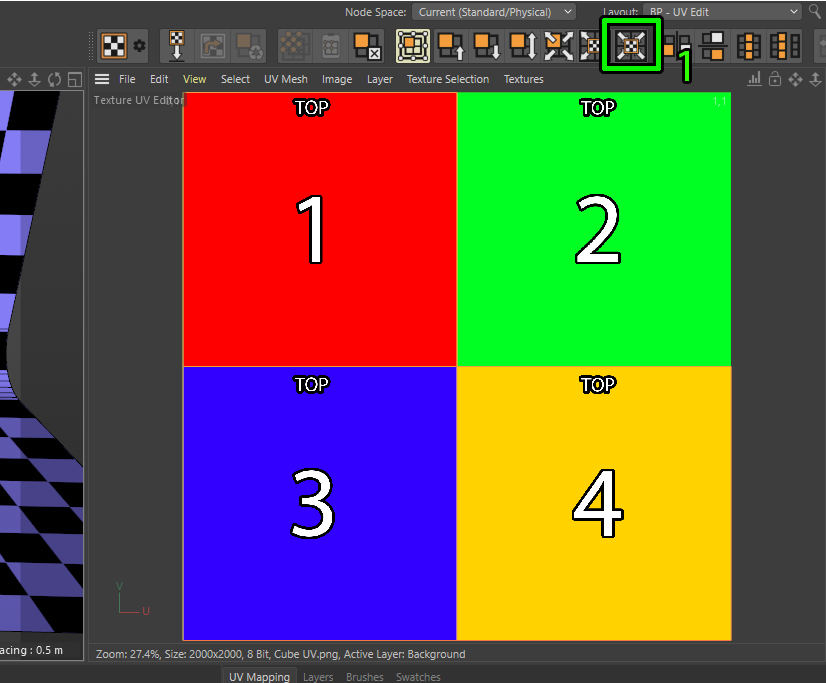

Now we have our two cubes and know the dimensions, we can make life infinitely easier for ourselves by creating a simple guide to unwrap to. We know each face is the same size, so I made a simple 2×2 colour grid with numbers and text to say which way is up.

Next, pull that image onto your cubes in Cinema and jump into UV layout.

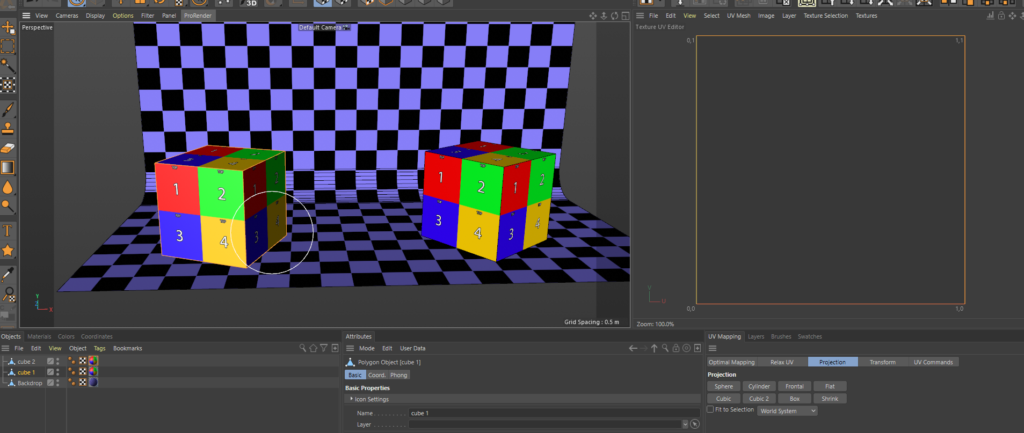

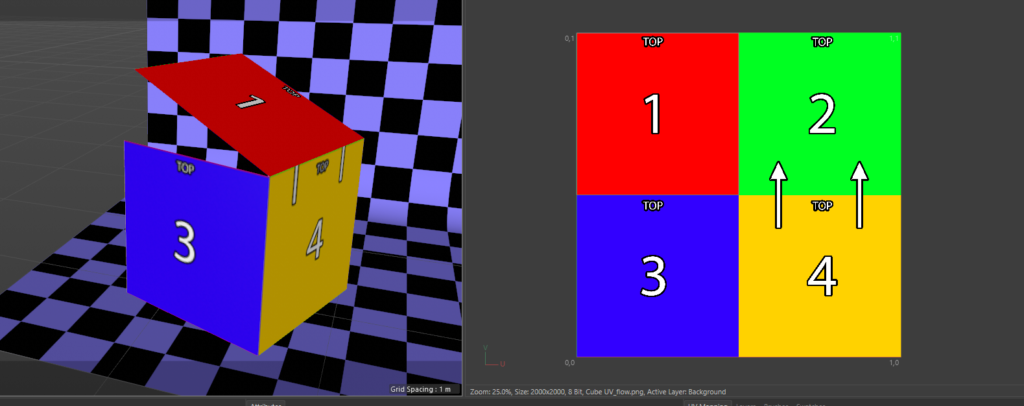

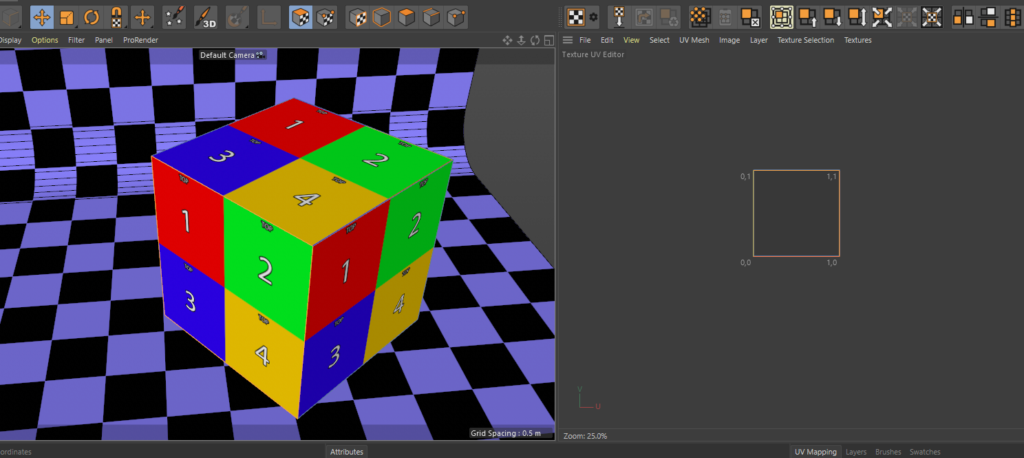

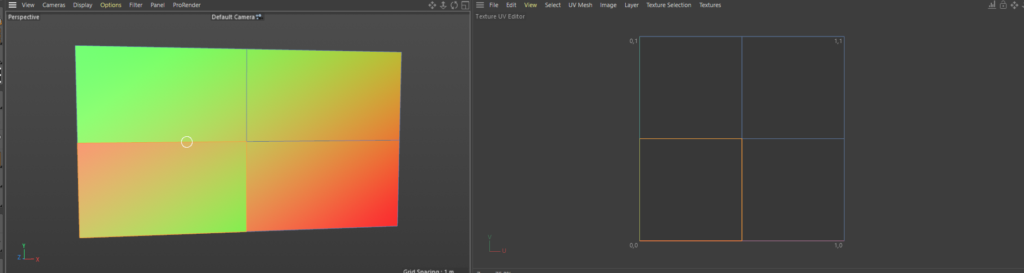

NOTE: I found a bug in R22 with the fit to canvas command, so I'll be showing this in R21 and will update this to show the R22 method once Maxon fix the bug. You should now see the same as below: our helpful lineup grid is replicated on all three faces of each cube. This is the default UV map when you create a cube in Cinema. We're gonna change this so each face only sees one of the colour quadrants.

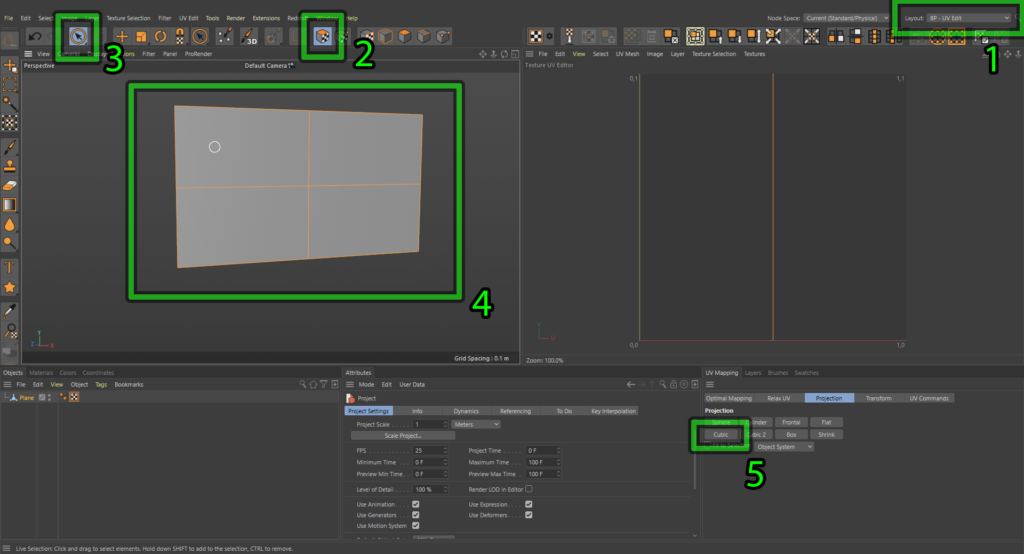

R21 UV instructions

Using the screenshot below as a guide, make sure you're in UV polygon selection mode (1), then choose the selection tool (2) and select all the faces on cube 1, then hit the Cubic 2 button (3) and you should see the scene like this. Make sure the drop-down below the Cubic 2 button is set to object system for this to work. This has automatically unwrapped each face of the cube for us, but it's still not right: we want the selection to fill the UV space.

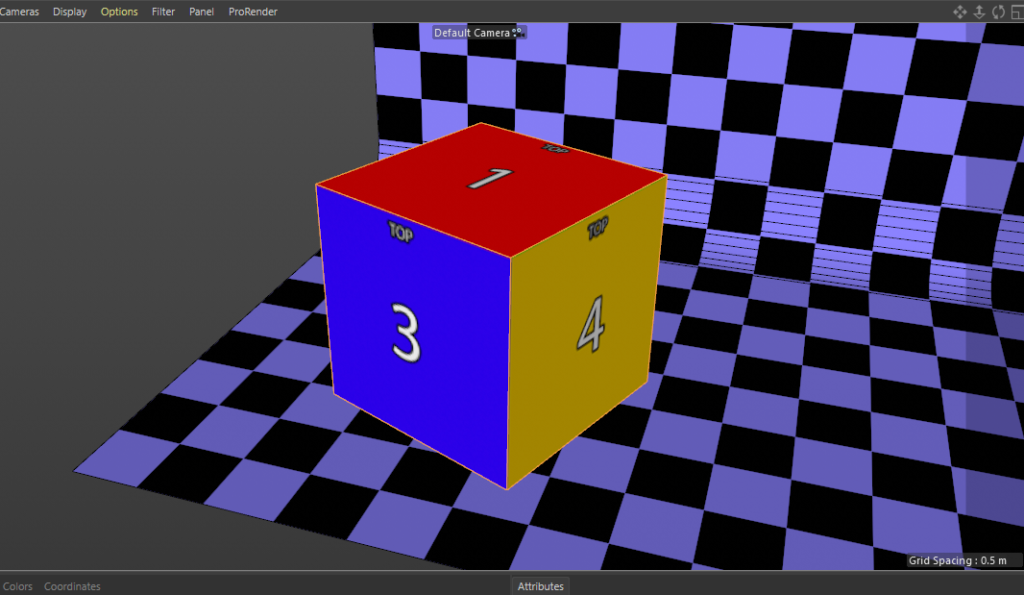

To make things visually easier let's load the Cube UV image we created earlier into the UV space window and then click the fit UV to canvas button (1). This will expand our selection to fill the UV space.

This will also be reflected in our 3D view, and now we can see the colours and numbers perfectly mapped to each face of the cube.

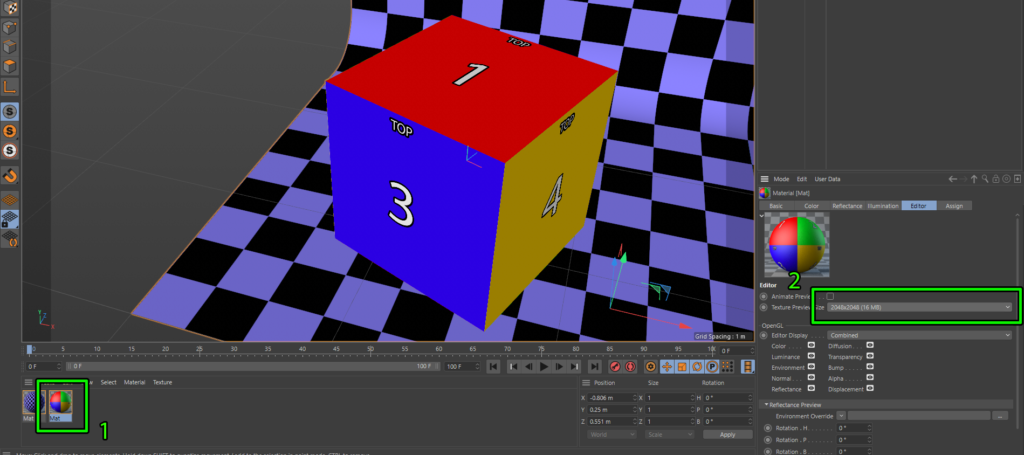

If we jump back into startup layout your texture might look like it's displayed at low res on your cube and look really crunchy. To make things look sharper you need to increase the texture preview size. Do this by selecting your texture (1) and then changing the texture preview size to something higher from the drop down as shown below (2).

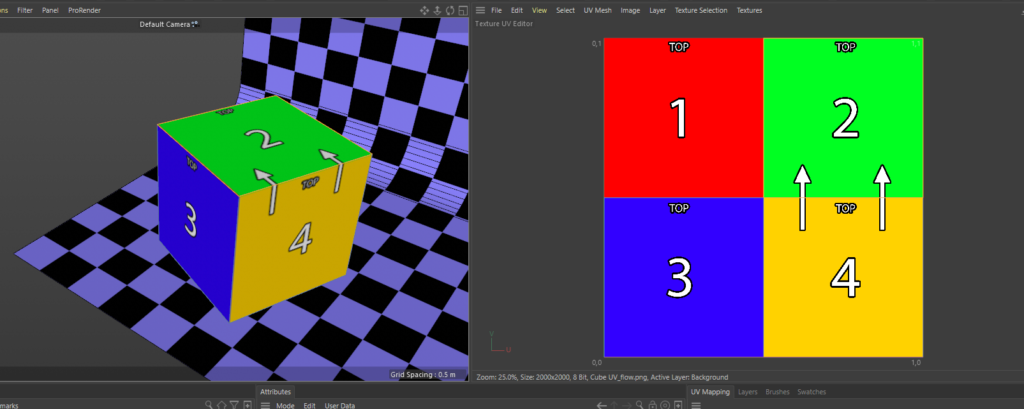

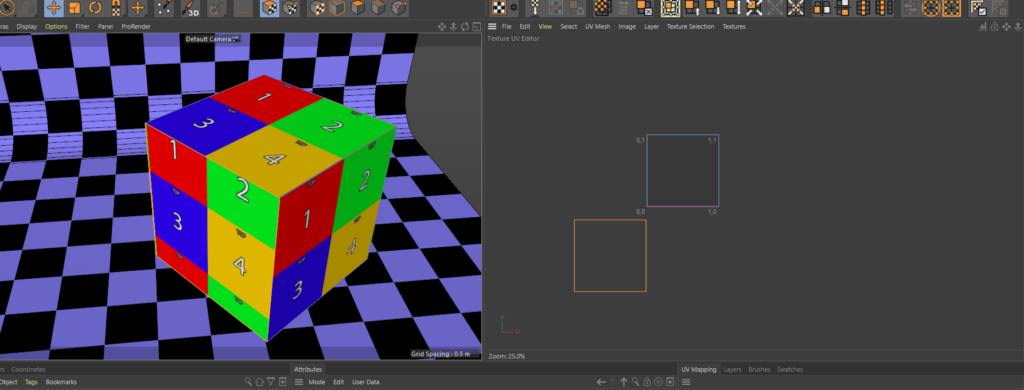

So all of that was pretty straightforward, but I would like to change the UV layout. In the 2D workflow, when applying content to this cube I want it to flow up the left side and over the top, essentially where the crease would be if we unfolded it. I modified the UV content template to show what I would like.

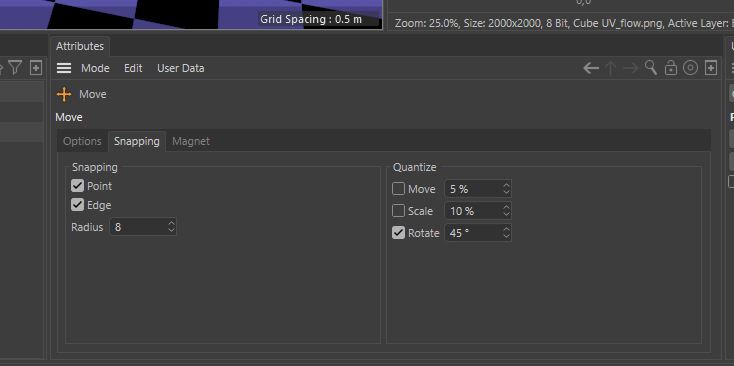

If we jump back into UV layout, it looks like we can just move that UV polygon to the right. First of all hit the E key to use the move tool, and in the snapping tab bottom centre select Point and Edge for snapping; also select rotate and set it to 45 degrees. This will allow us to move the selection precisely. Now select the top left UV polygon in the UV space and move it right so it snaps to the right quadrant.

Now it hasn't completely done what was expected, we also need to rotate the UV anticlockwise. Because we have snapped the rotate to 45 degree increments we can just use the rotate tool.

Select the rotate tool by hitting the R key, click on the UV we want to rotate and drag left. Do this twice until it is rotated. Now it's exactly how I would like it. The snapping selections are really key here: if snap rotate is not selected and you rotate a UV a few times it can be difficult to get back to perpendicular.

Now let's move onto cube 2 and do this a little differently. Click on Cube 2 and then, if it doesn't happen automatically, clear the UV canvas background as shown below in the textures window. You can easily bring up the textures you've previously loaded from this selection menu.

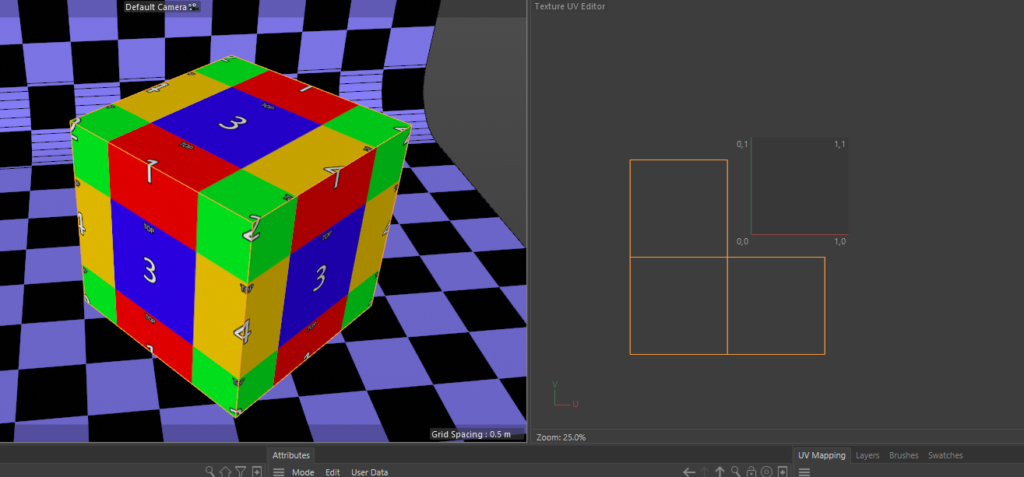

For this cube we're gonna do this quick and dirty. We just want to select the bottom left face but we can't just grab that in the UV window as they are all stacked on top of each other. Instead of clicking in the UV window, providing your UV polygon selection is turned on and you have the move tool selected, you can just click that face in the 3D view, then go over to the UV space, scroll to zoom out and drag it around. You can see how it repeats the UV when outside of the UV space.

Now drag all the squares and arrange them like this. On this cube I want the content flow, or hinge, to be between the 2 left faces. We're working a little blind here but can see with our previously created texture that everything is rotated the right way.

To get this looking more sensible hit Ctrl + A to select all the UVs and then click on the Fit UV to canvas button like we did earlier. This will pull all the UVs into the normalised UV space and now the cube texture will look correct.

There we have it. There are quite a few little steps and selections to remember here, but hopefully this will help anyone new to UV mapping in Cinema wishing to build disguise projects.
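To show roughly what pulling UVs back into the normalised space means, here is a small Python sketch that rescales a set of UV points so their bounding box fills the 0 to 1 square. This is my own illustration of the idea, not Maxon's implementation; the function name is hypothetical, and this version stretches each axis independently, which may differ from what Cinema's button does.

```python
def fit_uv_to_canvas(uvs):
    """Rescale UV points so their bounding box fills the 0..1 UV square."""
    us = [u for u, _ in uvs]
    vs = [v for _, v in uvs]
    min_u, min_v = min(us), min(vs)
    span_u = (max(us) - min_u) or 1.0  # avoid dividing by zero on a flat set
    span_v = (max(vs) - min_v) or 1.0
    return [((u - min_u) / span_u, (v - min_v) / span_v) for u, v in uvs]


# Four corners dragged well outside the 0..1 space
scattered = [(-1.0, 0.0), (1.0, 0.0), (1.0, 2.0), (-1.0, 2.0)]
print(fit_uv_to_canvas(scattered))
```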

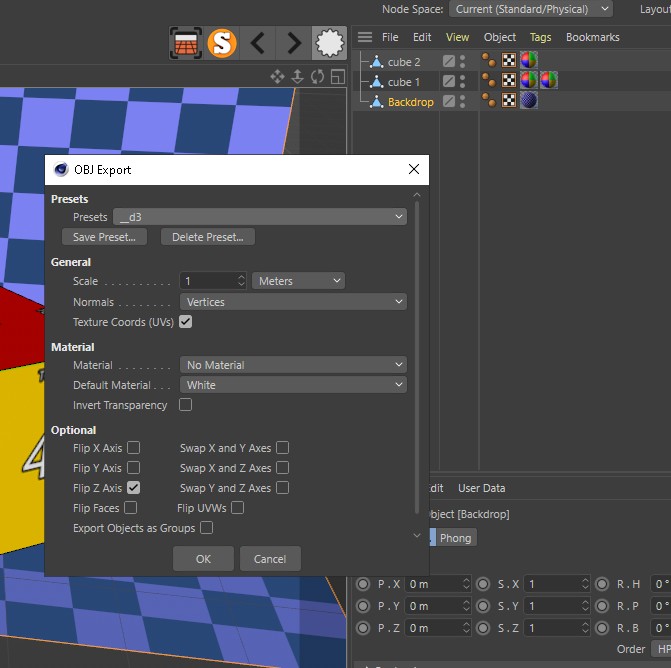

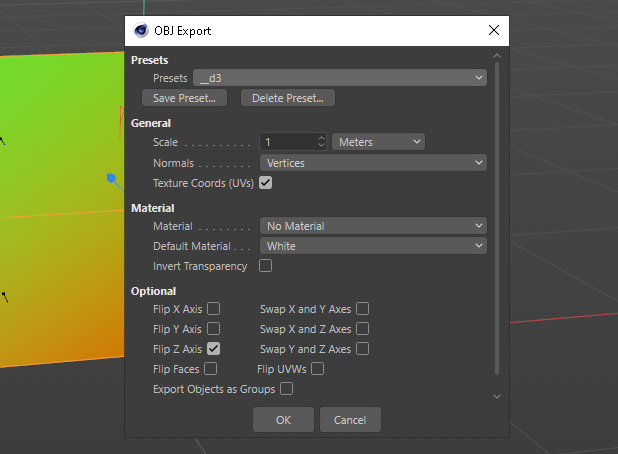

Now all we need to do is export our mesh into our disguise project mesh folder, and now that we have our really handy Export Selected Object As.. button we can export each mesh individually. Let's start by exporting the backdrop: select the Backdrop in the Objects window, then click on the Export Selected Object As.. button and select Wavefront OBJ from the bottom of the list. I made a preset for disguise and these are my settings.

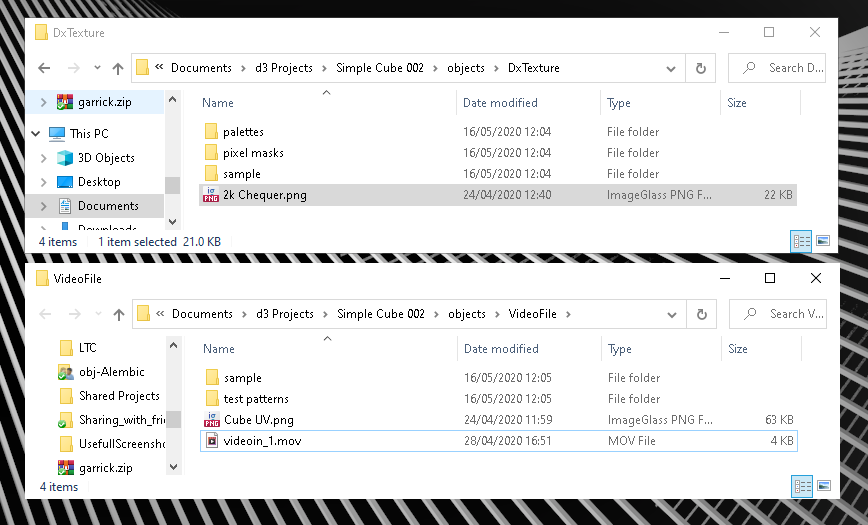

Once I've exported all the mesh I also put my textures into the project. I put the Cube UV texture into the video folder, but because I'm going to use the backdrop as a prop, its texture needs to go into the DxTexture folder so we can apply it to the prop.

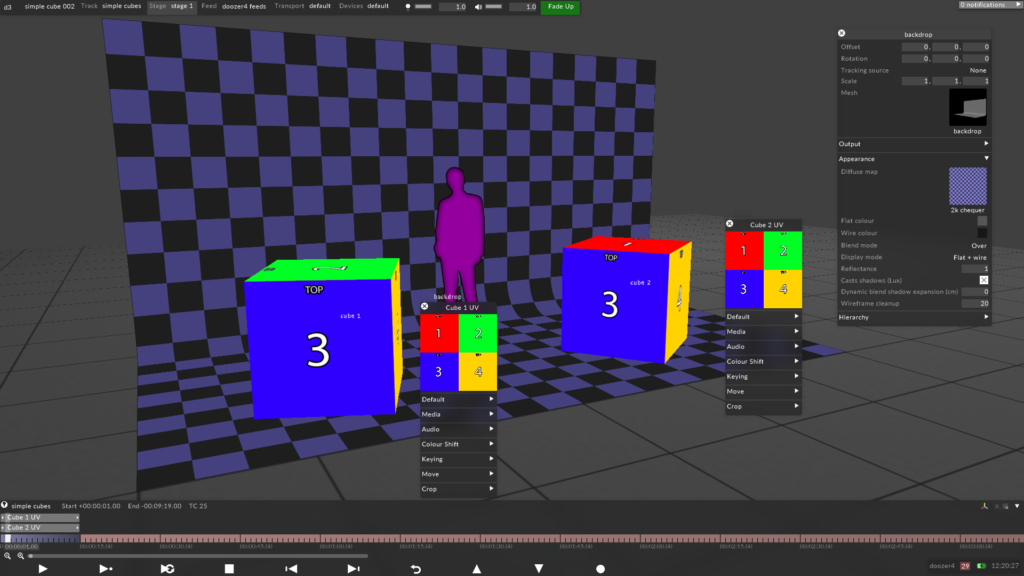

After bringing all the mesh into disguise and direct mapping to the cubes we have a mirror of our Cinema project. From here, if any changes to the mesh are needed I do all the changes in Cinema and re-export that element. This gives me much more control, accuracy and flexibility than moving and scaling in disguise directly.

I hope this has been helpful to anyone reading and if you have any questions please reach out to me. Next post will be about generating content templates and working with the content team.

disguise & C4D workflows : 001 Introduction

Projection study pitch for Hawthorn 2018

Intro

I've been meaning to do this for a long time: passing on my knowledge of my personal workflow for building video projects using the disguise media server and Cinema 4D. For anyone new to the blog, I've been using disguise (formerly known as d3) heavily since 2014 and have built many big video projects over the past 6 years. Prior to this I had been building my own media server applications using vvvv, Quartz Composer and other software since 2005.

Beginning

There's quite a lot of knowledge to pass on here across various techniques, so I'm gonna start at the super basics of UV mapping a basic flat surface in C4D. These things come as default in the disguise software, but this process will serve as a good basis for bigger, more complex things, and I promise you they will get complex.

C4D Setup

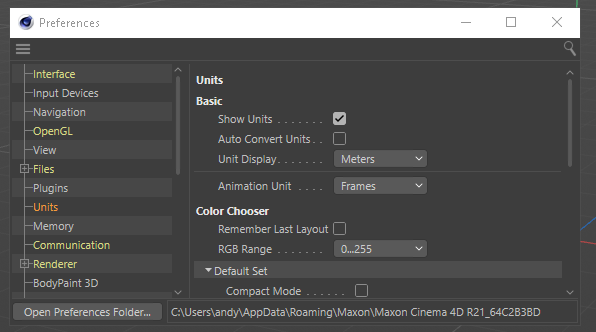

First thing is I set up my blank Cinema project so it shares the same units and scaling as the disguise environment. Hit CTRL+E to bring up the preferences window, select the Units options and set the units to meters so all dimensions are displayed in meters.

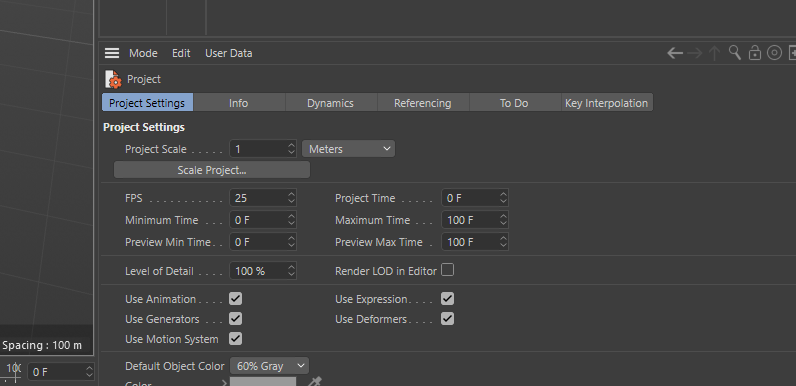

Next is to set the project scale to meters. Hit CTRL+D, which will display the “project settings” in the bottom left window, and set the project scale to 1 = Meters.

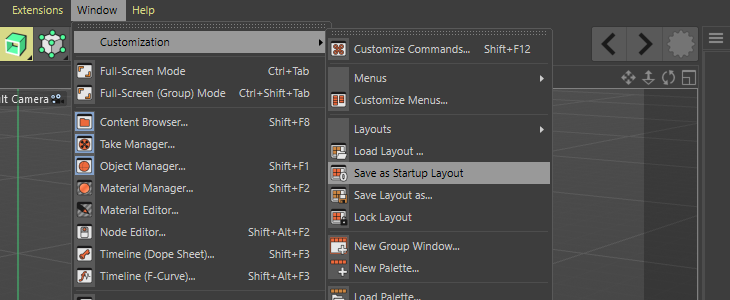

And, finally, to save these as the defaults for each time you open Cinema, click on the Window menu, go along to customisation and then hit save as startup layout. This is really useful if you find yourself using the same setup each time. It includes 3D objects in the scene and textures, so you can build what you use most commonly and save it as the startup layout.

That's it, all done with the setup, now we're ready to UV a flat surface. So far, I've been under the assumption that you know what a UV is. Just in case you don't, here's a very basic description.

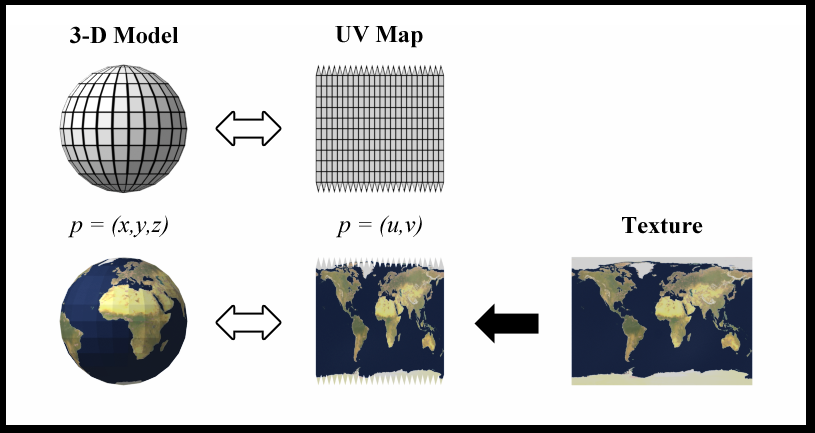

What is a UV

To be able to apply an image texture to a 3D object it needs to have its pixel space defined. A UV is a way of relating a flat 2D image space to a 3D object's surface. In short it's essentially origami in reverse….kind of. Below is an image taken from the Wikipedia article on UV mapping which shows how an earth image gets wrapped onto a 3D sphere and how a cube is unwrapped, respectively.
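If it helps to see the relationship as numbers, here is a tiny Python sketch that turns a UV coordinate into a pixel position on a 1920 x 1080 image. The function name is hypothetical, and which corner counts as V = 0 varies between packages, so the `v_up` flag is just my way of making that assumption explicit.

```python
def uv_to_pixel(u, v, width=1920, height=1080, v_up=True):
    """Map a UV coordinate in 0..1 to the nearest pixel index in an image.

    With v_up=True, V = 0 is treated as the bottom row of the image.
    """
    x = u * (width - 1)
    y = ((1.0 - v) if v_up else v) * (height - 1)
    return round(x), round(y)


print(uv_to_pixel(0.0, 1.0))  # top left pixel when V points up
print(uv_to_pixel(1.0, 0.0))  # bottom right pixel when V points up
```

Every vertex on the mesh carries a UV pair like this, and the renderer fills in the pixels between them.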

Making a basic screen in cinema

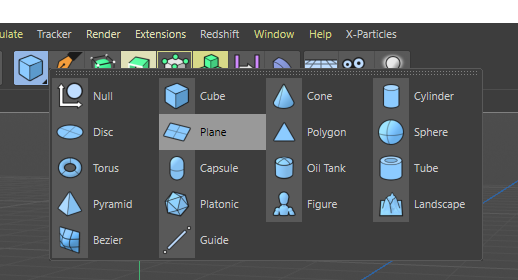

Finally we're here, getting down to the nitty gritty of making something!! Now, by default, when you create a basic object from the library in Cinema it comes with a default UV space/tag. Sometimes we just want to draw a screen in 3D space using the polygon pen on top of a received CAD or something. These 3D objects created with the polygon pen don't have a UV tag associated with them by default, so I'm gonna show you how to add a UV tag.

Let's start super basic by making a plane. Click and HOLD on the blue cube icon and it will open a menu; move the mouse over the plane and release.

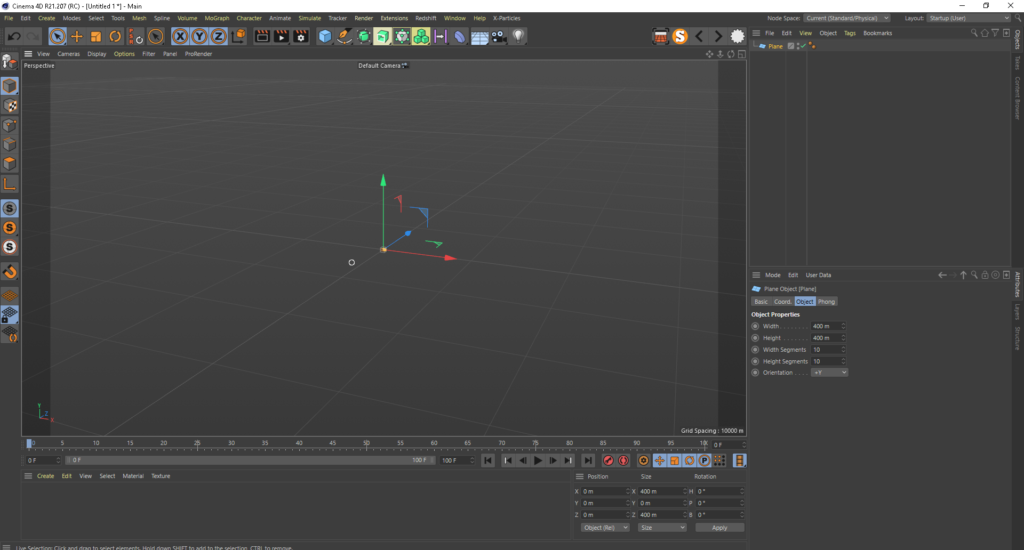

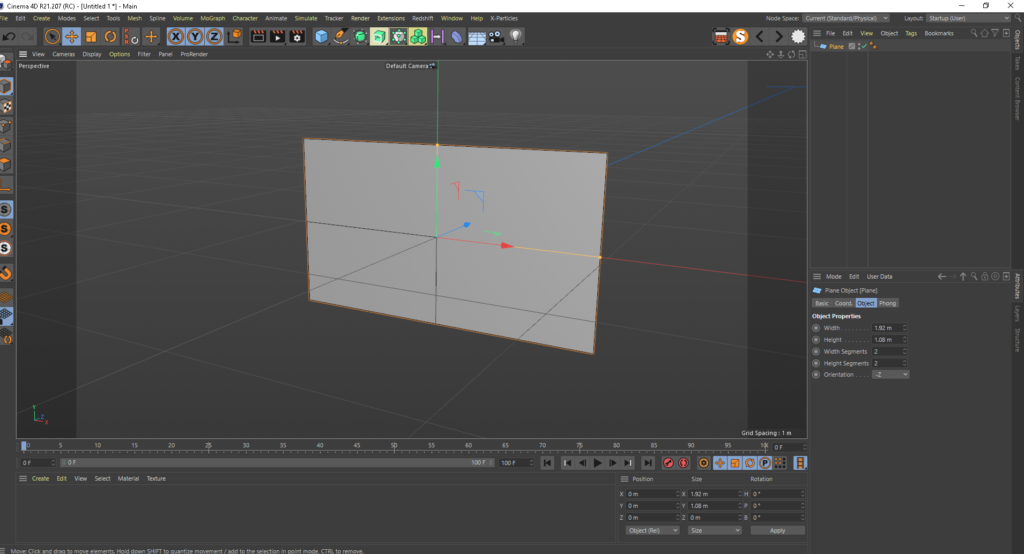

You should now have a glorious flat plane in your 3D scene, and as of C4D_release21 you should see something like this. By default we've created a 400m x 400m flat plane divided into 10 sections by 10 sections. This is all good, but we can't see anything useful in the d3 window and 400m square is far too big for what we're trying to achieve.

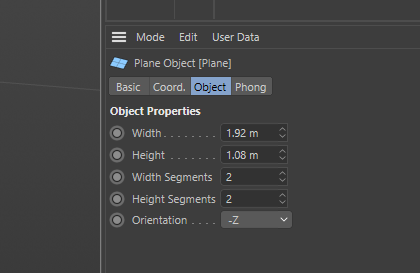

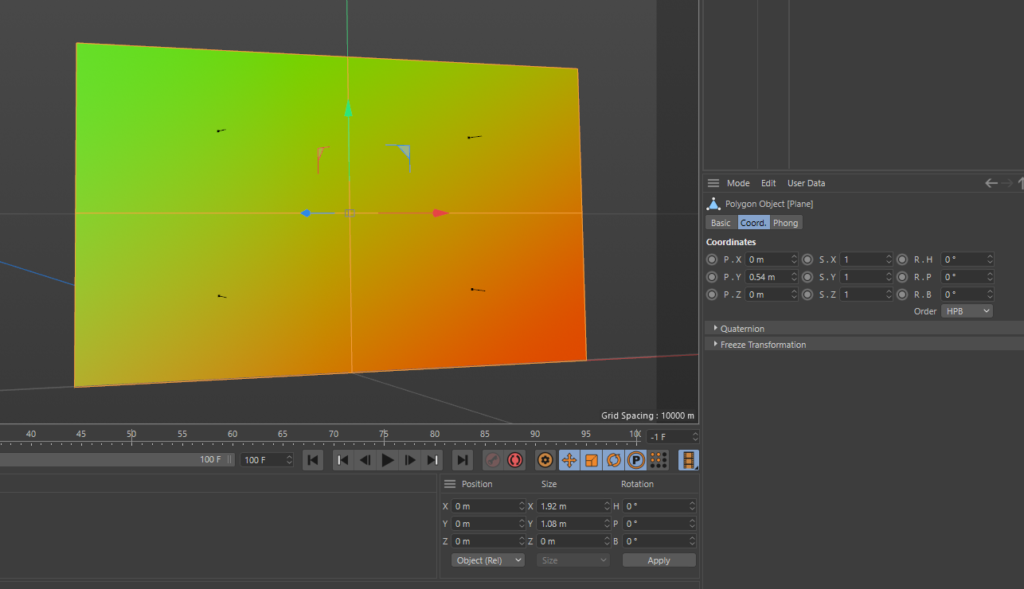

So, let's make this a more sensible size and put it in the right place in our 3D view-port. Make sure the plane is selected in the content browser and set the object to the below settings. We are basically making a 1920 x 1080 pixel size screen (thus the width and height) and setting it to the correct forward orientation, split into 2×2 segments.

The reason for splitting into segments is for further down the line, if you have 2 projectors covering the surface and you need QuickCal points in the centre and at the centre of each edge. I'll cover QuickCal in detail in later posts.
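To see why 2×2 segments give you points in the centre and at the centre of each edge, here is a small Python sketch that generates the vertex grid for a plane along with a UV for each vertex. The function name is hypothetical, the plane is assumed to be centred on the origin in the XY plane, and the 1920 x 1080 values are used as plain units because the real scene scale lives in the screenshot settings.

```python
def plane_grid(width, height, seg_x=2, seg_y=2):
    """Return (x, y, z, u, v) for every vertex of a segmented plane."""
    verts = []
    for j in range(seg_y + 1):
        for i in range(seg_x + 1):
            u = i / seg_x
            v = j / seg_y
            verts.append(((u - 0.5) * width, (v - 0.5) * height, 0.0, u, v))
    return verts


for vert in plane_grid(1920, 1080):
    print(vert)
```

A 2×2 split gives a 3 x 3 grid of 9 vertices: four corners, four edge centres and one centre point.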

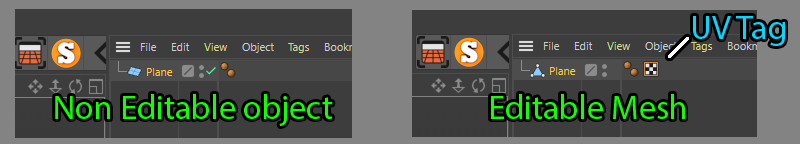

Now we have our simple plane in the 3D view, hit n-b on the keyboard, which is the shortcut to display the object in Gouraud Shading with Lines mode. The object is still in object mode, which means we can change it parametrically, in this case the divisions, on the fly. However, we need the object to be editable, and you can do this by making sure the object is selected and hitting C on the keyboard. The only way to get back to parametric mode is to undo, so you need to be happy with your settings before you make it editable. I quite often save in versions, so now would be a good time to hit save as “callmenames_v001” before hitting C and then save again as “callmenames_v002”.

Quick note here, for all the rest of this post, the orientation of the 3d view camera is at -Z to the mesh. +X is right +Y is Up and +Z is moving away from the camera.

Once you hit that C key you will see the icon change from a plane into a triangle, which means this is now a mesh. You will also see a black and white chequer box appear, and that is the UV tag. This is what defines the pixel space for our object; without it you won't be able to apply an image or a movie to it as there's no map for which 2D pixel should go where on the 3D object.

Let's delete this, just to show an example of how you add a UV tag if you've generated your mesh via another method, or maybe imported it from something with no UV.

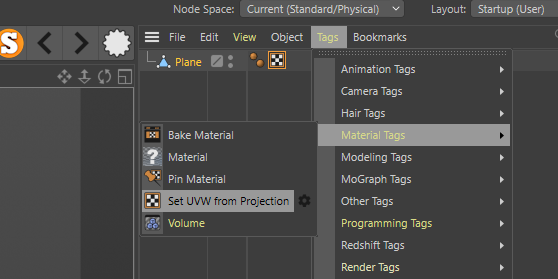

The easiest way to add a UV tag is to make sure your mesh is selected, go to the tags menu, scroll down to the materials option and then click Set UVW from Projection. This will add our tag back on, but our UV map won't be correct, which is the perfect setup for the next step.

Note: In earlier versions of C4D, Set UVW from Projection will be in a different place, but it is easy enough to find by looking through the menus.

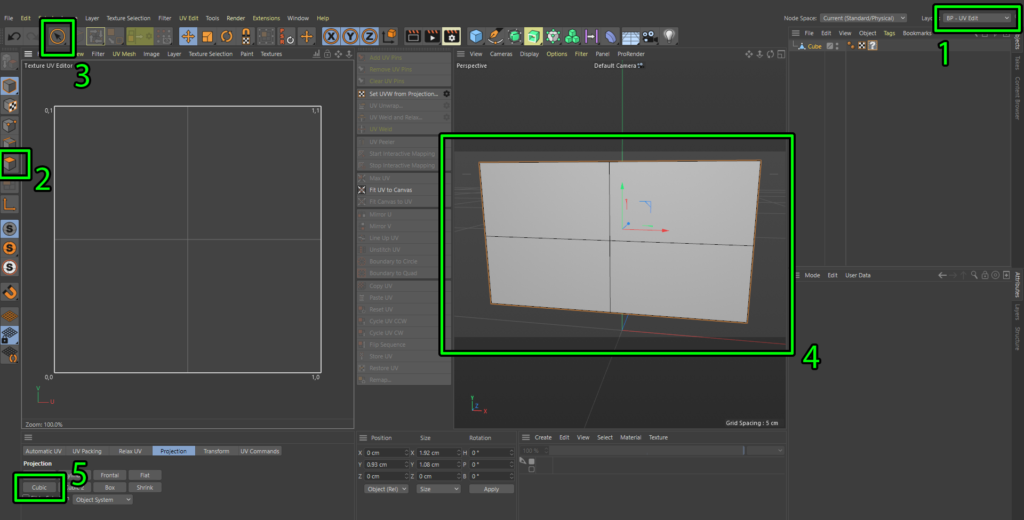

Next step in the process is to go into Cinema's UV mapping layout. Do this by selecting BP- UV Edit in the drop down (1). This will rearrange your work space and display the tools needed for UV editing, next….

(2) make sure the UV Polygons mode is engaged.

(3) select your Live Selection tool.

(4) make sure all the polygons are selected on your object.

(5) and finally hit the cubic button.

Once you hit that button, you will see the UV texture editor window change to 4 squares which represent the UV space of the 4 quadrants that make up the 3D object. Now is a pretty good time to click the other buttons in the UV mapping window and see what happens, but make sure you're back on Cubic at the end.

At the time of writing this Maxon updated their UV tools and completely rearranged the UV layout space. Here's a screenshot to reflect those changes if you're having trouble finding them. Also the Materials window is now below the 3D view port, which you will need to know for the next part if you're running R22.

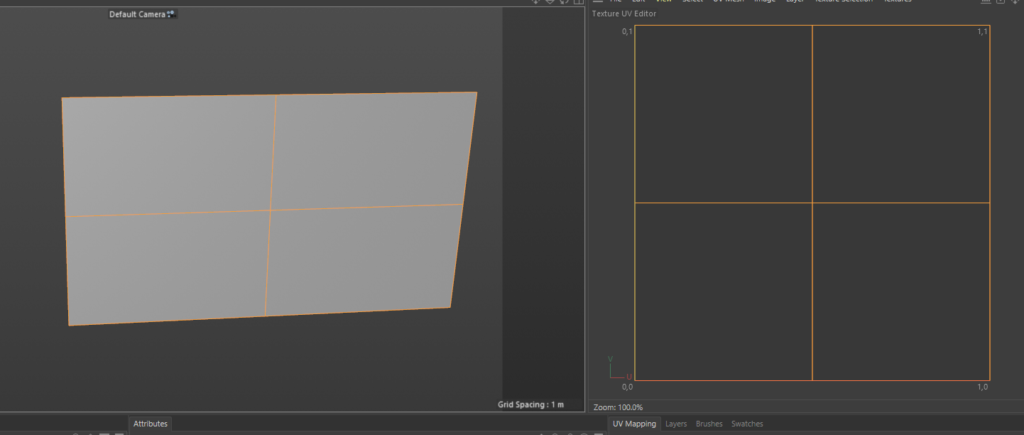

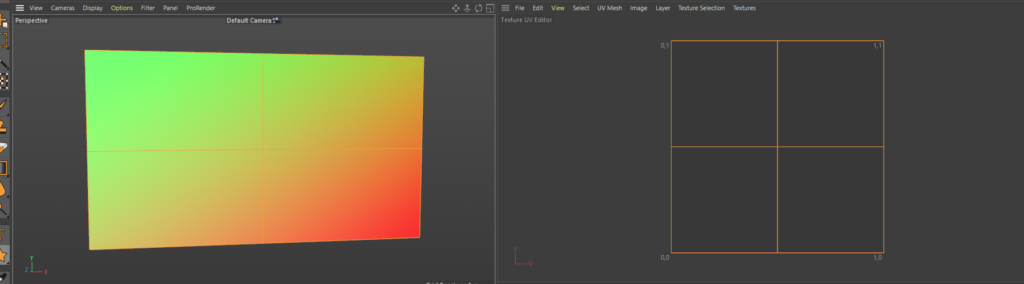

Now you should see something like below, but we need to make sure none of our UV squares are flipped or rotated, which can happen quite often when you're dealing with multiple screens. In the next step I'll show you my method for checking this super quickly in Cinema.

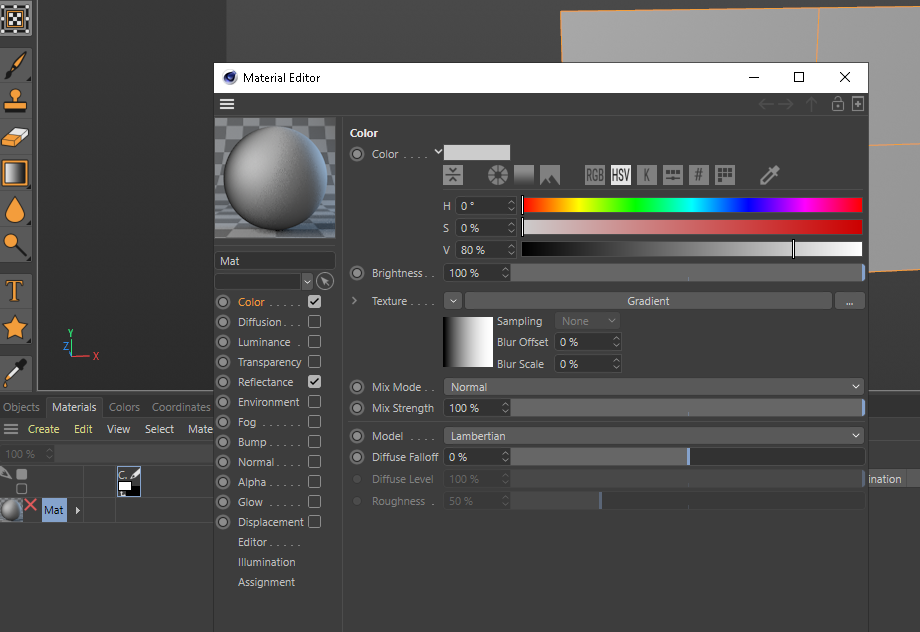

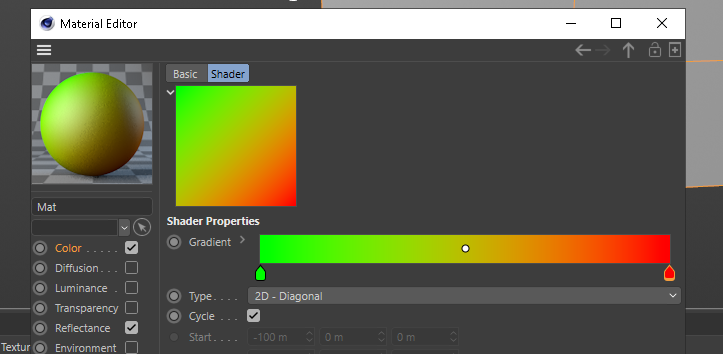

Select the materials tab, create a new default material and then double click the material to bring up the material editor window. Make sure colour is selected and then in the texture drop down arrow select gradient. This will give us a default gradient of black to white horizontally.

We want the gradient to be diagonal and my preference is a green to red gradient. To do this double click the gradient square window, which will bring up another window, and copy my settings below. You change the colours by double clicking on the gradient tags below the horizontal bar. Once you're done, close the window and now we have the ultimate orientation checking tool.

Drag your new texture onto your model and if everything is in the correct orientation you will always see green top left and red bottom right. If this isn't the case then something is flipped in the X or Y.
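The logic behind the diagonal gradient check can be sketched in a few lines of Python. This is only an illustration with hypothetical function names: it assumes UV (0,0) is the top left of the texture (green) and (1,1) is the bottom right (red), and it only distinguishes flips, not 90 degree rotations.

```python
def gradient_t(u, v):
    """Position along the diagonal gradient: 0.0 is pure green, 1.0 is pure red."""
    return (u + v) / 2


def diagnose(uv_top_left, uv_top_right):
    """Guess the flip state from the UVs at the corners you SEE as top left / top right."""
    tl = gradient_t(*uv_top_left)
    tr = gradient_t(*uv_top_right)
    if tl == 0.0:
        return "ok"                  # green shows top left as expected
    if tl == 1.0:
        return "flipped in X and Y"  # red shows top left
    # Half-way colour at top left means exactly one axis is flipped
    return "flipped in X" if tr == 0.0 else "flipped in Y"


print(diagnose((0, 0), (1, 0)))  # correct mapping
print(diagnose((1, 0), (0, 0)))  # mirrored left to right
print(diagnose((0, 1), (1, 1)))  # mirrored top to bottom
```

A plain horizontal or vertical gradient would only reveal one of the two flips, which is why the diagonal is so handy.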

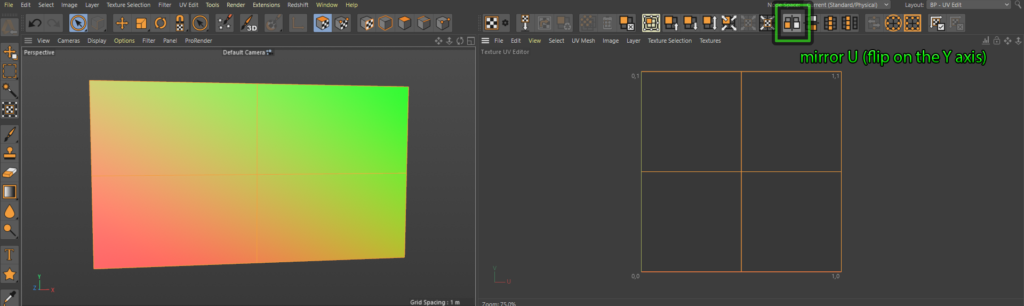

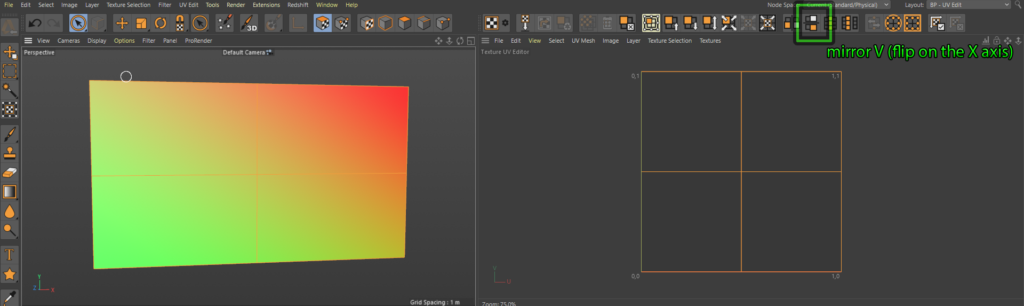

Below are a couple of examples of what you see if the UV is flipped. Make sure the whole object is still selected and you're in UV Polygon mode. If you click the highlighted buttons you can see the effects of the flipping and orientation.

And then to get even more detailed you can select individual polygons and flip them, move them, do whatever you like with them and really mess up your UV texture space. This is a great example of how the diagonal gradient can quickly help identify incorrect flips.

I'm not gonna cover all the buttons and tools here in this post, this will become more in depth in the next one, in which we will unwrap a cube. Now that we have our mesh UV'd and everything is orientated correctly we need to check our polygon normals. Basically the normals define which is the front and which is the back of the polygon. This is really important for disguise as it uses this information when calculating various things. For example, if your normals are facing away from the projector then dynamic soft edge will not work correctly.

To make sure the normals are facing the correct way, jump back into your “Startup (User)” layout. Make sure you're in polygon selection mode and select all the polygons in the scene, then in the options tab shown below, turn on Polygon normals. Now you will see some tiny arrows in the view port showing which is the front face of the polygon, and we can see everything is facing the correct way. If you do need to change the orientation the shortcut is to hit U then R and you will see the arrows flip to the other side.
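For the curious, this is roughly how a "is this polygon facing the projector" test works in maths terms, sketched in plain Python. The function names are hypothetical, it is not how disguise or Cinema implement it, and it assumes counter-clockwise vertex order with a right-handed cross product, so the sign may be the other way round in your software of choice.

```python
def polygon_normal(a, b, c):
    """Un-normalised normal of the triangle a, b, c via the cross product."""
    ab = (b[0] - a[0], b[1] - a[1], b[2] - a[2])
    ac = (c[0] - a[0], c[1] - a[1], c[2] - a[2])
    return (
        ab[1] * ac[2] - ab[2] * ac[1],
        ab[2] * ac[0] - ab[0] * ac[2],
        ab[0] * ac[1] - ab[1] * ac[0],
    )


def faces_point(a, b, c, point):
    """True if the triangle's front face is turned towards the given point."""
    n = polygon_normal(a, b, c)
    to_point = (point[0] - a[0], point[1] - a[1], point[2] - a[2])
    dot = n[0] * to_point[0] + n[1] * to_point[1] + n[2] * to_point[2]
    return dot > 0


tri = ((0, 0, 0), (1, 0, 0), (0, 1, 0))
print(faces_point(*tri, (0, 0, 5)))   # point on the normal side
print(faces_point(*tri, (0, 0, -5)))  # point behind the polygon
```

Reversing the vertex order flips the normal, which is essentially what reversing the normals on a polygon does.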

So now we have our mesh at the size we want, our UVs are correct and our normals are facing the correct way. I’m gonna move the mesh up so that its bottom centre sits at 0,0,0 in world space. I basically moved it up in Y by half the height of the mesh. This means when we bring it into disguise it will sit right on 0,0,0 too.
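The same move can be written as a few lines of arithmetic. This is just a sketch on a plain list of vertices, assuming Y is up as it is in Cinema; `sit_on_origin` is a name I made up, not a Cinema or disguise function.

```python
def sit_on_origin(vertices):
    """Shift vertices so the bounding box's bottom centre lands on (0, 0, 0).

    Assumes Y is the up axis. Returns a new list of (x, y, z) tuples.
    """
    xs, ys, zs = zip(*vertices)
    centre_x = (min(xs) + max(xs)) / 2
    centre_z = (min(zs) + max(zs)) / 2
    base_y = min(ys)
    return [(x - centre_x, y - base_y, z - centre_z) for x, y, z in vertices]


# Hypothetical 4 x 2 plane centred on the origin, so its bottom edge is at y = -1.
plane = [(-2.0, -1.0, 0.0), (2.0, -1.0, 0.0), (2.0, 1.0, 0.0), (-2.0, 1.0, 0.0)]
moved = sit_on_origin(plane)
print(moved)
```

For a mesh that starts centred on the origin, subtracting the lowest Y value is the same as moving it up by half its height, which is what I did by hand.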

OK let’s export this thing into disguise! Currently Cinema’s inbuilt export tools are pretty crap and I’ll get onto the other options for exporting in future posts. For this occasion we will use the standard export option. To access this go to the File tab, scroll down to Export then select Wavefront OBJ at the bottom of the list.

This will bring up a dialog box like the one below. I’ve created a preset for disguise and these are the export settings you should use. You can export straight into the disguise mesh folder and save it as whatever you wish.

Big Note: One of the most useful things you can do to make your life easier in the long term is understand the import and export characteristics of the various software packages: knowing what needs the Z flipping and how the default scaling factor differs between them.

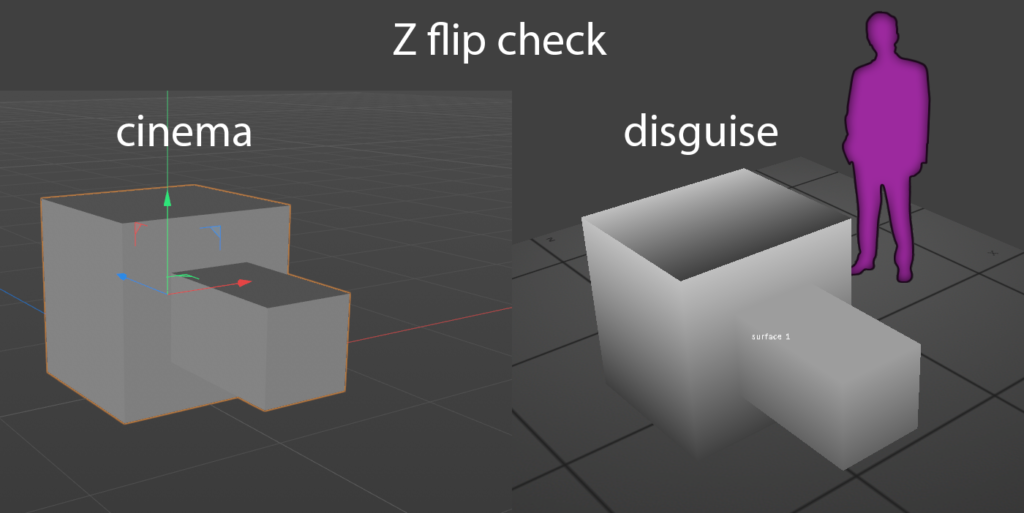

Below is a simple shape I export to check z-flips between software. The small nubbin is always facing Z-, so it is really clear when importing into disguise whether the Z needs flipping: if it does, the nubbin will be facing Z+, the wrong way.

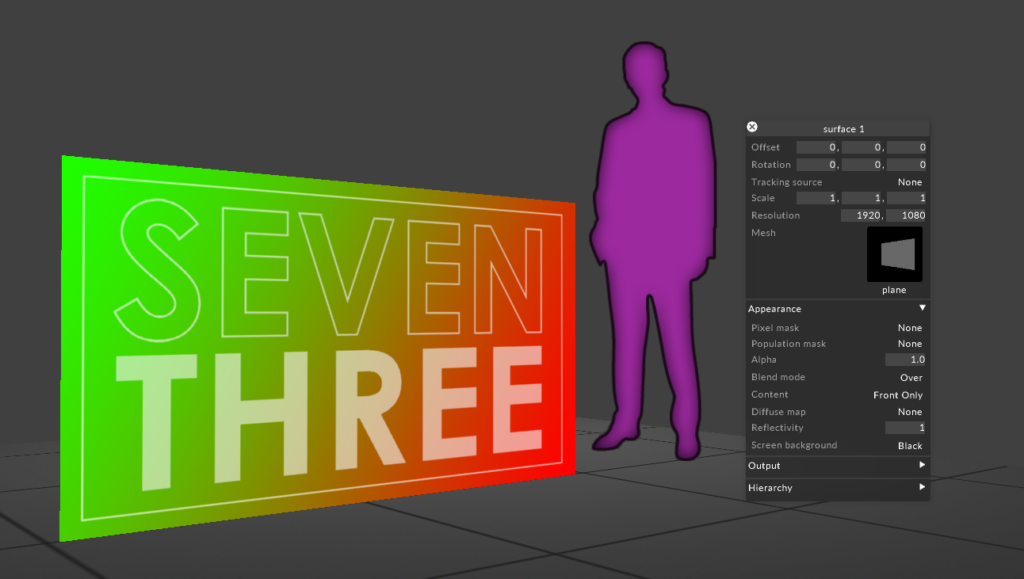
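If you ever need to do the Z flip yourself rather than with an export tick box, here is a rough Python sketch that works on the text of an OBJ file. It only handles the common `v`, `vn` and `f` lines, and whether the face winding also needs reversing depends on the packages involved, so I have left that as a switch. `flip_obj_z` is my own name for illustration, not part of any exporter.

```python
def flip_obj_z(obj_text, reverse_winding=True):
    """Negate Z on vertex and normal lines of a Wavefront OBJ string.

    Mirroring an axis turns the mesh inside out, so by default the vertex
    order of each face is reversed as well to keep the front faces outward.
    """
    out = []
    for line in obj_text.splitlines():
        parts = line.split()
        if parts and parts[0] in ("v", "vn") and len(parts) >= 4:
            z = -float(parts[3])
            if z == 0:
                z = 0.0  # avoid writing "-0.0"
            parts[3] = repr(z)
            out.append(" ".join(parts))
        elif parts and parts[0] == "f" and reverse_winding:
            out.append(" ".join(["f"] + parts[1:][::-1]))
        else:
            out.append(line)  # vt, comments, groups etc. pass through untouched
    return "\n".join(out)


# Tiny hypothetical OBJ fragment: one vertex, one normal, one UV, one face.
sample = "v 1 2 3\nvn 0 0 1\nvt 0.5 0.5\nf 1/1/1 2/2/1 3/3/1"
print(flip_obj_z(sample))
```

Running a test shape like the nubbin through this and importing both versions is a quick way to find out which one your target software expects.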

And here we are finally: our generated plane from Cinema, exported and imported into disguise. I’m going to assume you understand the basics of how to import the mesh and bring it into disguise. All scaling, position and orientation is correct and I double checked this by generating a little test pattern, basically my favourite UV checker gradient and some text.

Closing

That’s it. I hope I’ve managed to pass on some knowledge. I appreciate that these are super baby steps and, with this example, quite a lot of steps to quite a boring result, but I promise you that following this workflow and these techniques will make building projects in Cinema and bringing them into disguise quicker and easier.

Next post coming soon: unwrapping a cube in Cinema, generating a content template and more detailed explanations of the Cinema UV tools.

Please leave any comments, suggestions and questions below and thanks for reading, best Andy

Posted in cinema4d, d3

Tagged 3d modelling, cinema4d, disguise, media server, orienation, scaling, uv, video, workflows, z-flip

2 Comments

Summer is over and what a jolly busy time it was

Here we are, another couple of months between posts, and I’m currently sat in my hotel room in Macau determined to complete this post/rundown in maybe the next hour. Last time I posted I was in Moscow working on the World Cup for Whitelight. Now I’m working on a new show going into Studio City, Macau, touted as the most electrifying stunt show ever. I can’t say much about that because I’m under NDA, which seems to be the case with most of the jobs I’m looking after these days.

Right now I’m scanning through my calendar and photos to put together this post while listening to Max Cooper, whom I’ve been listening to quite a lot recently. Here’s a link below, get your ears around this, it’s lovely.

So, here’s a breakdown of busyness since last posting in June.

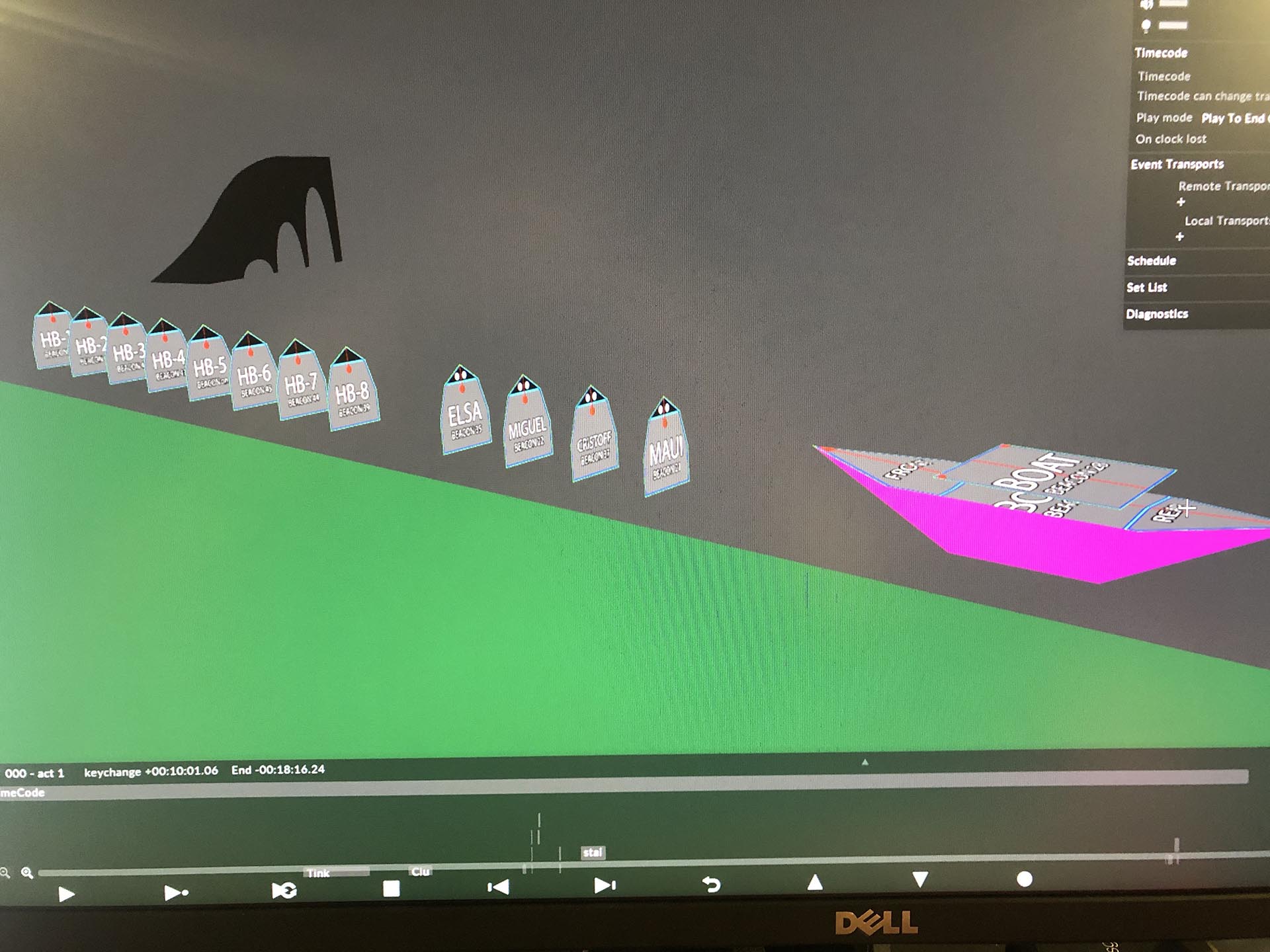

- Starlight express d3 project setup

- D35 Disney on ice with TheHive

- CT Projection mapping for Electric Audi Launch

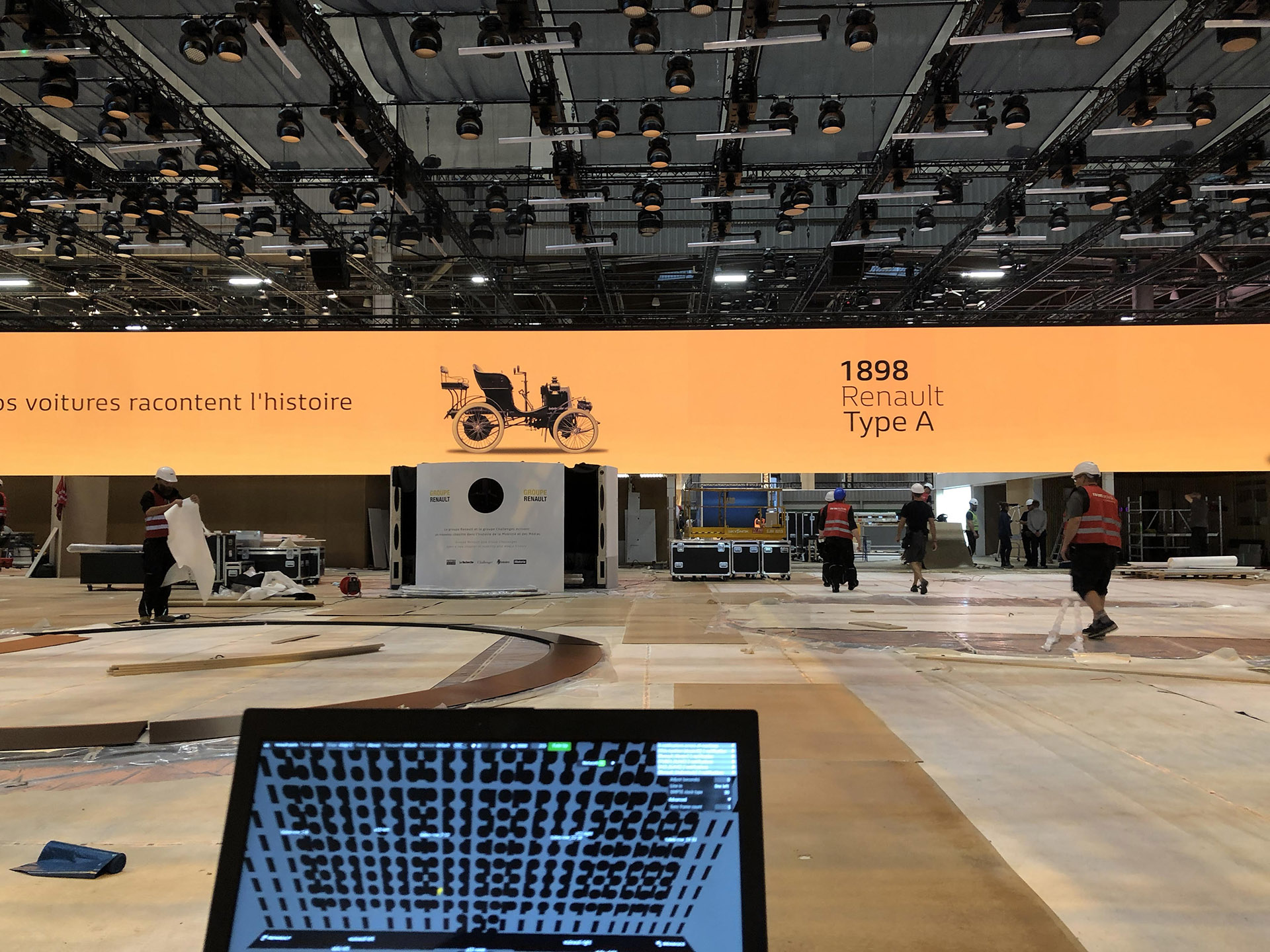

- Paris motor-show with Pixway

- Got the keys to my new flat

- Projection Mapping the Empress Ballroom

- Pixway Open-day

- Projection mapping the Louvre Abu Dhabi

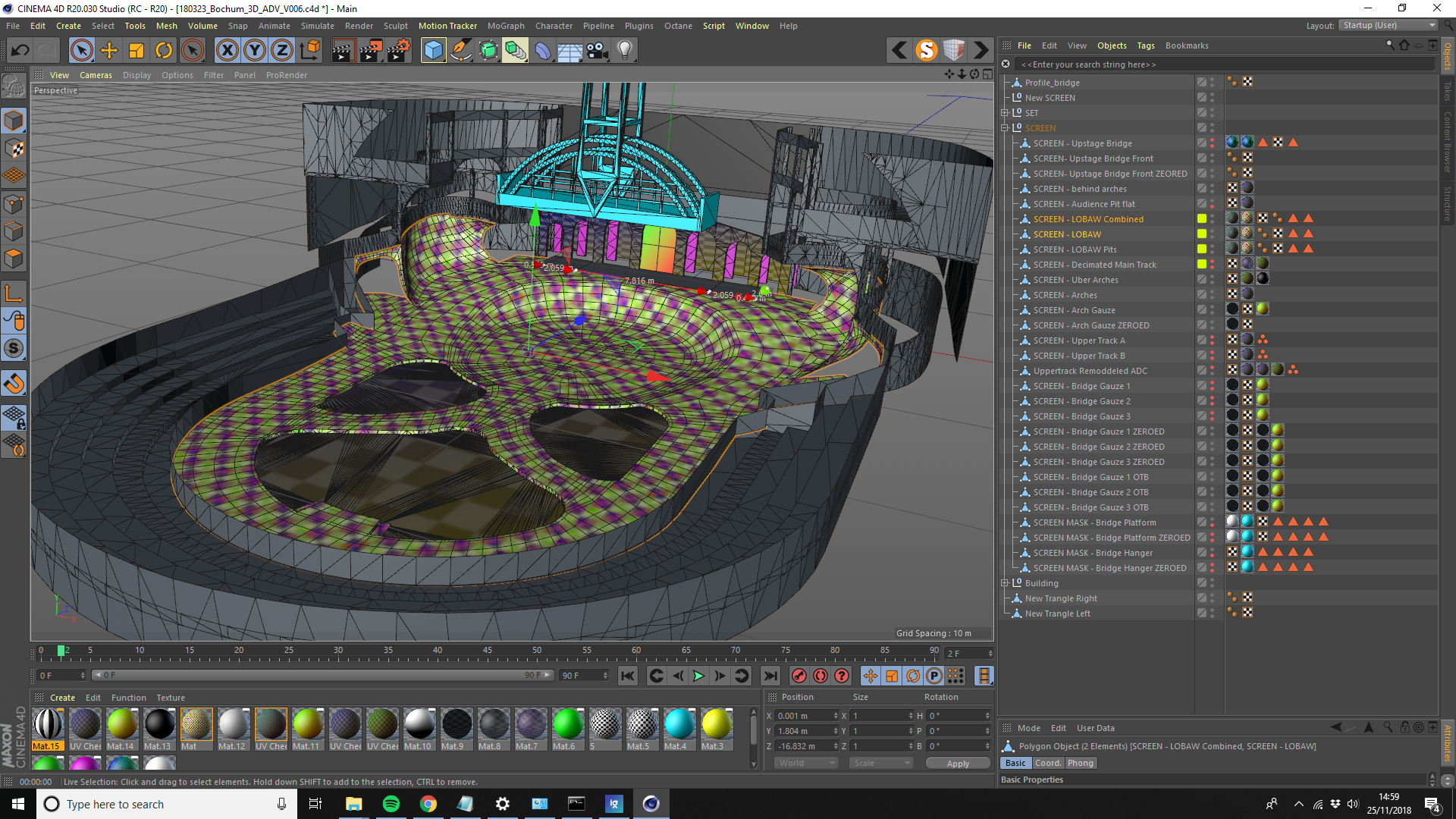

I think it was around April when I first got the email from Duncan asking if I could do the d3 project setup for a big project he was doing in Bochum, Germany. This was for a refurb of Starlight Express, and the project setup spec involved taking the dirty meshed scan data, reconstructing it with sensible UVs and streamlining it for disguise.

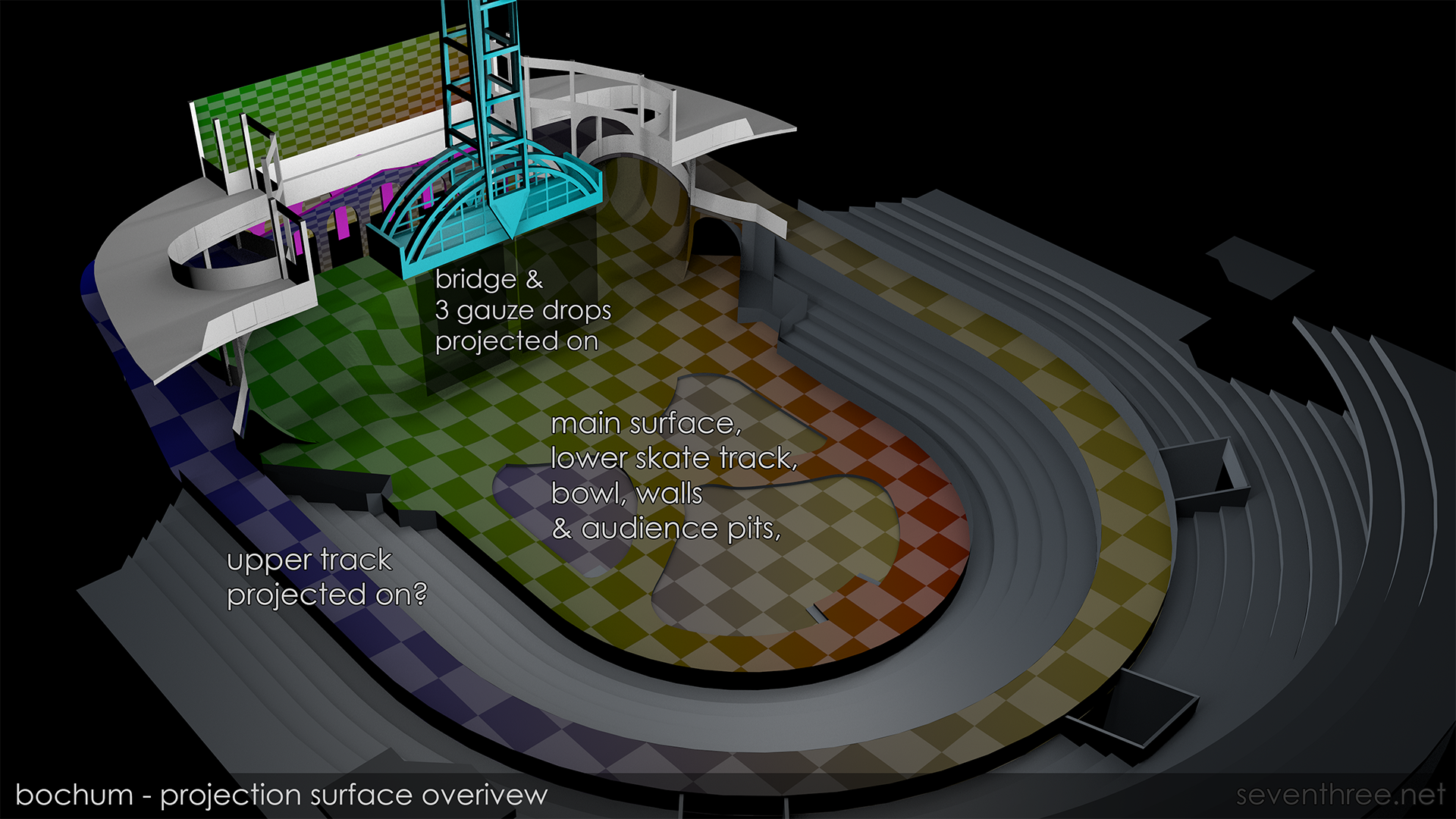

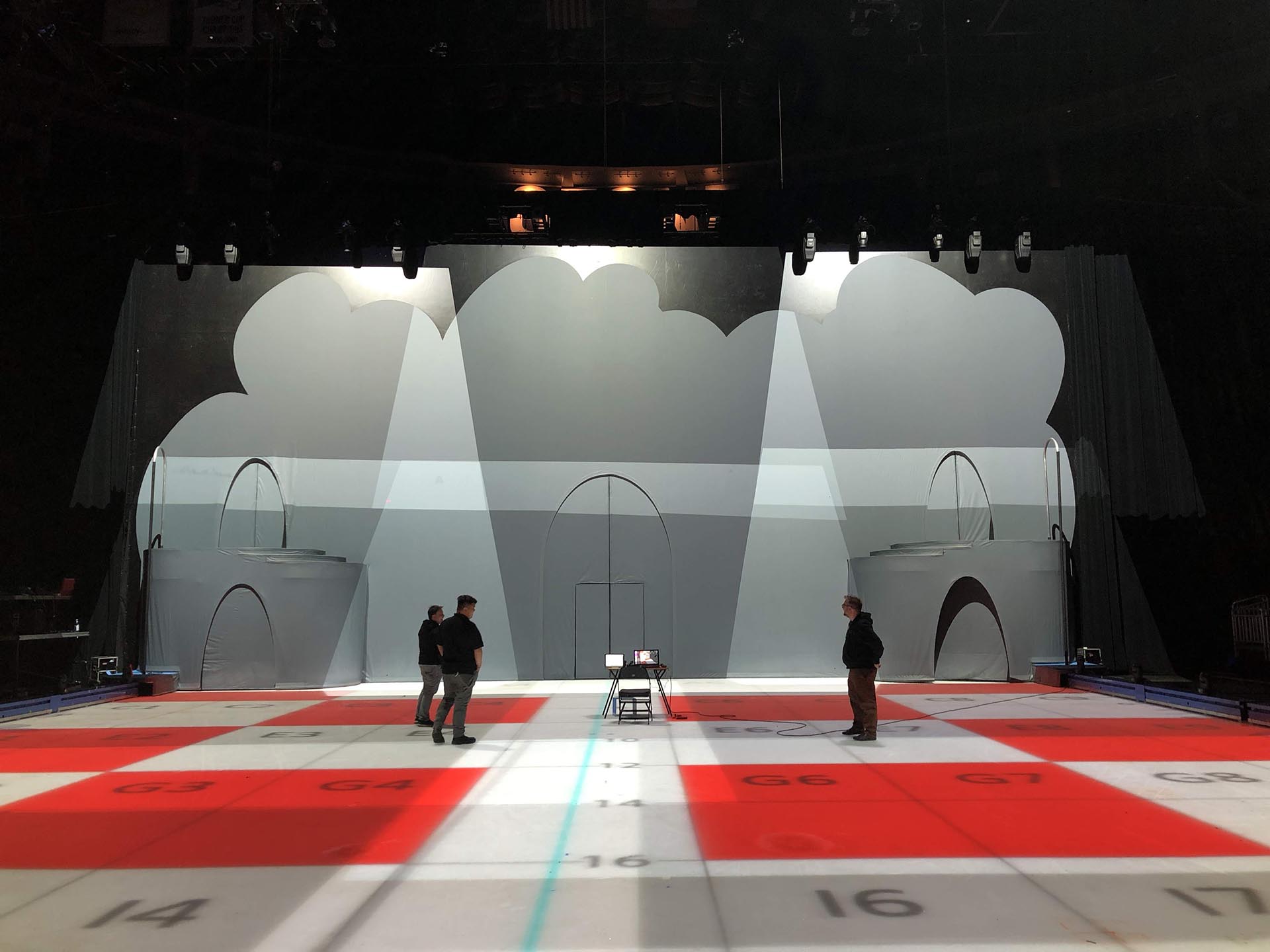

It was a pretty in-depth setup and a lot of cleaning up was required. Above is a later version of my Cinema project, much tidier than the first iteration, and below is an early render I sent to the team to make sure we were all on the same page about what was to be projected on.

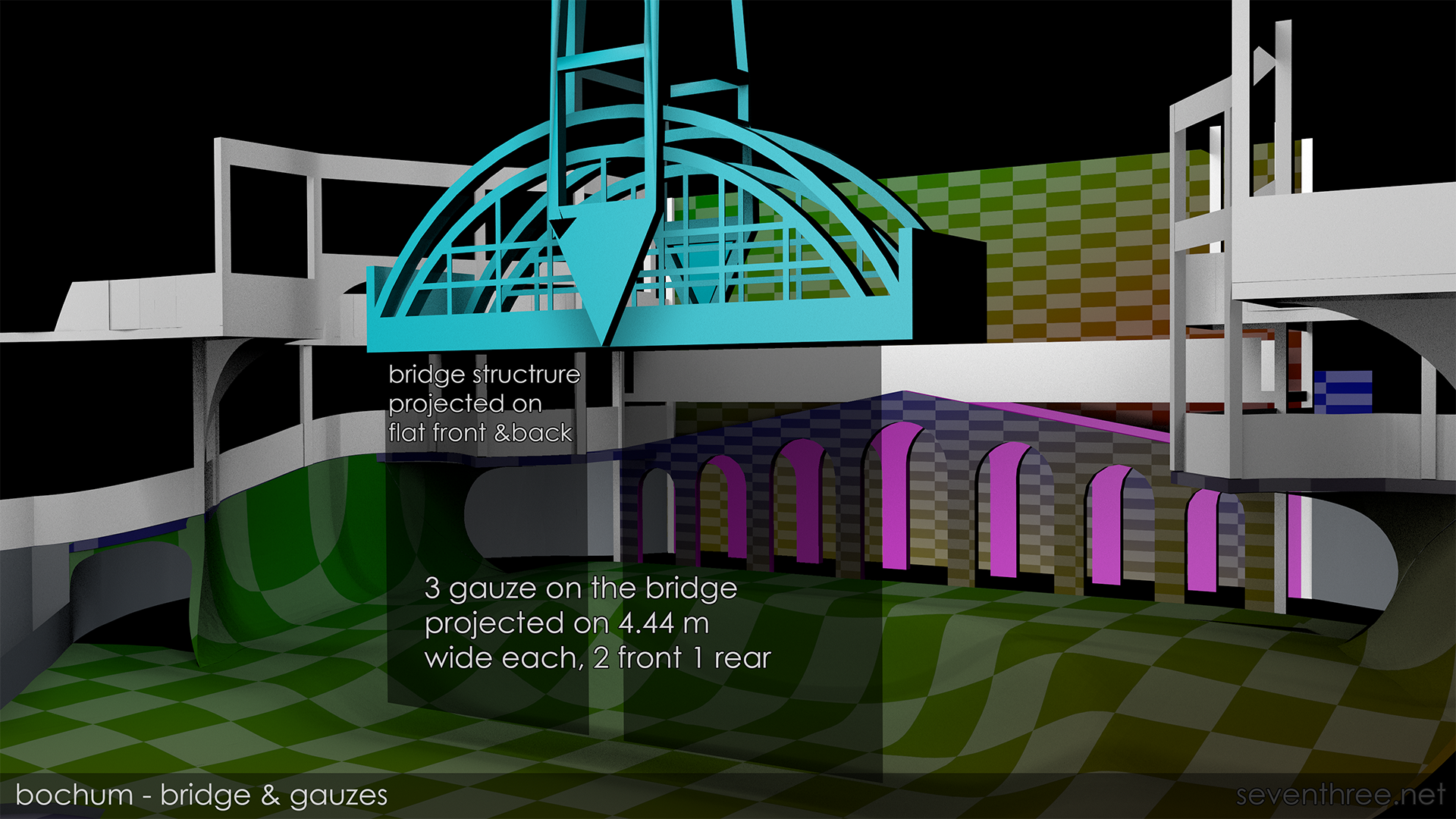

The bridge started out as what I thought was a simple prop, but as the project evolved I made updates requested by Emily to add functionality to the virtual bridge so it was rigged and parented like the actual all-singing, all-dancing moving bridge onsite. This meant that all of the physical moves could be matched in the virtual world to allow tracking projection on the set.

A more detailed overview is on the disguise website here: Starlight Express Bochum

Shortly after getting back from the Moscow job I was on a plane to Orlando with Rich, working for The Hive, to help put together the video for D35, which was the internal name for the Feld Disney on Ice show: Mickey’s Search Party.

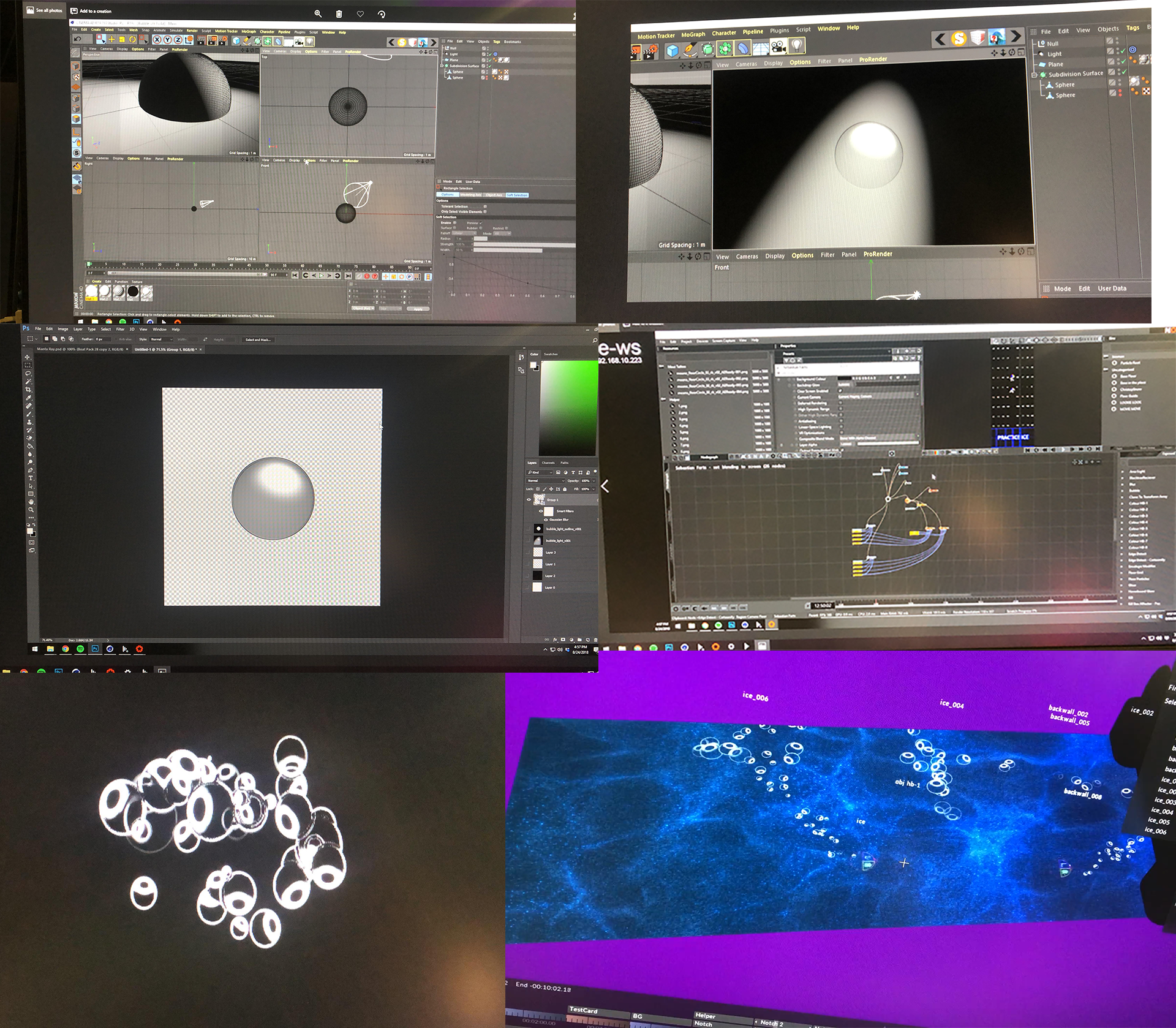

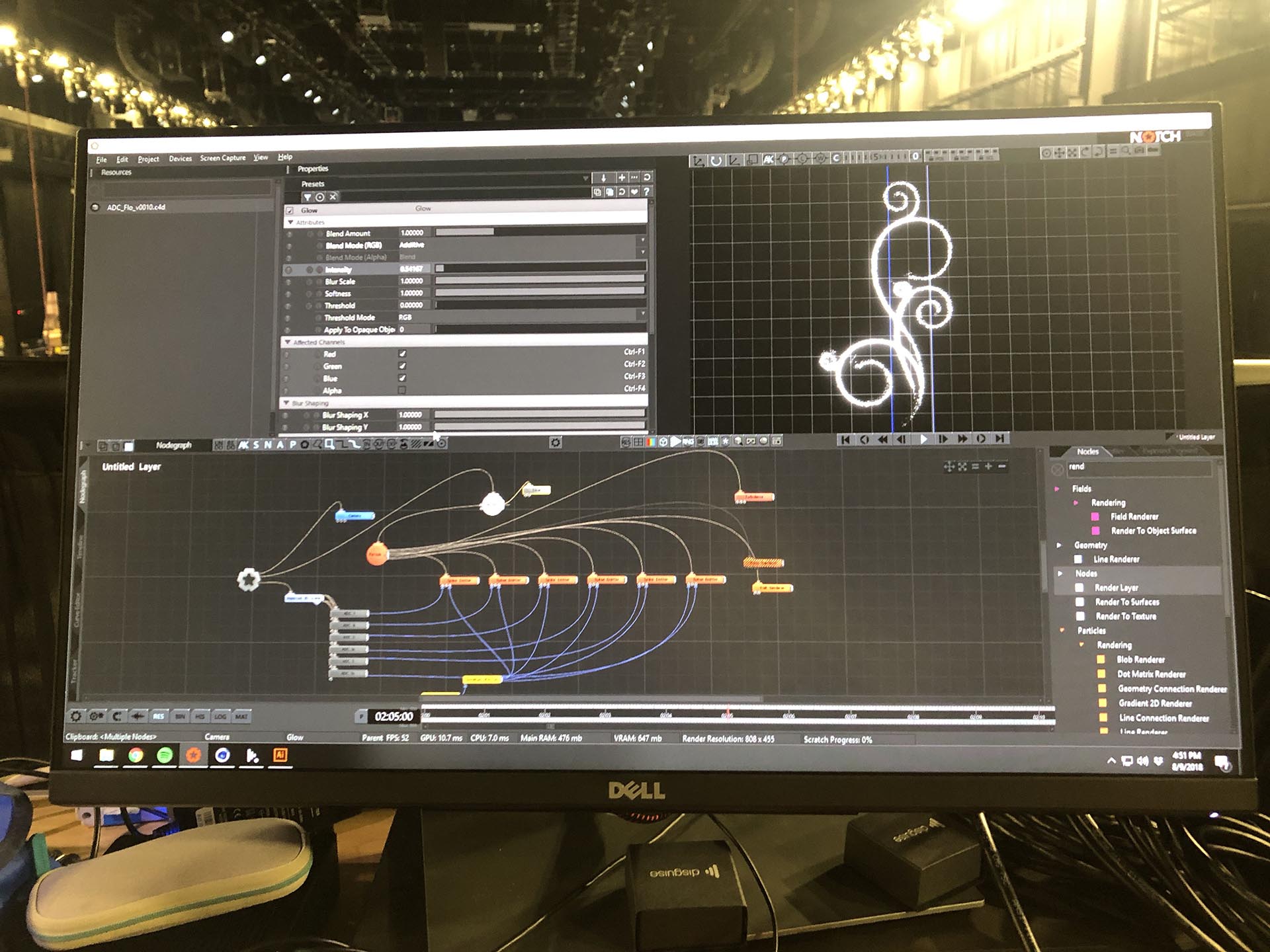

On the show we were projecting onto the ice floor and the scenic rear wall with 12 beamers altogether. Rich was in charge of programming and I was there to assist, putting together Notch blocks and conforming the real-time effects to work with the incoming Blacktrax tracking data. I think there were 50 performers altogether, each tracked with BT, and Sam was using the tracking data to light each performer with lighting specials. On the d3 side of things we were only taking tracking data for individual performers and other scenic elements, such as projecting water ripples behind the boat as it came onstage.

Below is a basic breakdown of the process of creating projected bubbles which emanated and grew from Sebastian the lobster in the Little Mermaid scene. In Cinema I created a double skinned sphere, textured it and lit it to give a shiny bubble effect. I then took it into Photoshop, tidied it up and gave it alpha. I then brought it into Notch and made a basic particle system, turning the number of particles right down to 64 and tweaking the emission rate. I had a pre-made bin with the correct parameters exposed for a camera in the scene and control handles for the particles, which I added to the particle system for control. Bringing this into d3, I hooked up the correct BT beacons and, with some parameters exposed for the bubble like brightness, contrast, emission rate etc., we had projected bubbles emitting, growing and popping behind Sebastian as he skated around the stage.

Rich was incredibly busy on the job. We initially thought it was going to be a gentle first week, starting and getting things ready for the intense rehearsals, but from the first day we were busy. Rich did an outstanding job conforming and cutting together much original Disney content for most of the scenes, with constant updates and changes required from the director. Even though it was mega busy with programming and updates we still managed to find fun by flying our AcroBee micro drone around the arena after we got all our tasks ticked off the list.

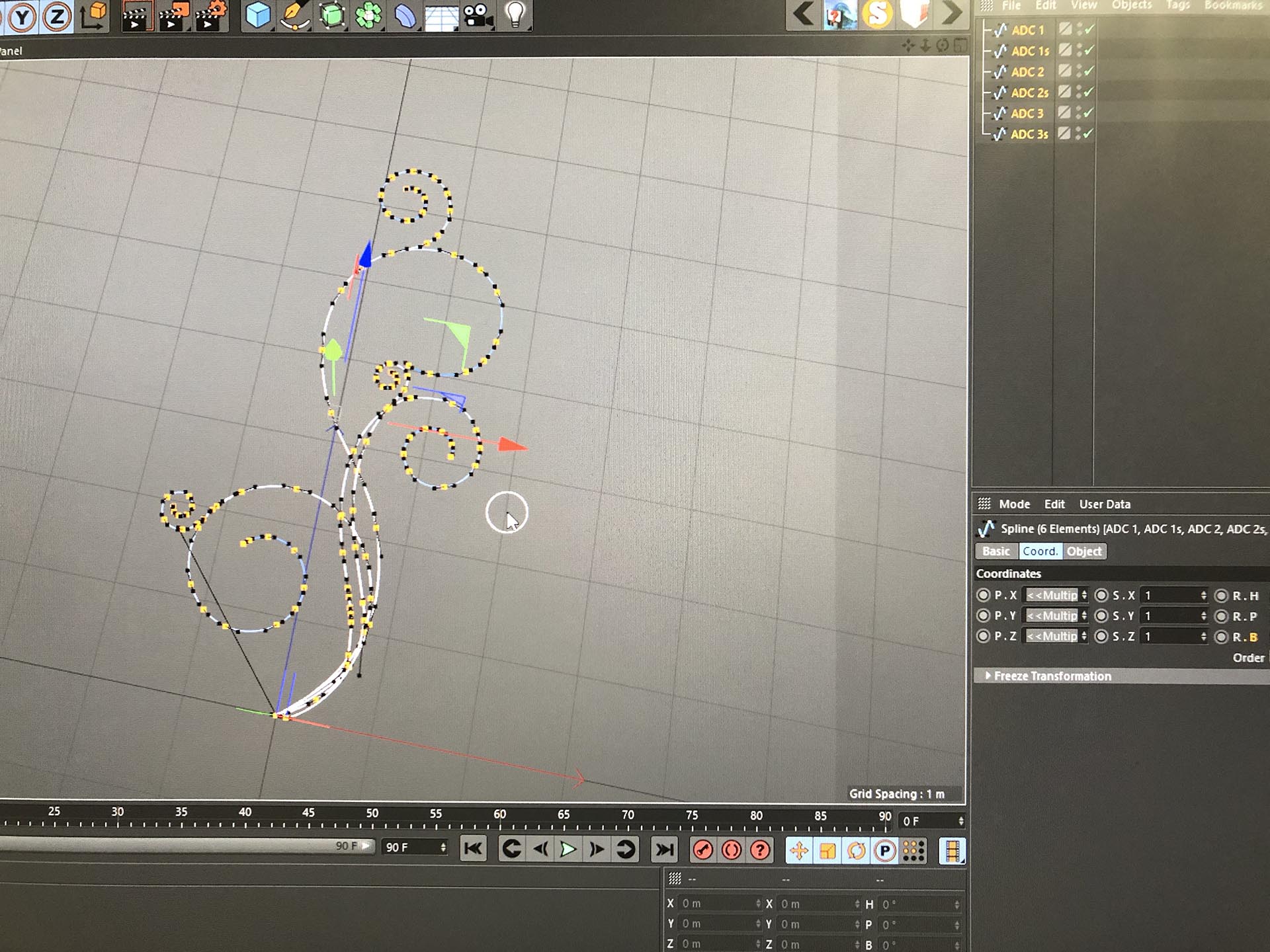

Another really nice Notch moment in the show was the finale, where we were tracking the performer who was playing Elsa from Frozen and I created a Notch effect so we could generate an “ice flourish” on cue at various points throughout the performance.

This was a challenging brief, and after a few days’ work making good use of the spline emitter and doing fine adjustments of the particle parameters we had a really nice looking effect.

This is just a snippet of the many effects and hard work that went into the show. We were working closely with Sam, who is LD for the show, and what a genuinely lovely chap. During the day, before we left the rehearsal venue and went to see the show go into Orlando, Sam took us out in his Cessna to St. Petersburg for lunch, which was so fun.

It was such an incredible view flying out of Bradenton and across the sea to St. Petersburg, what an awesome day.

I arrived back in the UK and eventually Newcastle on the 9th September, where there was a lot of catching up to do with friends. After sorting out all my washing and crap and visiting my parents on the Sunday, I went out for a few beers with James on the Monday night and then Dan joined us later on in Tilleys. I think it was around 21:00 when I got a message from Manuel from Pixway to ask if I was available for a job in San Fran for CT, flying out in the morning. Within 15 minutes I had had an email conversation and a brief chat with Matt from CT and I was booked on the 10:40 flight, Newcastle to London then London to San Francisco.

It was a very last minute call for me as the original operator became sick and had to go to hospital. I didn’t think I would know anyone else on the job, but when I was waiting outside the hotel for Ben from CT I turned around and saw Thomas, a good friend and operator, which was a nice surprise.

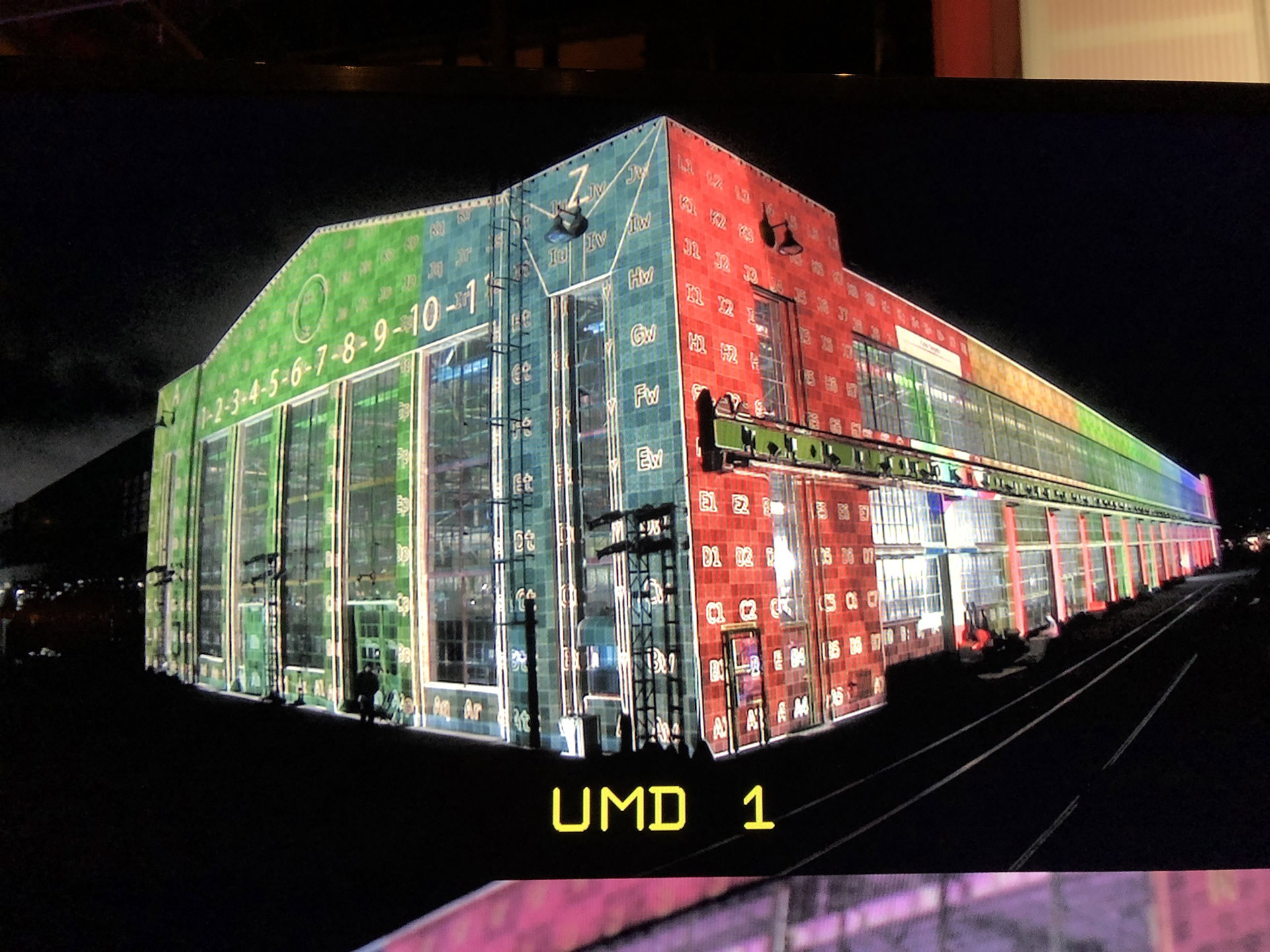

We split up and I was looking after lineup overnight with the projection team. It was the first time I had met any of the CT Vegas guys and everyone was super friendly; it was a really nice team to work with. We had 2 nights for lineup, which consisted of 36 30K projectors covering 2 sides of an old Ford assembly plant on the shore of Berkeley, ironically for the launch of the new Audi e-tron.

It was also nice to finally meet JT from Silent Partner Studios on this gig. We had chatted over email before but never met, so it was a nice surprise to be working on the same job.

Below is a video of the show, the excellent drone show and projection part is from the start to 3 minutes in.

I was not long back from San Francisco and I was travelling again, this time by train and only down the road to Paris to help Pixway on the Paris motor show.

Previous to the Paris show we had done Geneva and Beijing with Pixway for Renault. This was by far the biggest, with a 19k wide LED screen and d3 controlling hundreds of lighting fixtures and LED tape.

In the roof of the exhibition stand we were controlling the pixel batons on over 150 robot arms, and we were synced to timecode from Kuesi’s Medialon, which was also controlling sound and lighting. It was a massive system. I programmed the press launch and the public loops; it was a seamless show and we were pushing a lot of animation content. The whole thing looked so crisp.

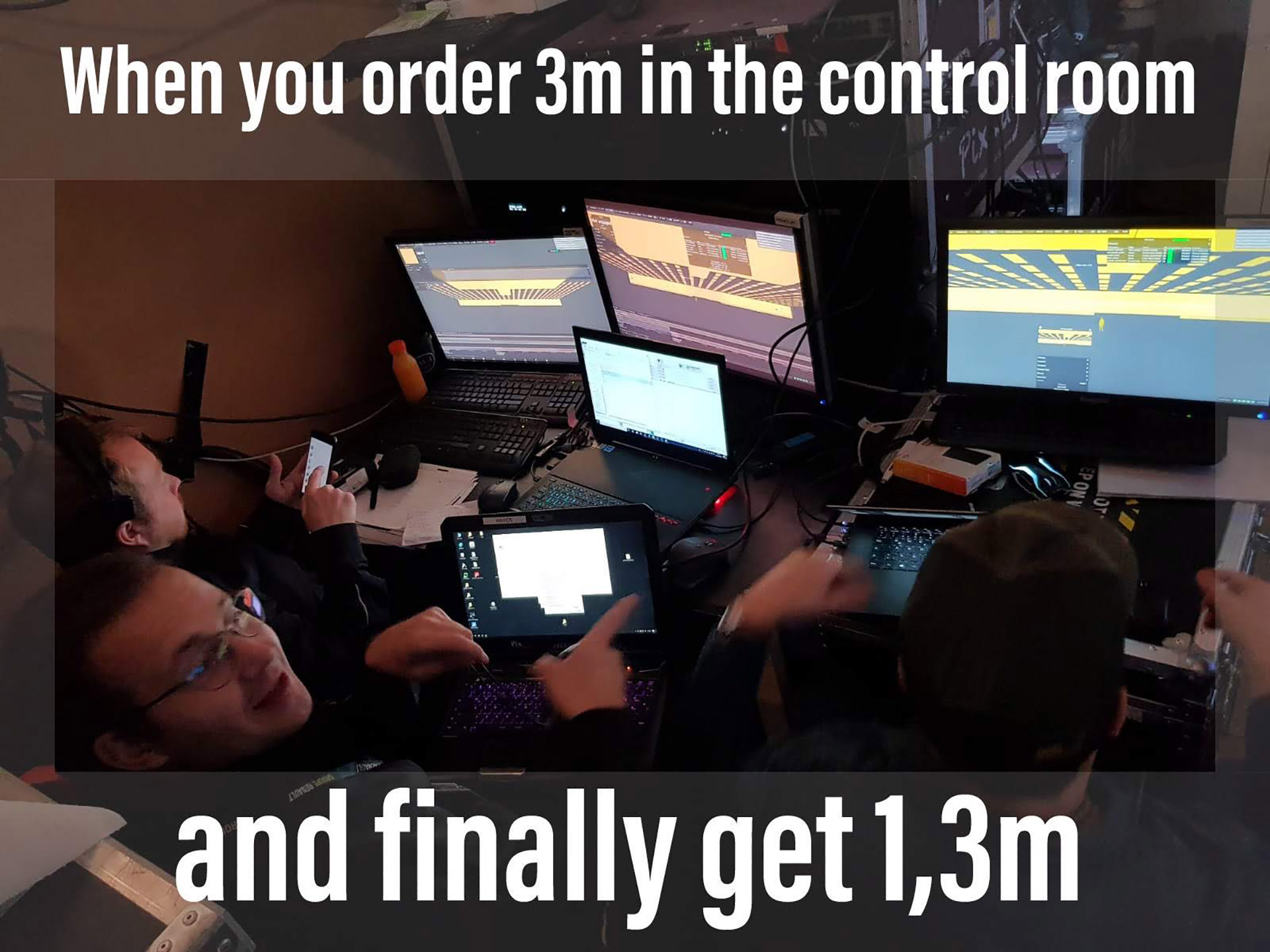

It was pretty cosy in the control room when there were more than 3 of us at video control. Below is an iPhone panorama of the gig; the stand was so big it was impossible to fit the whole thing in a standard picture.

Returning from Paris I was in London for a few days getting training on Omnical, disguise’s new camera-based projection calibration system, which has been working pretty well after optimising the system on this current job. I was also attending the annual disguise come-together event, where people from the disguise community get together and basically get drunk. It was nice to see people I hadn’t seen in quite a while, but also to see people away from work. Look at these drunkards :).

After 5 years of not living anywhere, as I am always working away in different places, and being eternally grateful to friends who have let me stay at their houses when I haven’t been working for a few days, I finally bought a flat. I started the process in June and finally, 4 months later, I got the keys. It’s nice to have a space again and a pad to invite friends and family around to for food/drinks.

Also, it’s so cool to walk down the street and be right on the Newcastle Quayside. No matter how many times I see the Gateshead Millennium Bridge tilt, it’s always impressive to see the engineering in action.

So after being in the flat for only a few days I was off again on another job, this time working with Dan, Dave, Harry and Mike. We were projection mapping onto the roof and walls of the Empress Ballroom in Blackpool as part of the Light Odyssey festival. There were various artists who had created projection work and we had some excellent students from Guildhall who were helping with the physical install and showcasing 2 pieces of animation. I had been teaching for a day at Guildhall, covering the workflow of Cinema 4D and d3 and advanced projection mapping techniques, and was impressed with the students there. Dan and Dave did an incredible job of the planning, which made it a pretty smooth install and lineup. I’ve just come across this excellent video by one of the artists, Ruth Taylor, who contributed to the festival.

Following on from Blackpool I had a couple of days in Newcastle and then I was flying to Berlin to help out with the Pixway open house showcasing disguise, Notch, Stype, Blacktrax, Omnical, the new VX4 media server and general video and entertainment geekiness.

It’s always really cool working with the Pixway guys; everyone works hard and has lots of fun at the same time. Peter, Jamie and Joe were also over from d3 but some of the equipment wasn’t, as it was stuck in customs. So for the Omnical demo Paul went out to a craft shop and brought back some stuff for us to projection map onto. I modelled and UV’d the props and made some content, and then Jamie set to work bringing it together in d3 to make a pretty cool last minute Omnical demo. It looked really neat.

Not only did we have Omnical to demo but there was also the Blacktrax and Notch combination to generate real-time tracking content on LED and projection.

After a busy few days, and before I flew back to Newcastle, Paul took Fransico and myself to the highly recommended Princess Cheesecake shop in Berlin to put on a couple of pounds. It was delicious!

I landed back in Newcastle around 19:00 on the Friday and then the following morning around 11:00 I was off away again, this time to Abu Dhabi for PRG Gearhouse. This was a last minute job where we were projecting in the Louvre Abu Dhabi for the 15th birthday of Etihad Airways and also the launch of the new Etihad scarf.

It was a super short job, basically one night of lineup overnight, and then the following day was show day. It was nice to see Matthew again, who I worked with last year in Turkmenistan, and also great to meet some d3 operators from Asia, Malcolm and Laurence.

The surfaces were straightforward, just big flats, but with a slightly challenging 2×2 & 3×1 projection layout. I marked the centre points of the surfaces to give us a fighting chance and together with the projectionists we got a sweet lineup in a very short space of time.

And that is it, a pretty good roundup I think, and I’ve tried my best to keep on top of my grammar and auto-correct, though I think a few spellings may have slipped through the net. If you’re reading this and I’ve missed off crediting someone please drop me an email and let me know. In the meantime, here’s a face I found in the end of an LED light.

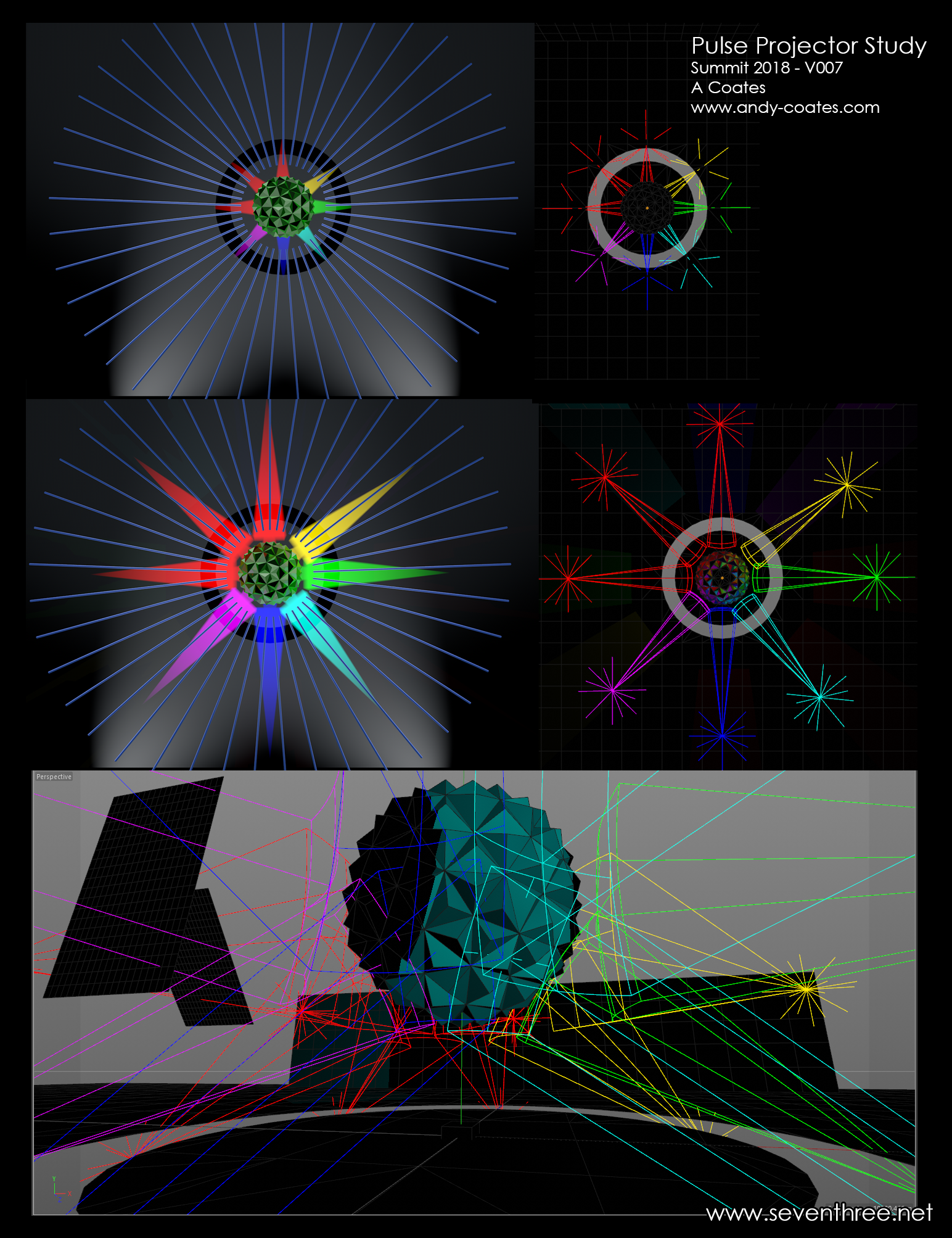

Adobe Summit 2018 London

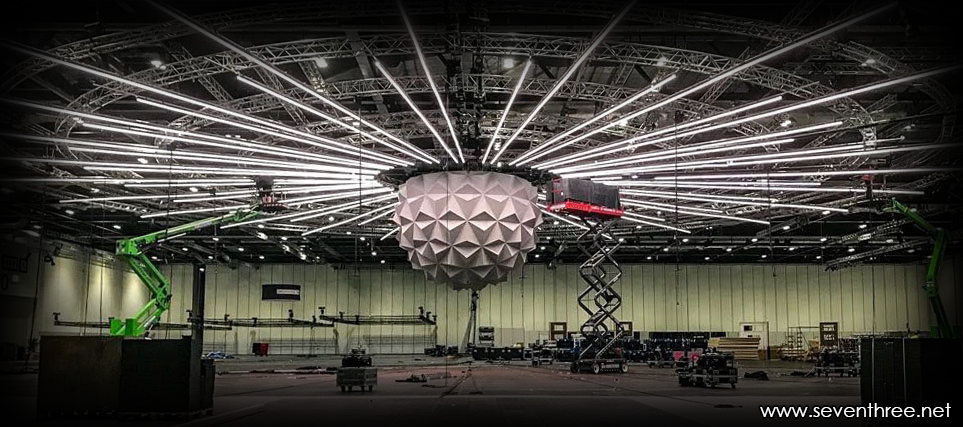

This was the third year Pete from Hawthorn asked me to come and work on Adobe Summit London, the yearly meet about everything Adobe. I think it was around late Feb when Pete was sent some ideas from Russel for the centrepiece of the delegates’ meeting area, which was a 6m diameter object called “Pulse” representing the brain of Adobe Sensei. The challenge with this one was to plan the projection so it was bright enough to be visible in the ambient light of the area and for the projector positions to be non-intrusive.

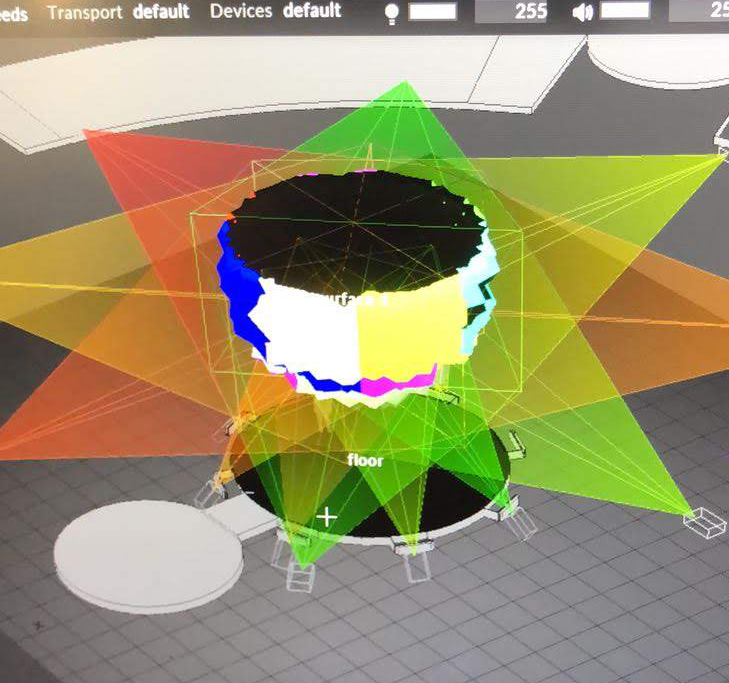

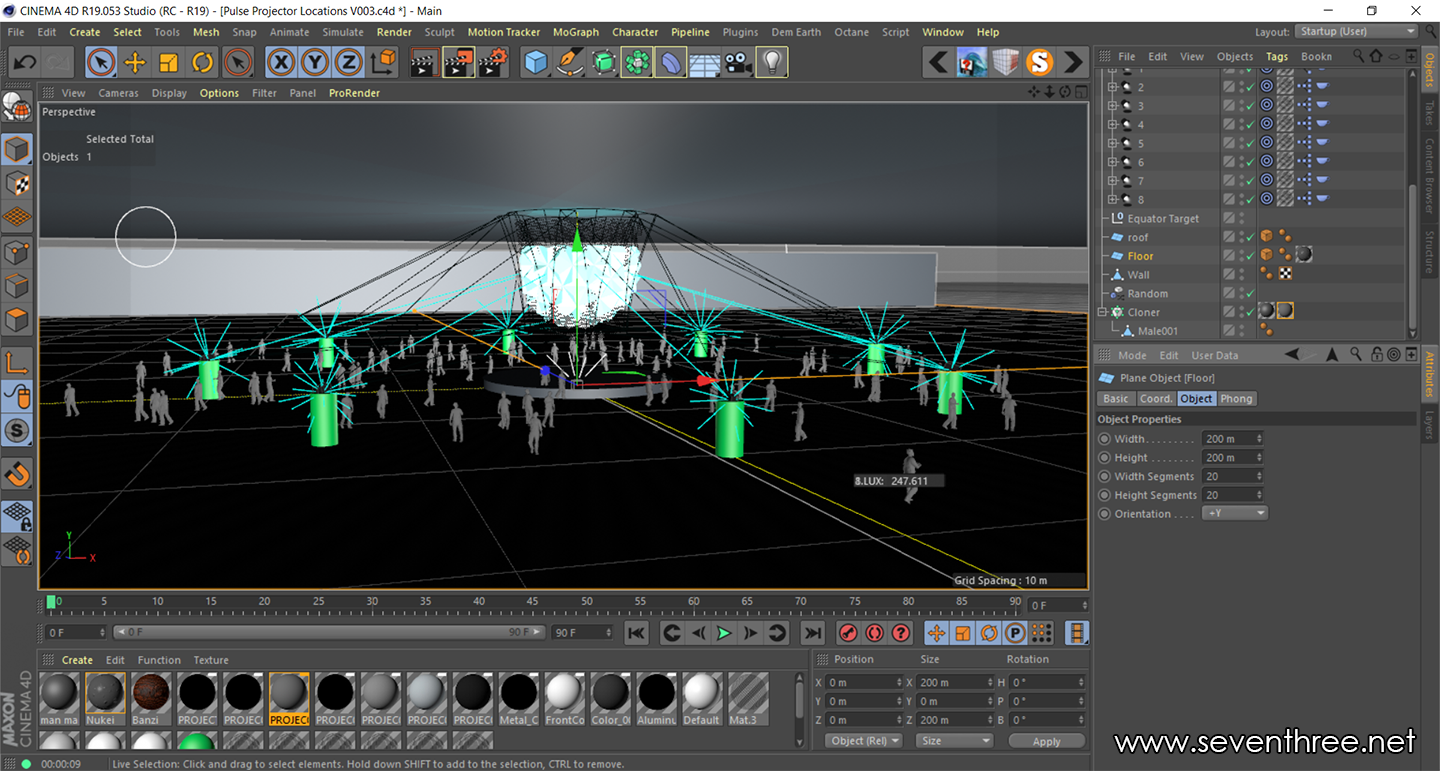

There were quite a lot of email conversations, sharing visualisations of projector positions and trying to keep the coverage as even as possible. I use a combination of Cinema 4D and d3 (disguise) to work out projection. Below is an early Cinema screenshot and also an almost finalised d3 projector setup.

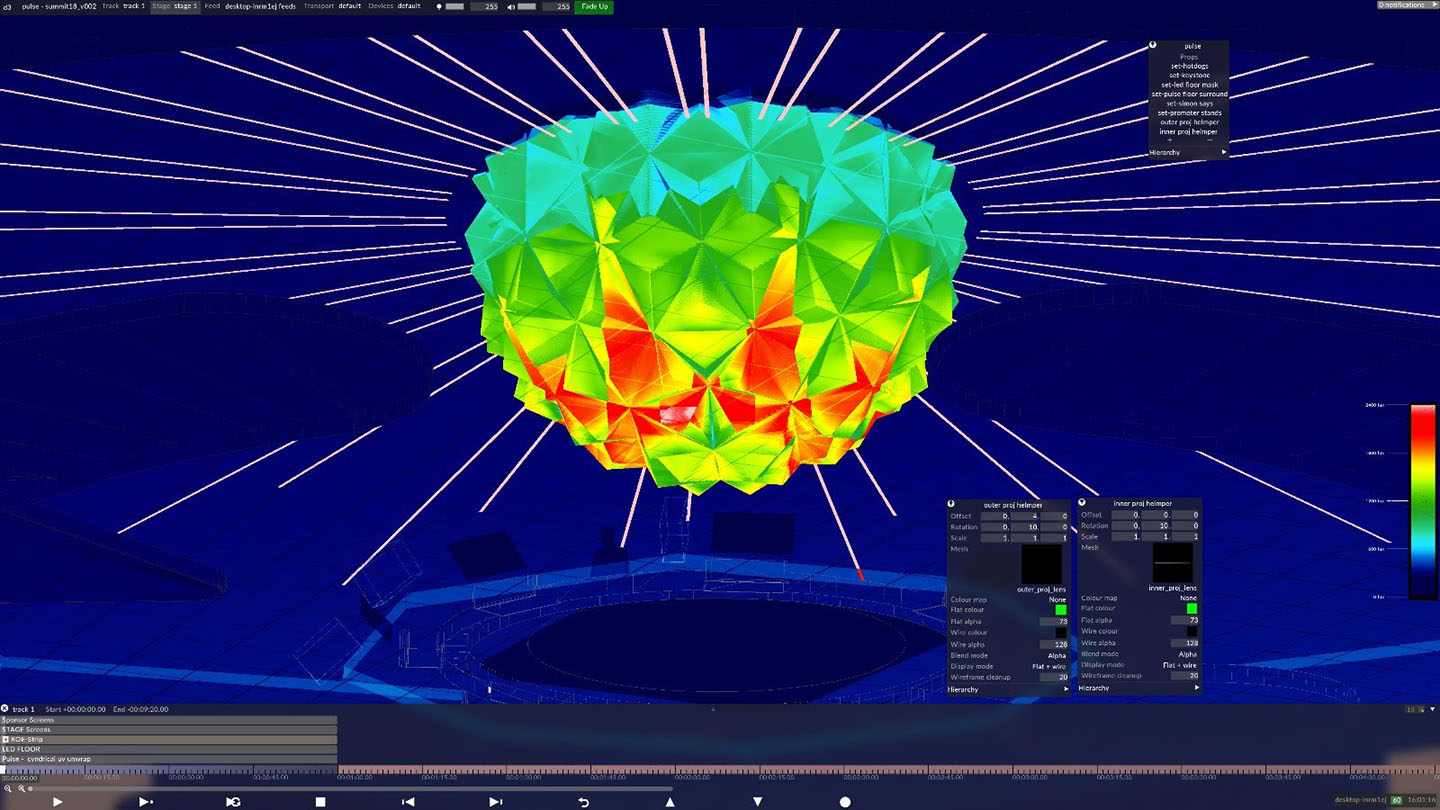

After finalising the study I asked Rich for a second opinion on the setup just to be sure. Rich had a beta release of d3_r15 and sent back a lovely stage output showing the heat map, with a thumbs up.

The Pulse was just one d3 element of the Adobe event I was looking after. There was also the plenary, which was the main conference presentation area, and the party room, where there was a 10m projection mapped tower. The video below by Hawthorn really shows off the event.

For the install and running of the event the d3 team consisted of Rich Porter, Sam Lisher and myself. We were together for the first few days for the install of the Pulse, then I branched off into the plenary where I was looking after the programming and running of the conference show. It was so so good working with Rich & Sam, knowing that they could just make things happen and overcome any technical challenges. Rich, I’m sorry but the only photo I have of you two is you with your eyes closed.

This was us in our coffee shop office in Excel finalising some of the 3d stuff and generally having geeky conversations.

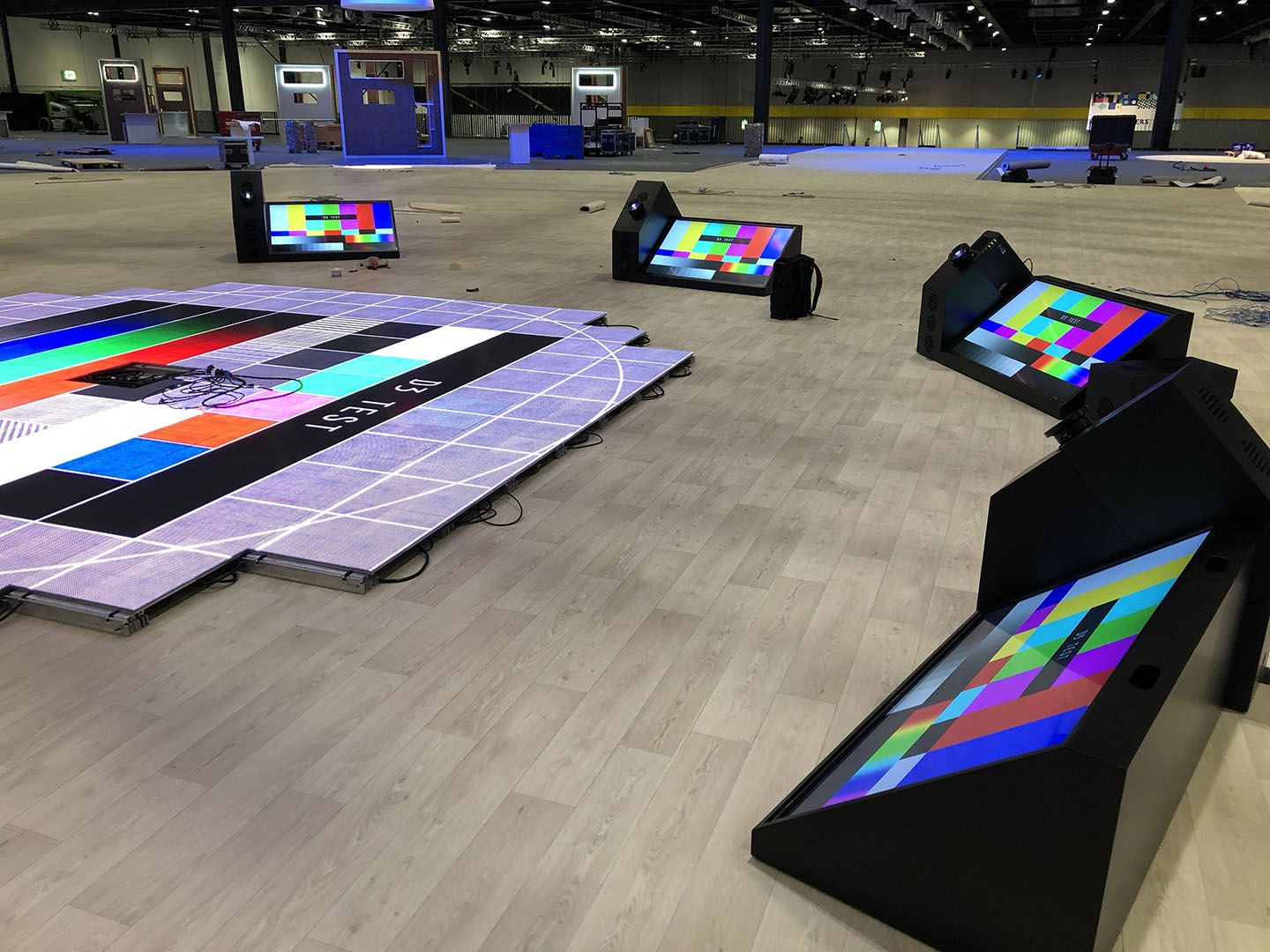

There was a lot of physical assembly, cabling and infrastructure to go into the gig too, of which Dave and his team did a sterling job. In addition to the projection on the Pulse there was an LED floor, 8 monitors on the floor, 8 LED screens around the perimeter and also a load of creative LED tube displaying content.

Above, the Pulse being assembled from its fibreglass component parts, and below, the creative LED tubes being rigged and put in place. It was nice to see everything coming to life after spending a few weeks converting CAD to OBJ and UV’ing it for d3.

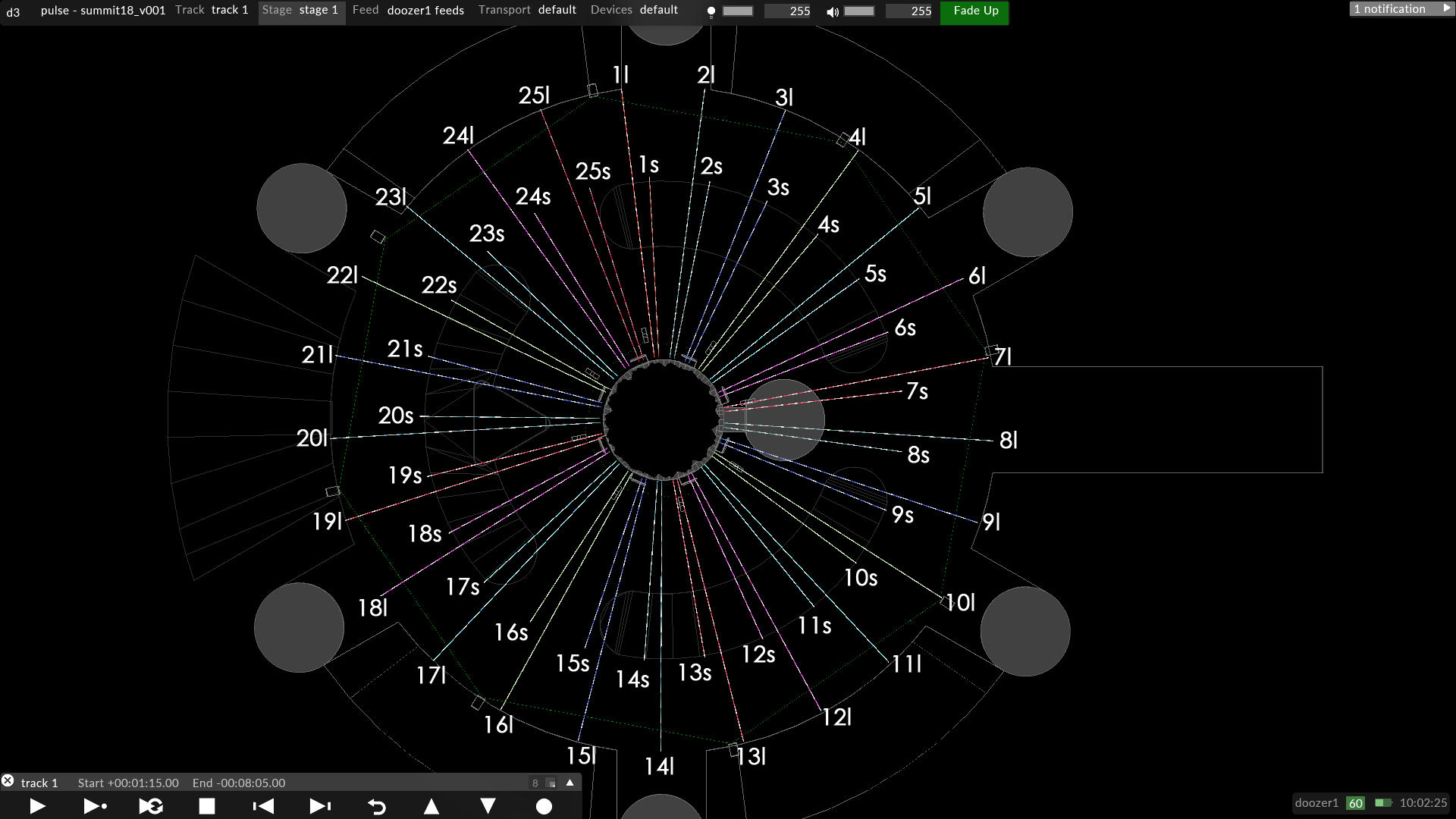

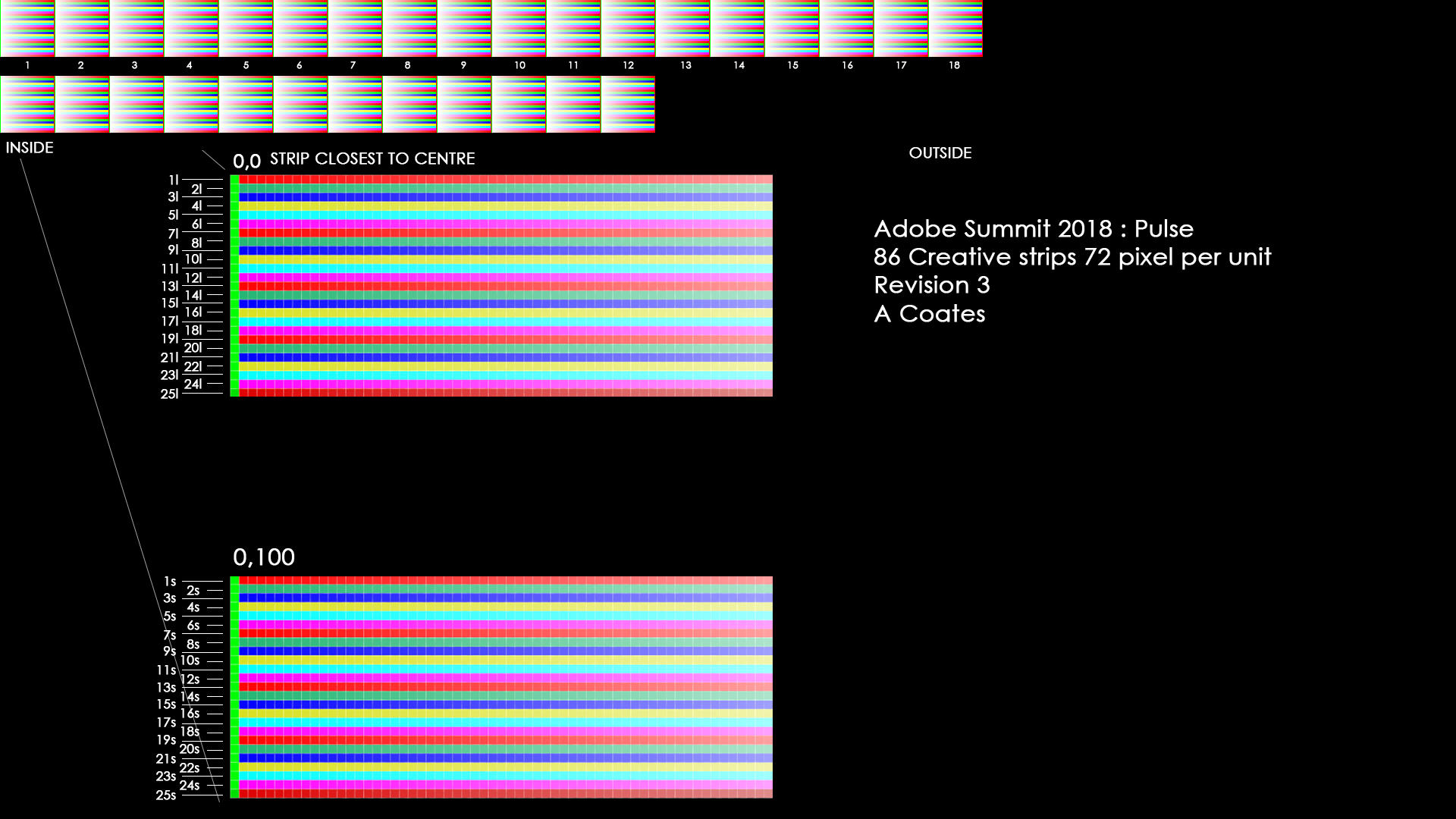

I spent quite a bit of time modelling and doing a tidy UV for the LED strip; below is the final version of the pixel map for the guys looking after the LED strip. Below is a top down view in d3 of the plane I applied the parallel map to, to push content to the LED strips.

Below is the pixel template for the LED strips, which matches up with the above map. The top cluster are the long strips going in order 1 to 25 and the bottom cluster are the shorter LED strips, 1 to 25 top to bottom.

I also made the start pixel of each of the LED modules in the physical construction green and the end pixel red, with a coloured gradient in between, to (a) show the run of LED and (b) easily identify the direction of the module.

It’s such a feeling of satisfaction when it gets rigged and you drop the map in and it looks exactly how you planned it, much to the credit of Justin who was mapping the modules onto the map in the Brompton processors.
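The start-green, end-red idea is easy to sketch in Python. This is not the actual template I built (that was done as an image); the function names and the 30 pixel strip length are made up for the example.

```python
GREEN = (0, 255, 0)
RED = (255, 0, 0)


def strip_gradient(pixel_count):
    """Colours for one LED strip: green at the first pixel, red at the last."""
    if pixel_count < 1:
        raise ValueError("a strip needs at least one pixel")
    last = max(pixel_count - 1, 1)
    return [
        (round(255 * i / last), round(255 * (1 - i / last)), 0)
        for i in range(pixel_count)
    ]


def run_direction(first_pixel, last_pixel):
    """Read the direction of a rigged module from the colours at its two ends."""
    if first_pixel == GREEN and last_pixel == RED:
        return "forward"
    if first_pixel == RED and last_pixel == GREEN:
        return "reversed"
    return "unknown"


# Hypothetical 30 pixel strip.
strip = strip_gradient(30)
print(run_direction(strip[0], strip[-1]))

# The same strip mapped back to front, as it might be if a module was patched the wrong way.
backwards = strip[::-1]
print(run_direction(backwards[0], backwards[-1]))
```

On site you do the same check by eye: if the green end isn’t where the data feeds in, the module is mapped the wrong way round.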

MESHING ABOUT

Things were well underway on the Sunday when I left Sam and Rich to do the lineup on the Pulse. There was a point where, using quick cal, we couldn’t line it up; things just weren’t dropping into place as normal. Pete had organised a laser scan of the structure and we had adjusted the existing model to the actual scan data, so it should have just lined up quite easily, but it didn’t. We can only surmise that in the process of reducing the triangle count when converting the point cloud data to a mesh, some edges and critical points had gotten “dulled” or discarded, resulting in a slightly off final mesh. This was no-one’s fault, as no-one was really aware of how fine the tolerances we were working to were. The rush to turn around the point cloud and reduce the size of the mesh to get it to us quickly in a small file size was probably a large factor in this. We eventually got the raw point cloud data and Rich re-meshed from scratch, and after this everything started to work as expected.

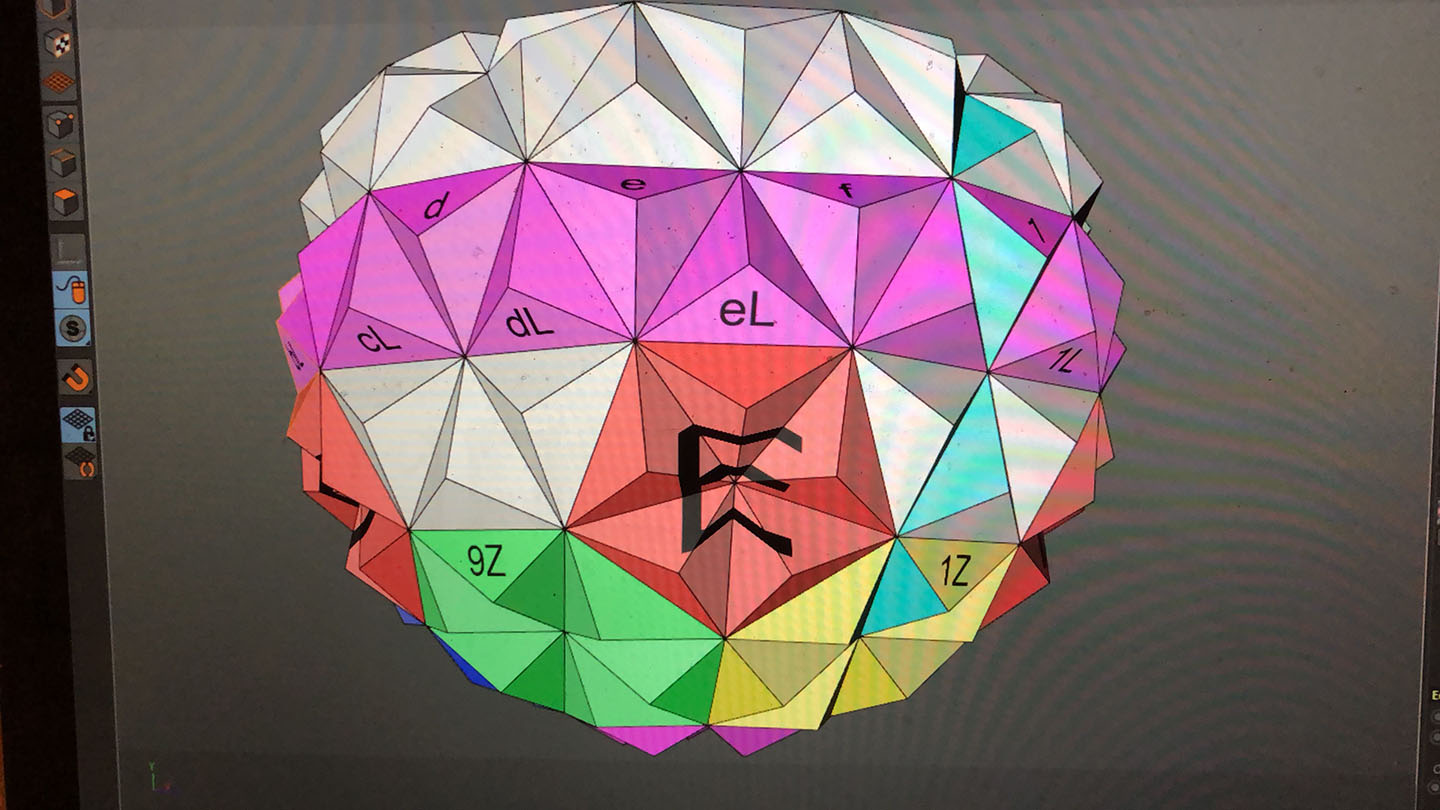

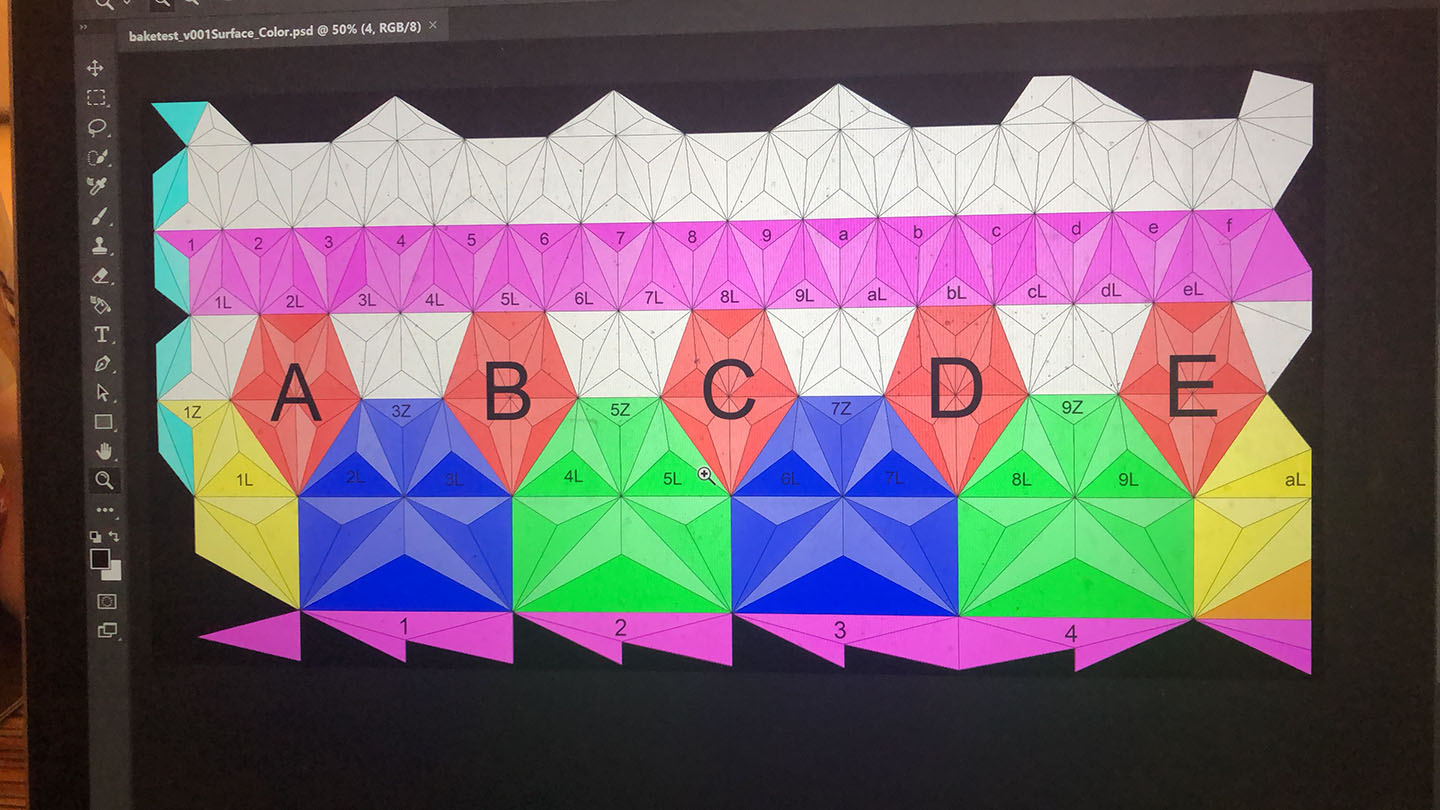

This is the UV for the Pulse. Cinimod did the content for the exhibit and took my OBJ and UV’d it to work best for their content creation. From here I got Cinimod’s UV’d Pulse and generated a lineup grid by applying textures to the mesh and then baking this out onto the UV. I then added numbering and letters to identify key elements of the structure to have a fighting chance at lineup.

Sam and Rich did a fantastic job of lineup and blending everything, as well as programming the timeline to work with Cinimod’s OSC triggers from their interactivity software.

Sam (holding the pulse) & Rich (right)

For the interactivity element of the Pulse, Cinimod created a station where delegates got their face scanned and placed a small version of the Pulse onto the centre holder. The user’s facial expression and their choice of pulse dictated what content was displayed on the floor, the LED, the Pulse and the surrounding floor screens.

So! That was a technical rundown of one element of Adobe Summit 2018 London. I wasn’t intending to write so much and have so many pictures but there you go, I couldn’t have described it with any less. The whole thing was brought together by a massive team, and credit to everyone for pulling off a technically packed event, well managed by Pete Harding.

Image courtesy Taylor Bennett Partners & Hawthorn

Posted in Art, d3, Projection Mapping

Tagged c4d, cad, d3, hawthorn, lazer scan, planning, rich porter, sam lisher

90 Minutes

I’m in Moscow working for Whitelight, in turn working for ITV, on the Russian World Cup. I’m looking after d3 (disguise) content to screens in the studio, where we are based in the middle of Red Square. We’ve been here for just over 2 weeks and are here for another 4 weeks.

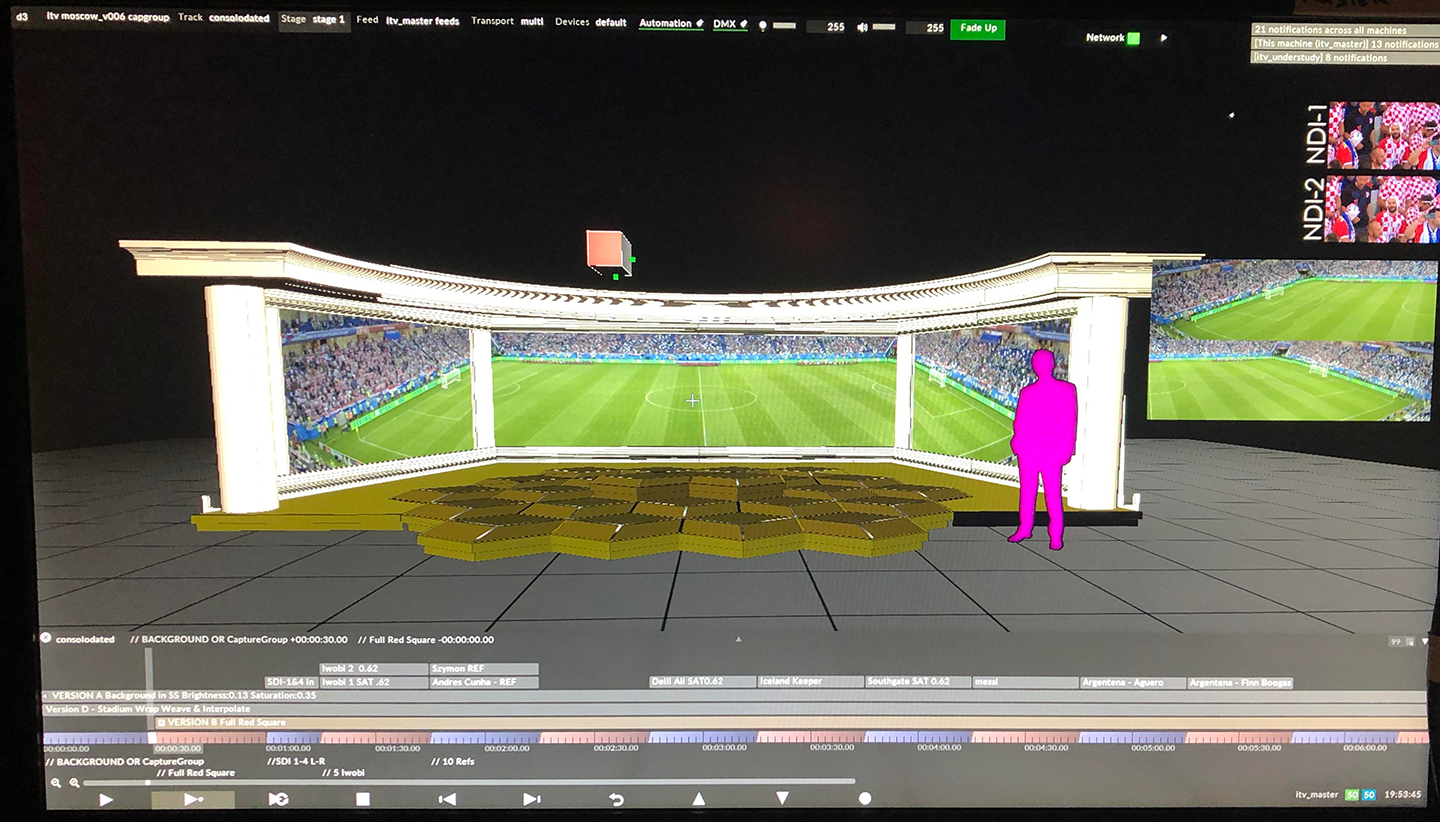

It’s been such a long time since posting. I’ve been wanting to do an update post and catch up on the past year but the sheer amount to cover is pretty daunting; it’s been a busy 12 months. Instead of trying to catch up, here is what I’m up to now. We have a medium size studio with a view out onto Red Square and LED screens either side. In addition we have another LED screen which can be brought in and out and which covers the window, giving us the ability to fake that the studio is in the stadium. Below is a shot taken of my d3 TX monitor.

I have a brilliant little snug in the studio which looks small from the outside but is the ideal amount of space and also out of the way so you don’t end up with people putting shit on your desk.

This isn’t just any studio, this is an augmented reality studio on a couple of levels. The side LED screens serve a couple of purposes: they either have a background and a graphic on, framing the presenters, or they are relaying the feed from 2 cameras behind the LED looking out of the window. The camera relay serves to extend the view out of the window, using the LED as “fake windows”. To help with this effect the jib camera is sending out tracking data using the Stype system, so the camera images are manipulated as the camera moves, adding to the effect that the LED really is a window. Below is the only photo I have. I hadn’t really taken many pictures and wasn’t intending to blog about this, but the words seem to have started flowing again.

This was in the early stages of image alignment. The fake AR roof is looking pretty good but a lot more work went into the sides. It’s an incredibly difficult task trying to align and manipulate a flat 2D image (the camera view) to a 3D environment like this and get the foreground and background elements of the 2D plate to match. Deltatre are looking after the tracking and manipulation of the camera images and passing those to d3, and then I’m mapping these to the LED. The Deltatre team have done a really good job and the AR and perspective matching is looking really good now.

I forgot to mention that in addition to the “through the window” perspective tracking, there is also a fake AR dome which is composited onto the final studio shot before going out on TX. The fourth image in this blog shows this pretty well.

It’s been a good couple of weeks so far. Have a look at this video presented by Andy, who championed and made the 2 level AR idea happen in the ITV Sport studio. I’ve written this post in less than 90 minutes whilst the match is on. I feel pretty rusty at writing these posts so hopefully they will get better!

Posted in Creative, d3

Tagged ar, augmented reality, footbal, fwc, itv, LED, moscow, russia, studio, whitelight

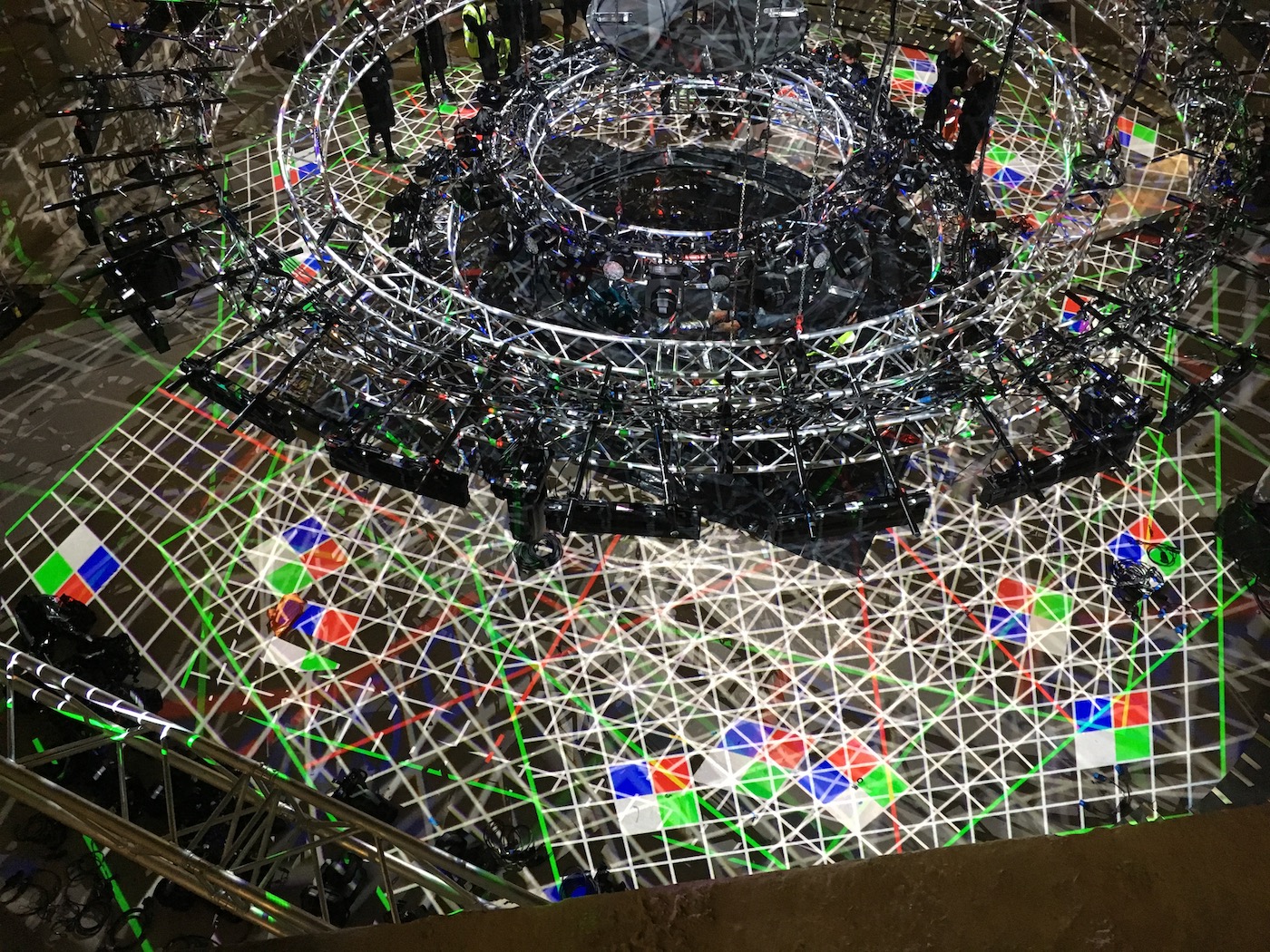

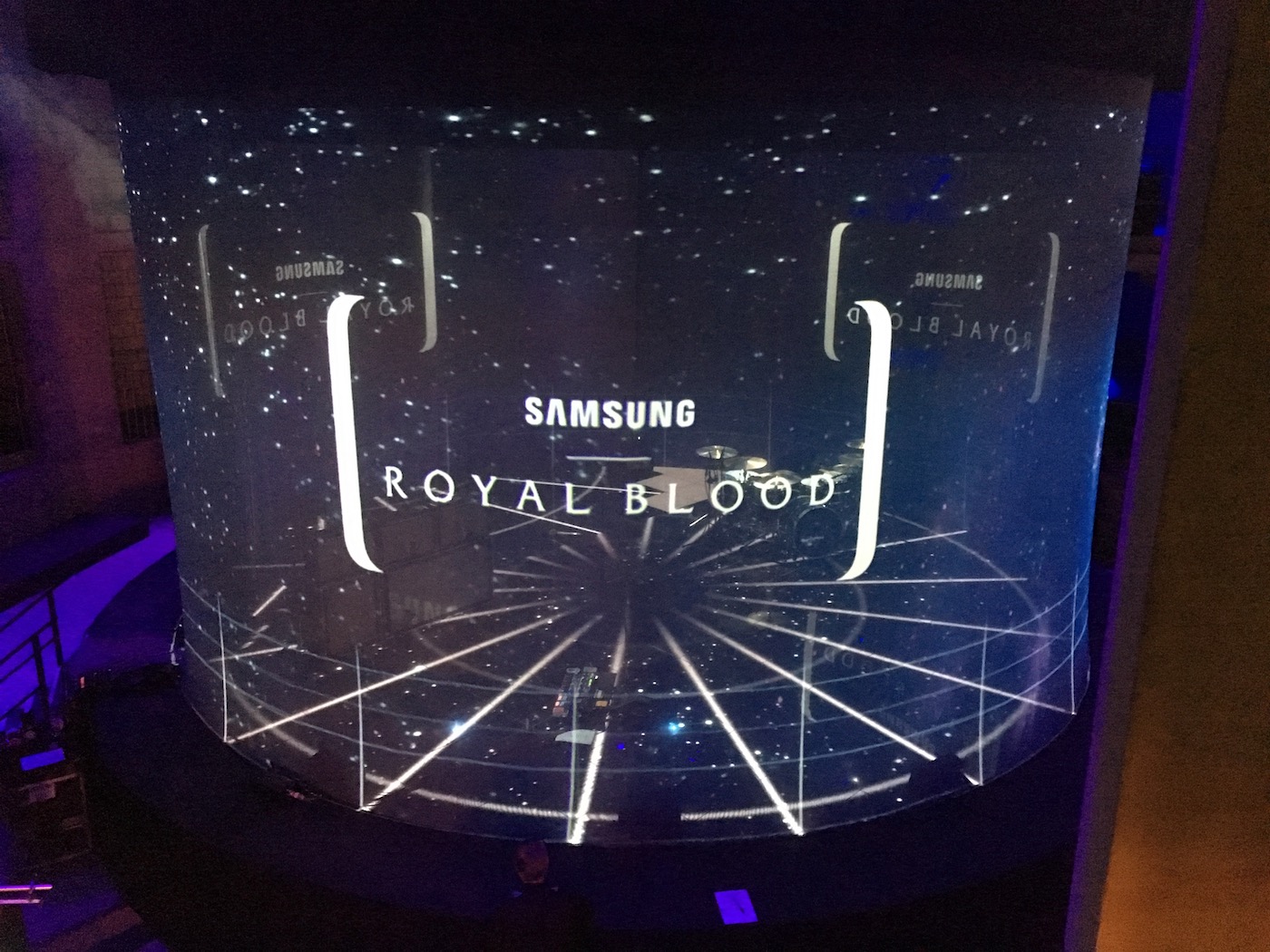

Casino Royal Blood

Straight from the job in Paris I was on the Eurostar and back in London for my next job. I knew that we had a huge circular gauze and 12 x 0.68:1 projectors to cover it, as I’d been doing the projection planning in the run up to it. I got to site and met Rob, who was running the job, and we went through what was what and where. It was quite a nice easy start to the job, not too rushed, just steady.

Above, this is Gary setting up the skinny laser which I had initially planned to use when lining up the projection on the gauze to get an equal horizon all the way around. In addition to the gauze we had a huge LED floor which tied in with the projection on the gauze.

I was using d3, my favourite weapon of choice, to line this up and had thought a lot about the best method to do this when setting up the project. I made sure that the cylinder object in the project had vertices matched to divisions on the LED floor; this meant I had the perfect starting point for quick cal. I literally put a rotational lineup grid on the LED floor and used it to quick cal the projectors for the bottom points of the gauze.

For the upper points, I placed a horizontal laser at the height of the top of the virtual mesh and then used another vertical laser to mark the vertices where the lasers crossed. This meant I could create a point in the physical world which was almost identical to the location in the virtual world, giving me perfect quick cal points.

The method worked really well and I was very pleased that the thought and effort to get the model and the lineup grids spot on paid off. The gauze did bow in once it was stretched, but that is the nature of fabric without any support in the centre. A little subtle warp across all the projectors pulled this in.

The event was a “secret gig” by the band Royal Blood and also a launch event for the Samsung Galaxy S8. Normally the drummer would have a drum mat down, which helps the tech place the drums in the correct place each time and also helps to stop them sliding about on the floor. For this gig the whole floor needed to be visible and they were gluing small blocks to the floor to keep the drum feet in place. I can’t remember how it came about but I ended up making an exact Photoshop drum mat based on a photo of the original mat. I then put it on the LED floor and, because I knew the pitch of the LED, it was scaled perfectly first time when displayed.

With a little bit of positioning and rotation, the band’s drum tech placed the kit on the LED floor marks and it pretty much lined up perfectly. It was a really satisfying task and looked pretty cool at the same time.

Bryte Design were in charge of the design for the event and Jack Banks was in charge of creative video. It was nice to meet the Bryte team and also to meet Jack again, who we figured out I had met a million years ago when taking part in the first d3 training back in 2011.

The gig went well and everyone was pretty happy; there’s a video for one of the songs below. I don’t think the videos do this gig any justice, it was much better in person.
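The drum mat scaling is just real-world size divided by pixel pitch. Here is a tiny Python sketch of that sum; the 2000 mm mat size and 5 mm pitch are made-up numbers for illustration, not the real figures from the gig, and `mm_to_pixels` is my own helper name.

```python
def mm_to_pixels(size_mm, pitch_mm):
    """Number of LED pixels needed to show something size_mm long at true scale."""
    if pitch_mm <= 0:
        raise ValueError("pixel pitch must be positive")
    return round(size_mm / pitch_mm)


# Hypothetical numbers: a 2000 mm x 1600 mm drum mat on a floor with 5 mm pitch.
mat_width_px = mm_to_pixels(2000, 5)
mat_height_px = mm_to_pixels(1600, 5)
print(mat_width_px, mat_height_px)
```

Size the artwork to those pixel dimensions and, as long as the floor is mapped 1:1, it shows up life size without any trial and error.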

Posted in Uncategorized
