In early March I got a phone call from Pod Bluman of Bluman Associates asking if I was up for being involved in a projection mapping project for Nike Air Max Day 2023. Already on the project were Rich Porter, looking after the projection study and project setup, and Lewis Kyle White, looking after texturing and content for the giant new Nike Air Max we were helping the agency Amplify to launch.

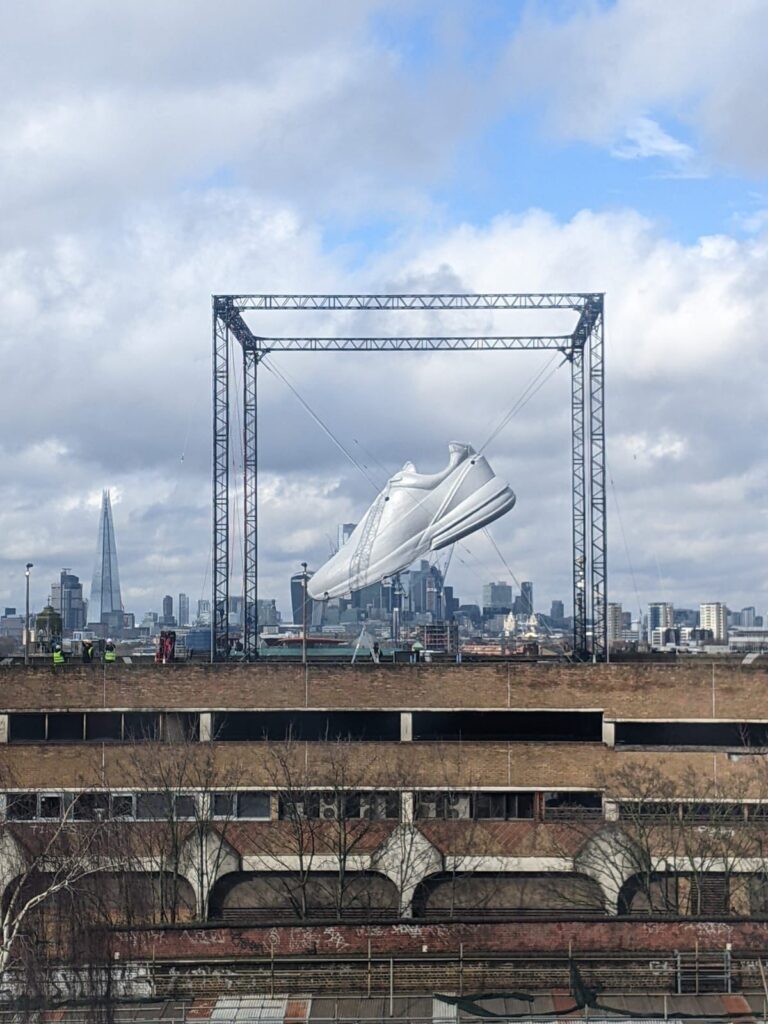

The giant shoe we were projecting onto was to be situated on top of a car park in Peckham, opposite the venue hosting the launch party for the new Nike Air Max. Because of the shoe's elevation and the forecast high winds, my job was to track the shoe so that the projection would stick to it if it was buffeted by the wind. The shoe was hung on steel cables, and given the nature of the rigging it was impossible to keep it 100 percent static.

Lewis did a great job of remodelling the shoe from a high-poly mesh into a low-poly mesh, ready to be sent to Stage One to be machined and built at a real-life size of 10m from toe to heel. The whole thing was suspended in the middle of a 17m cube of truss and supported by heavy steel cables. Above is the incredible texturing work Lewis put into the model; the fabric looked so realistic when projected onto the shoe.

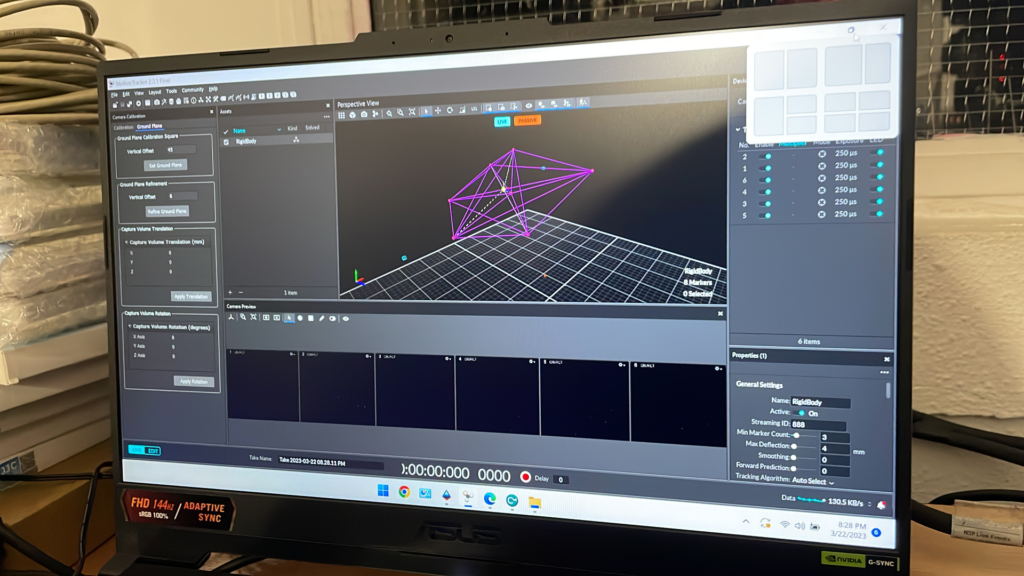

In the pre-planning stages the guys from Bright Studios helped out, consulting on the best methodology for tracking. In the end we all agreed that the most flexible solution was to use OptiTrack to track the shoe and Stage Precision to format the data in a way the disguise media server could receive.

Bright provided us with an eight-camera tracking system, and we had two days of pre-production in the Bluman studio space to do some testing and work out the technicalities before going onsite. It was great to see everyone I'd not seen in ages, and to be joined by Ed, who was programming video for the launch party across the road from the car park.

When I got to the studio space, after a good natter with everyone, I set up some basic rigging for the cameras. We were meant to have a 1m 3D print of the actual shoe to test with, but we couldn't get one done in time, so instead Lewis decided to hang the sample shoe from the truss for the craic.

We had a great couple of days testing and working out a workflow for getting the tracking data from Motive to disguise, with Stage Precision in the middle to align the tracking data with the virtual world. Over the weekend the team worked on getting the truss and the shoe rigged, ready to get the projection in and lined up from Monday.

The shoe could be seen from the party venue, on the other side of the railway tracks from the car park that houses Peckham Levels.

The first thing I had to do once onsite was attach the tracking markers to the shoe; these are small 10mm retroreflective markers. The tracking cameras have built-in lights that blast out infrared, and the markers reflect that infrared light back to the cameras. With multiple cameras looking at the same markers, each marker's position can be triangulated, building up a 3D map of where the markers are.
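To make the "multiple cameras" idea concrete, here is a minimal sketch of textbook linear (DLT) triangulation: each camera that sees a marker contributes two linear equations, and the least-squares solution of the stacked system is the marker's 3D position. This is not Motive's actual reconstruction (that is proprietary); the camera positions, focal length and helper names below are made up for illustration, and the hypothetical cameras all look straight down +Z to keep the maths short.

```python
import numpy as np

def projection_matrix(cam_pos, focal_px=1200.0, cx=960.0, cy=540.0):
    """Hypothetical pinhole camera at cam_pos looking down +Z (no rotation)."""
    K = np.array([[focal_px, 0.0, cx],
                  [0.0, focal_px, cy],
                  [0.0, 0.0, 1.0]])
    Rt = np.hstack([np.eye(3), -np.asarray(cam_pos, dtype=float).reshape(3, 1)])
    return K @ Rt

def project(P, point):
    """Project a 3D point to pixel coordinates (u, v)."""
    x = P @ np.append(point, 1.0)
    return x[:2] / x[2]

def triangulate(Ps, pixels):
    """Linear (DLT) triangulation of one marker seen by several cameras."""
    rows = []
    for P, (u, v) in zip(Ps, pixels):
        # Each view gives two linear constraints on the homogeneous point X.
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    A = np.stack(rows)
    # Least-squares solution: right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]

# Four made-up cameras and one marker roughly 10 m away.
cams = [projection_matrix(c) for c in [(-2, 0, 0), (2, 0, 0), (0, 2, -1), (0, -2, -1)]]
marker = np.array([0.3, 0.5, 10.0])
pixels = [project(P, marker) for P in cams]
estimate = triangulate(cams, pixels)  # should be very close to `marker`
```

With real, noisy detections the same least-squares setup still applies; more cameras seeing a marker simply adds more rows and averages out the noise.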

Using a small cherry picker, I covered the lower part of the sole, the area we could reach, with seven markers. This was enough for the cameras to see a large enough area to detect the movement of the shoe.
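Once several markers are tracked, the shoe's movement can be described as a single rotation plus translation. Motive handles this internally when you define a rigid body; the sketch below is not Motive's code but the textbook Kabsch algorithm, which finds the best-fit rotation `R` and translation `t` mapping the markers' rest positions onto their current positions. The marker coordinates and the "gust" are made up, and the helper names are hypothetical.

```python
import numpy as np

def rigid_pose(ref, cur):
    """Kabsch: best-fit rotation R and translation t with cur ≈ ref @ R.T + t."""
    ref_c = ref.mean(axis=0)
    cur_c = cur.mean(axis=0)
    H = (ref - ref_c).T @ (cur - cur_c)       # 3x3 cross-covariance of centred points
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))    # flip the last axis if SVD gave a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = cur_c - R @ ref_c
    return R, t

def rot_z(deg):
    """Rotation about the Z axis by `deg` degrees."""
    a = np.radians(deg)
    return np.array([[np.cos(a), -np.sin(a), 0.0],
                     [np.sin(a),  np.cos(a), 0.0],
                     [0.0, 0.0, 1.0]])

rng = np.random.default_rng(0)
ref_markers = rng.uniform(-0.5, 0.5, size=(7, 3))           # seven markers at rest, metres
true_R, true_t = rot_z(3.0), np.array([0.04, -0.01, 0.02])  # a hypothetical gust of wind
cur_markers = ref_markers @ true_R.T + true_t
R, t = rigid_pose(ref_markers, cur_markers)

# Any point on the shoe model can then be moved with the same pose.
toe_at_rest = np.array([5.0, 0.0, 0.0])
toe_now = R @ toe_at_rest + t
```

The more spread out the markers are, the better conditioned the fit; markers bunched in a line or in a symmetric pattern make the rotation ambiguous.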

Below is a reconstruction of the markers attached to the shoe in the Motive software. Because the system relies on infrared light, and daylight contains plenty of infrared that washes the markers out, we couldn't calibrate the system or check whether the cameras could see the markers until sundown. A couple of the cameras were close to the system's maximum distance limit, but when we did the first calibration they picked the markers up pretty well. I ended up moving some cameras from the original plan to get better coverage, and after a few settings tweaks the tracking data came in really well.

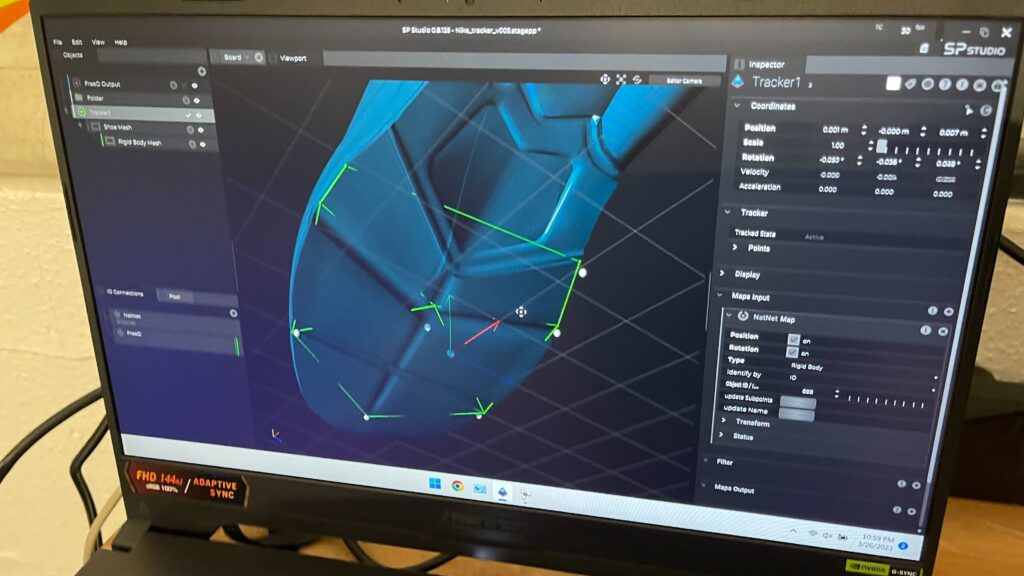

Once I had the tracking data set up, I switched on streaming in Motive so I could pick up the NatNet data in Stage Precision. I was going to use the world-align tools in SP to align the tracked points to the fixed points the projection on the shoe was calibrated to, but this didn't quite work with this setup (I'm told this is fixed in the current version). Instead I manually set the XYZ offsets to match the tracking data to the static position of the shoe, which worked a treat. Once all the offsets were correct, I passed the tracking data to disguise as FreeD tracking packets, which Rich attached to the shoe object. The whole process of lining up the projection and then offsetting the data relied on good weather, as the shoe had to be static for the initial alignment to work; luckily we had enough calm moments for this to happen.
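Stage Precision did the offsetting and FreeD output for us, so the sketch below is not SP's implementation, just an illustration of the two steps. The offset is captured once while the shoe is at rest (projection position minus tracked position) and then added to every live sample. The packet layout follows my understanding of the FreeD D1 message: 29 bytes, pan/tilt/roll in 1/32768 of a degree, X/Y/Z in 1/64 mm as 24-bit big-endian signed values, zoom and focus as 24-bit values, two spare bytes, and a checksum of 0x40 minus the sum of the other bytes. Treat that as an assumption and check it against the FreeD spec and disguise's documentation. The function names are hypothetical, and any axis remapping between Motive, SP and disguise is ignored here (assumed to be in the same frame already).

```python
def offset_from_static(tracked_m, projection_m):
    """Hypothetical: offset captured while the shoe is at rest (metres)."""
    return tuple(p - t for p, t in zip(projection_m, tracked_m))

def apply_offset(tracked_m, offset_m):
    """Shift a live tracked position into the projection scene (metres)."""
    return tuple(t + o for t, o in zip(tracked_m, offset_m))

def to_s24(value):
    """Encode an int as 24-bit big-endian two's complement."""
    if not -(1 << 23) <= value < (1 << 23):
        raise ValueError("value out of 24-bit range")
    return (value & 0xFFFFFF).to_bytes(3, "big")

def freed_d1(camera_id, pan, tilt, roll, x_mm, y_mm, z_mm, zoom=0, focus=0):
    """Build a 29-byte FreeD D1 packet (layout assumed, see lead-in)."""
    body = bytes([0xD1, camera_id])
    for angle_deg in (pan, tilt, roll):
        body += to_s24(round(angle_deg * 32768))  # 1/32768 degree units
    for pos_mm in (x_mm, y_mm, z_mm):
        body += to_s24(round(pos_mm * 64))        # 1/64 mm units
    body += zoom.to_bytes(3, "big") + focus.to_bytes(3, "big")
    body += b"\x00\x00"                           # spare bytes
    checksum = (0x40 - sum(body)) % 256
    return body + bytes([checksum])

# Calibrate once while the shoe is static, then apply to each live sample.
offset = offset_from_static((0.1, 1.2, -0.3), (2.0, 5.0, 1.0))
live = apply_offset((0.1, 1.2, -0.3), offset)
packet = freed_d1(1, 0.0, 0.0, 0.0, *(v * 1000.0 for v in live))  # metres -> mm
```

In practice a packet like this would typically be sent over UDP to whatever address and port the receiving FreeD input is configured for.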

In the end everything came together: as the shoe was buffeted by the wind, the tracking data passed to the disguise project helped the projection stick to the shoe, a great success. On top of that, it was great to catch up with a load of people I'd not seen in ages and have a really good time in Peckham over a few beers after work.